Chapter 5 Dimensionality reduction

Each gene in the data represents a different dimension of the data. Reducing the number of dimensions in our data has multiple benefits, including reducing the computational work needed for downstream analyses. It also reduces the noise in the data through averaging the signal across multiple genes.

Dimensionality reduction is a very common technique used in data science in general, not just in scRNA-seq analysis. You will find yourself using it over and over whenever you work with high-dimensional data. Dimensionality reduction is possible for genomic expression methods because so many genes have correlated expression. This is a consequence of different genes being involved in the same biological processes.

5.1 Calculating and choosing PCs

We first use principal component analysis (PCA), which is a dimensionality reduction method that maximizes the amount of variation captured by each component, or PC.

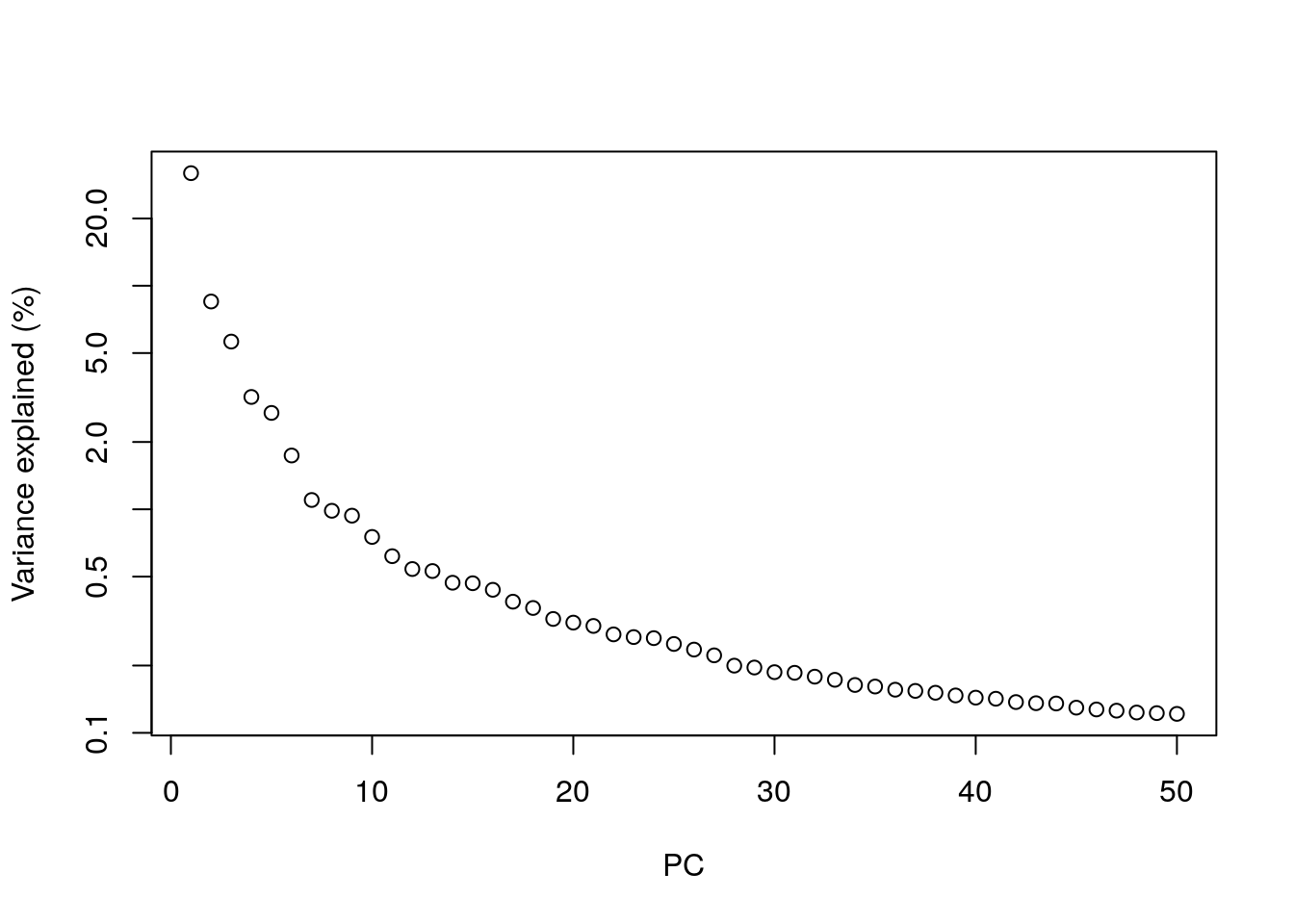

It’s up to the researcher to choose how many PCs to use for downstream analyses. More PCs mean that more biological signal is retained in the data, but it also increases the potential for noise. In our analyses, we will use 50 (the default). We will also use the top 1000 genes to calculate the PCs.

library(scran)

# calculating PCA

# the denoisePCA command calculates PCs and removes those that primarily capture technical noise

set.seed(101011001)

sce.zeisel.PCA.1000 <- denoisePCA(sce.zeisel.qc, technical = dec.zeisel.qc, subset.row = top1000.hvgs) #the technical option tells R where to find information about how much of the variation is attributed to "technical", or non-biological, sources

# visualizing the percentage of variation explained by each PC

percent.var <- attr(reducedDim(sce.zeisel.PCA.1000), "percentVar")

plot(percent.var, log = "y", xlab = "PC", ylab = "Variance explained (%)")

In PCA, the total amount of variation captured decreases for each subsequent PC. By the 10th PC, each additional PC is contributing only a small fraction to the total amount of variation explained in the dataset. Excluding them from downstream analyses has no major effect, and researchers will typically choose to include somewhere between 10 to 50 PCs. Including more PCs in the downstream analyses could theoretically cause the calculations to take longer, but in reality most calculations are fast enough that any slowdown isn’t really noticeable.

More detailed information on calculating and choosing PCs for genomic analyses can be found in the Statistics for Genomics: PCA book.

5.2 Applying non-linear visualization methods to PCs

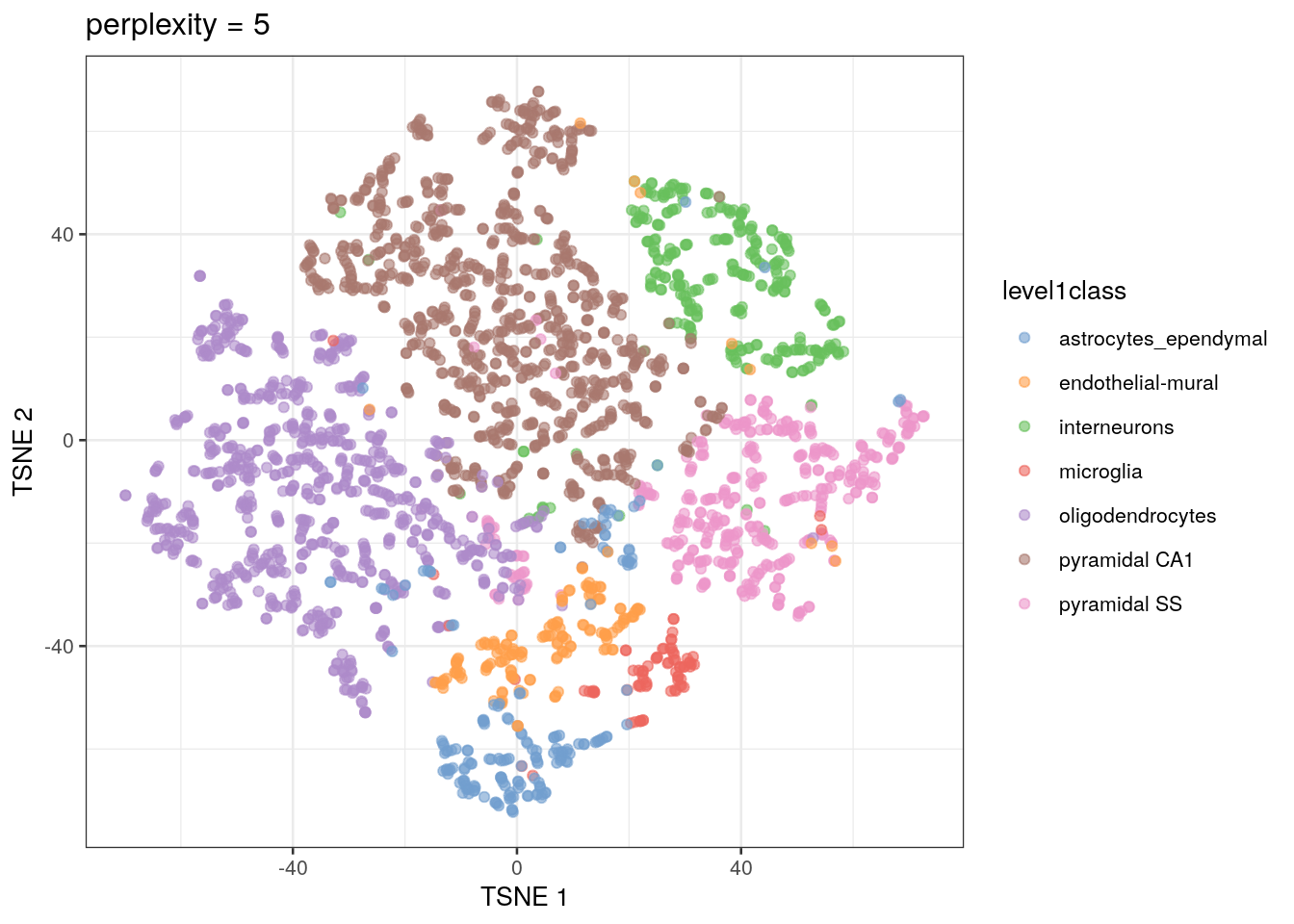

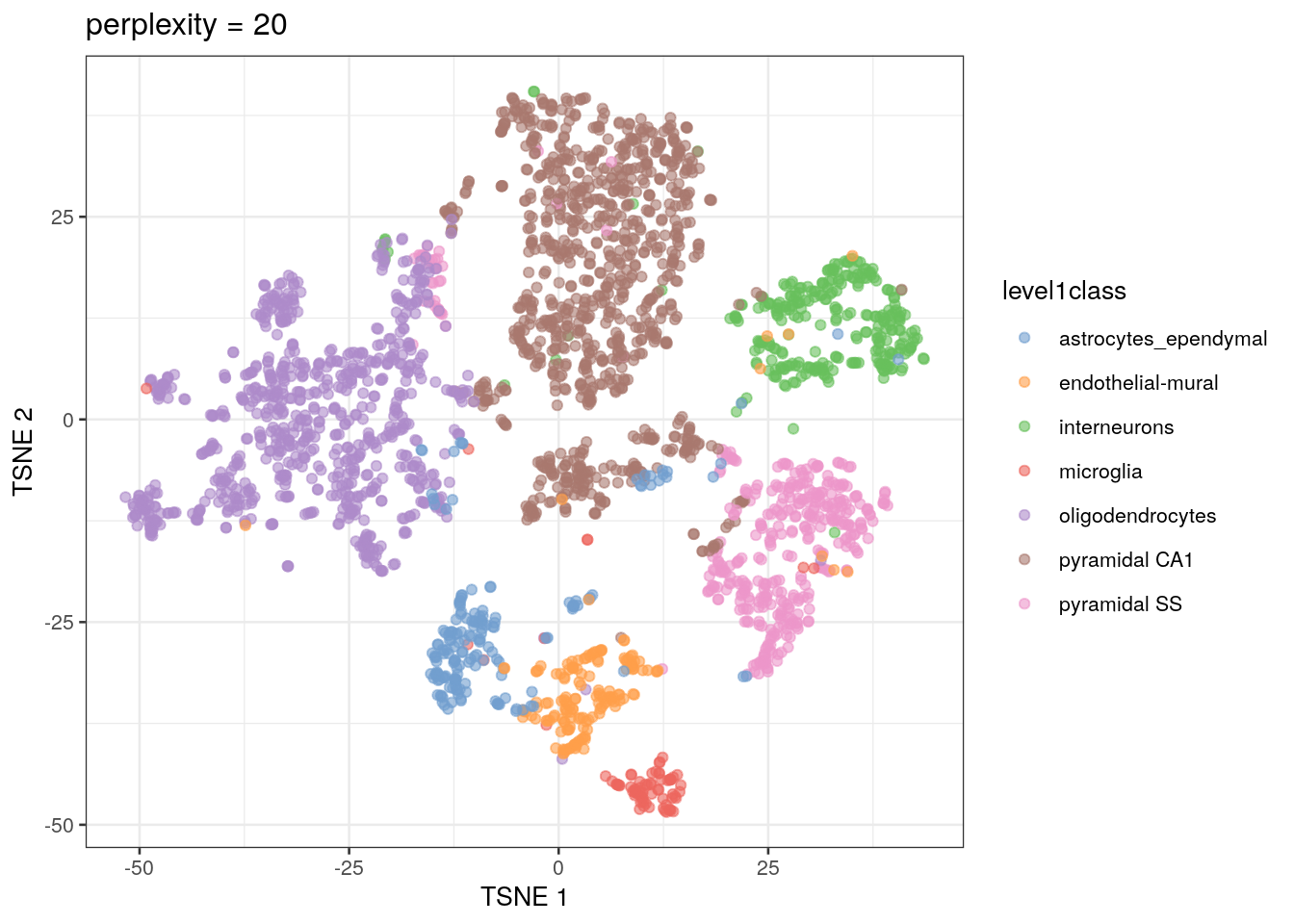

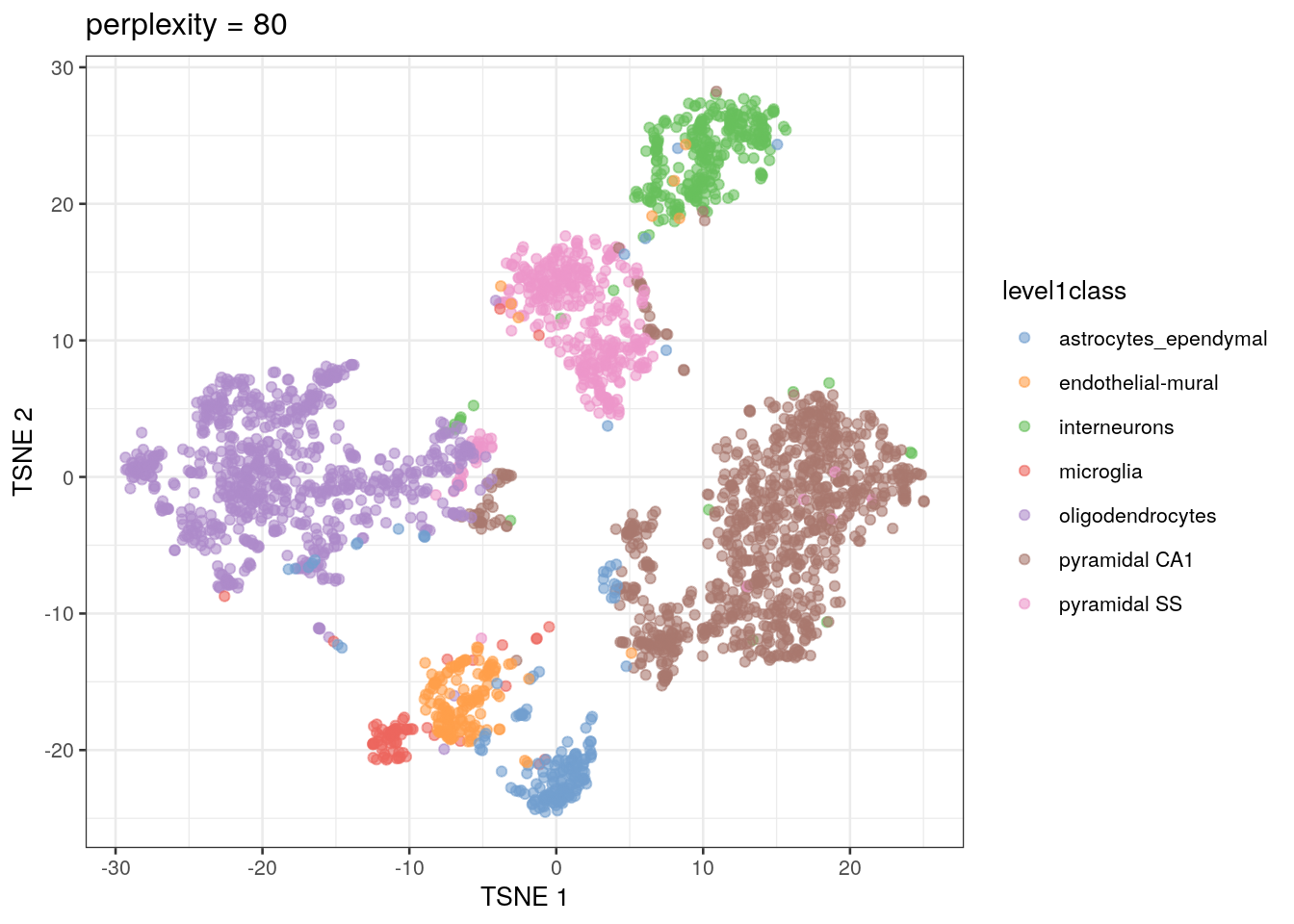

In scRNA-seq analysis, plotting PCs generally does not offer enough resolution to visualize cell clusters. Instead, we rely on additional dimensionality reduction methods that can use non-linear data transformation algorithms. The most common approach is the t-stochastic neighbor embedding (t-SNE) method (Van der Maaten and Hinton 2008).

t-SNE maps high-dimensional data in a low-dimensional space by first calculating the Euclidean distance between each set of points, then converting those distances into the probability that given pair of points are neighbors. On t-SNE plots, points that are members of the same cluster have a high probability of being neighbors. However, you can’t judge the similarity of different clusters based on their position on the final plot, because the t-SNE algorithm does not retain that information.

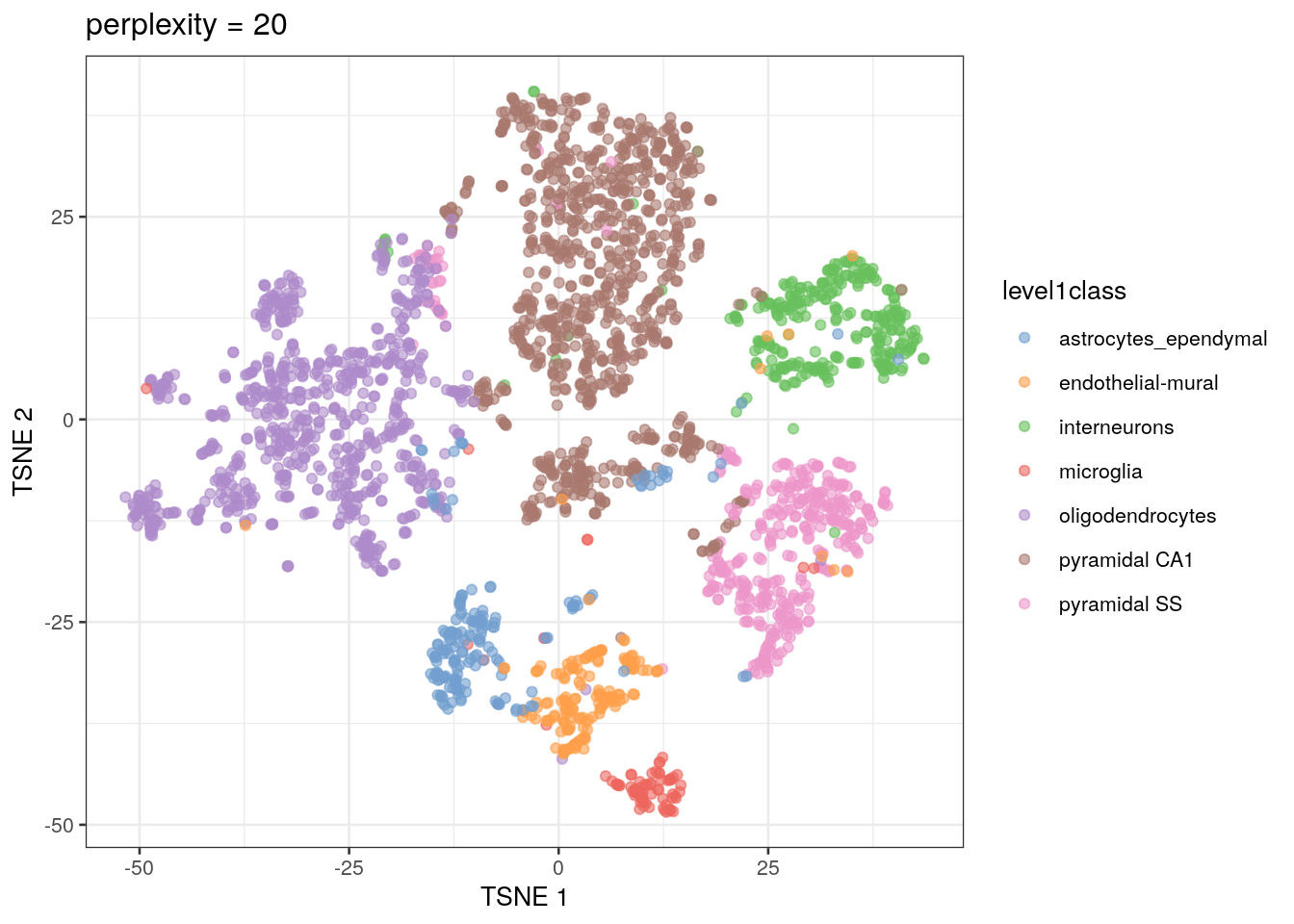

The t-SNE approach is computationally complex. In practice, we reduce the computational complexity in scRNA-seq analysis by performing t-SNE calculations on the top PCs in a dataset (this both decreases the amount of computational power and time needed for analysis). We also need to set an initial starting seed and the perplexity parameter (the number of effective neighbors for each point). This parameter will determine the resolution of the plot. Lower perplexity values allow for finer resolution of population structure but can also be noisy. It’s a good idea to test multiple perplexity values when running your t-SNE analysis.

We’re going to use the PCs calculated using the top 1000 highly-variable genes (a common threshold) for the rest of the analyses.

library(BiocSingular)

# this code first calculates the t-SNE values using PCs, and then creates a plot of the first two t-SNE dimensions

set.seed(100)

sce.zeisel.tsne5 <- runTSNE(sce.zeisel.PCA.1000, dimred = "PCA", perplexity = 5)

out5 <- plotReducedDim(sce.zeisel.tsne5, dimred = "TSNE",

colour_by = "level1class") + ggtitle("perplexity = 5")

set.seed(100)

sce.zeisel.tsne20 <- runTSNE(sce.zeisel.PCA.1000, dimred = "PCA", perplexity = 20)

out20 <- plotReducedDim(sce.zeisel.tsne20, dimred = "TSNE",

colour_by = "level1class") + ggtitle("perplexity = 20")

set.seed(100)

sce.zeisel.tsne80 <- runTSNE(sce.zeisel.PCA.1000, dimred = "PCA", perplexity = 80)

out80 <- plotReducedDim(sce.zeisel.tsne80, dimred = "TSNE",

colour_by = "level1class") + ggtitle("perplexity = 80")

out5

out20

out80

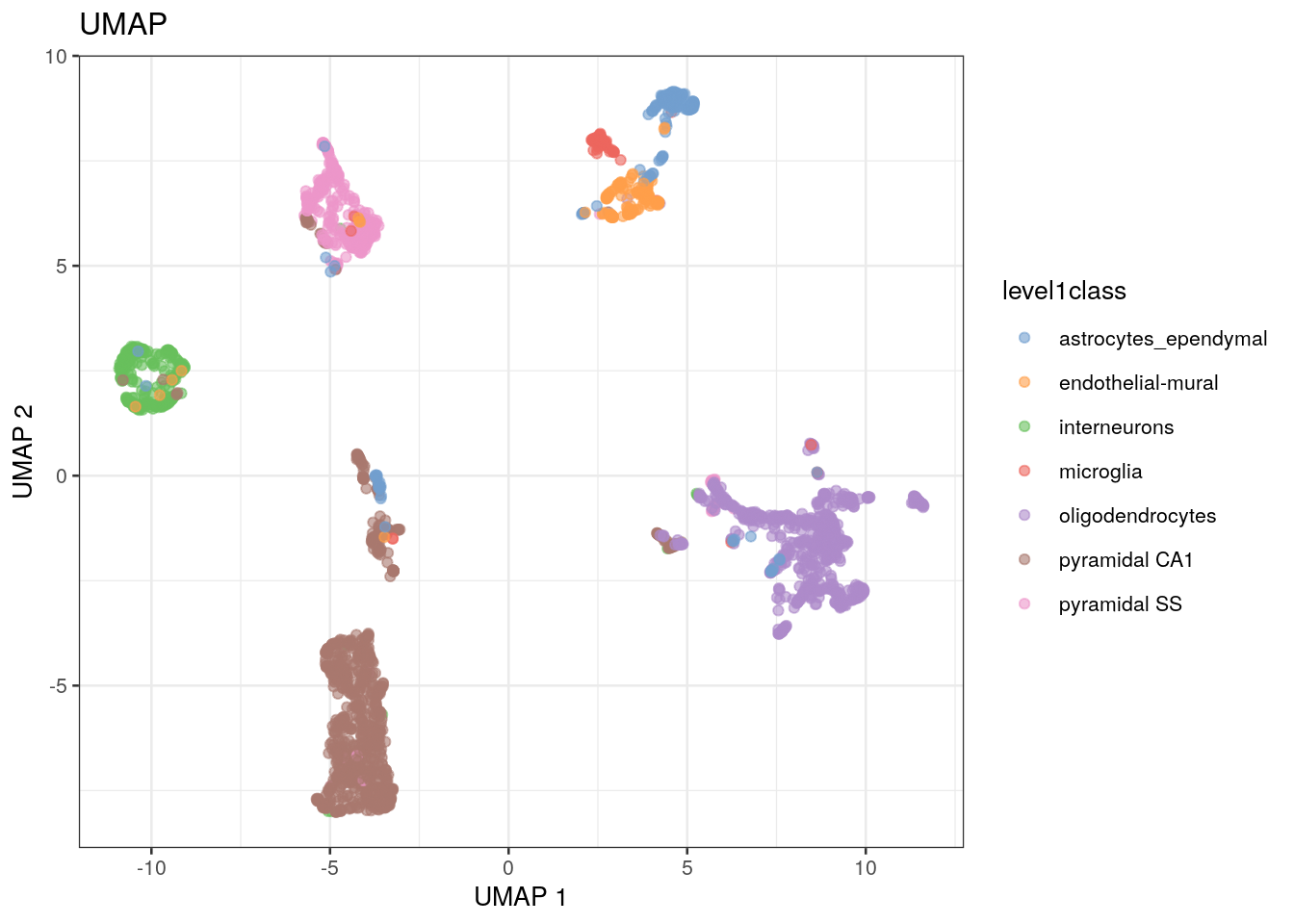

Some researchers will use a different method for the non-linear visualization step in their analysis. This algorithm, called uniform manifold approximation and projection (UMAP), is faster and preserves more of the global data structure when reducing dimensions compared to t-SNE (that is, you get more information about the similarity of clusters, not just of points). As a result, though, the resolution within each cluster is reduced. UMAP is becoming the method of choice as scRNA-seq datasets become larger and larger.

# calculate the UMAP values from the PCs, then plot the first two UMAP dimensions

set.seed(1100101001)

sce.zeisel.umap <- runUMAP(sce.zeisel.PCA.1000, dimred = "PCA")

out.umap <- plotReducedDim(sce.zeisel.umap, dimred = "UMAP", colour_by = "level1class") + ggtitle("UMAP")

out20

out.umap

QUESTIONS 1. How does changing the perplexity parameter affect the t-SNE plot?

- How does the t-SNE plot compare to the UMAP plot?