Chapter 5 Modeling Data in the Tidyverse

5.1 About This Course

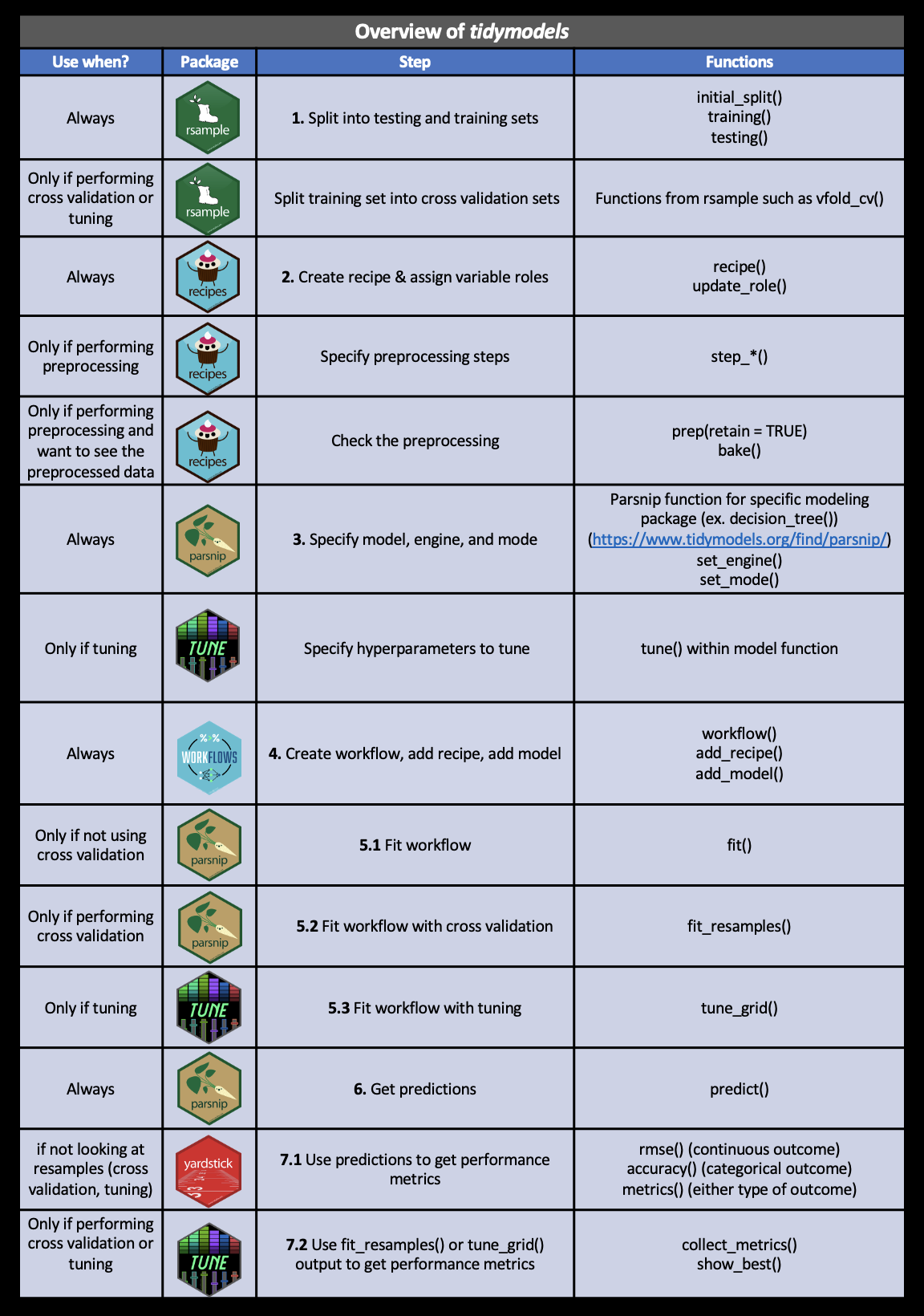

Developing insights about your organization, business, or research project depends on effective modeling and analysis of the data you collect. Building effective models requires understanding the different types of questions you can ask and how to map those questions to your data. Different modeling approaches can be chosen to detect interesting patterns in the data and identify hidden relationships.

This course covers the types of questions you can ask of data and the various modeling approaches that you can apply. Topics covered include hypothesis testing, linear regression, nonlinear modeling, and machine learning. With this collection of tools at your disposal, as well as the techniques learned in the other courses in this specialization, you will be able to make key discoveries from your data for improving decision-making throughout your organization.

In this specialization we assume familiarity with the R programming language. If you are not yet familiar with R, we suggest you first complete R Programming before returning to complete this course.

5.2 The Purpose of Data Science

Data science has multiple definitions. For this module we will use the definition:

Data science is the process of formulating a quantitative question that can be answered with data, collecting and cleaning the data, analyzing the data, and communicating the answer to the question to a relevant audience.

In general the data science process is iterative and the different components blend together a little bit. But for simplicity let’s discretize the tasks into the following 7 steps:

1. Define the question you want to ask the data

2. Get the data

3. Clean the data

4. Explore the data

5. Fit statistical models

6. Communicate the results

7. Make your analysis reproducible

This module is focused on three of these steps: (1) defining the question you want to ask, (4) exploring the data and (5) fitting statistical models to the data.

We have seen previously how to extract data from the web and from databases, and how to clean and tidy it. You also know how to use plots and graphs to visualize your data. You can think of this module as putting those tools to work to start answering questions.

5.3 Types of Data Science Questions

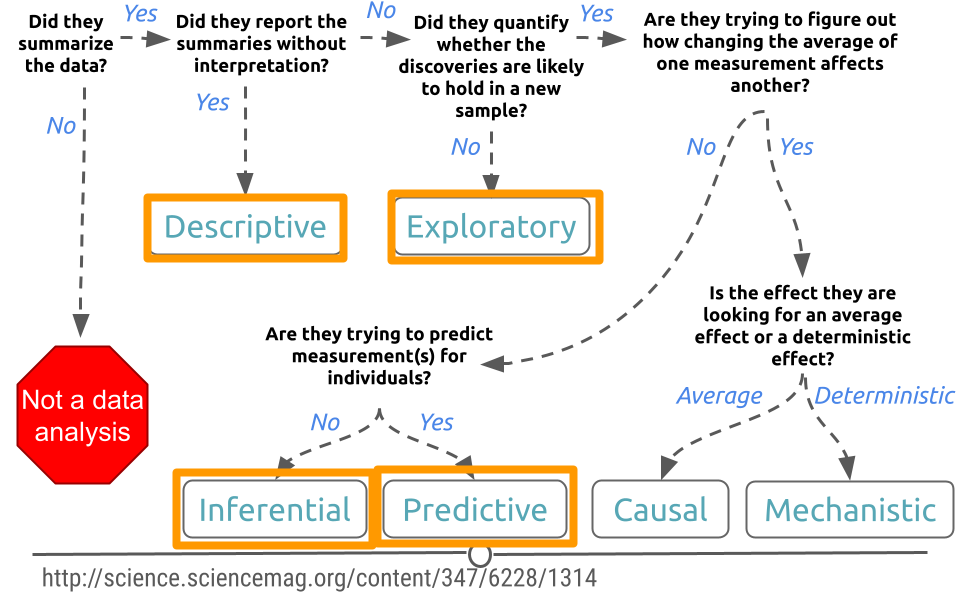

We will look at a few different types of questions that you might want to answer from data. This flowchart gives some questions you can ask to figure out what type of question your analysis focuses on. Each type of question has different goals.

There are four classes of questions that we will focus on:

Descriptive: The goal of descriptive data science questions is to understand the components of a dataset, describe what they are, and explain that description to others who might want to understand the data. This is the simplest type of data analysis.

Exploratory: The goal of exploratory data science questions is to find unknown relationships between the different variables you have measured in your dataset. Exploratory analysis is open ended and designed to find expected or unexpected relationships between different measurements. We have already seen how plotting the data can be very helpful to get a general understanding about how variables relate to one another.

Inferential: The goal of inferential data science questions is to use a small sample of data to say something about what would happen if we collected more data. Inferential questions come up because we want to understand the relationships between different variables but it is too expensive or difficult to collect data on every person or object.

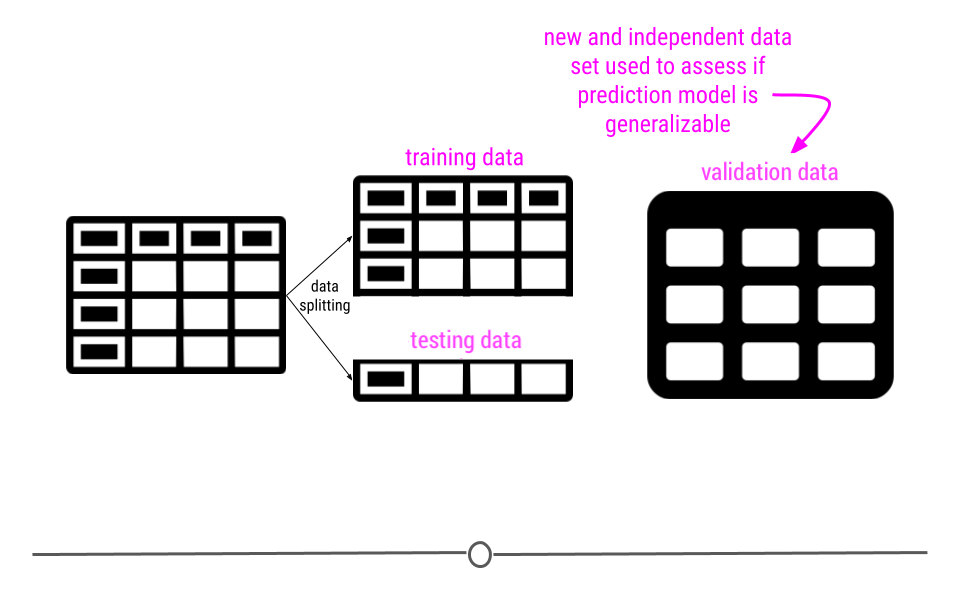

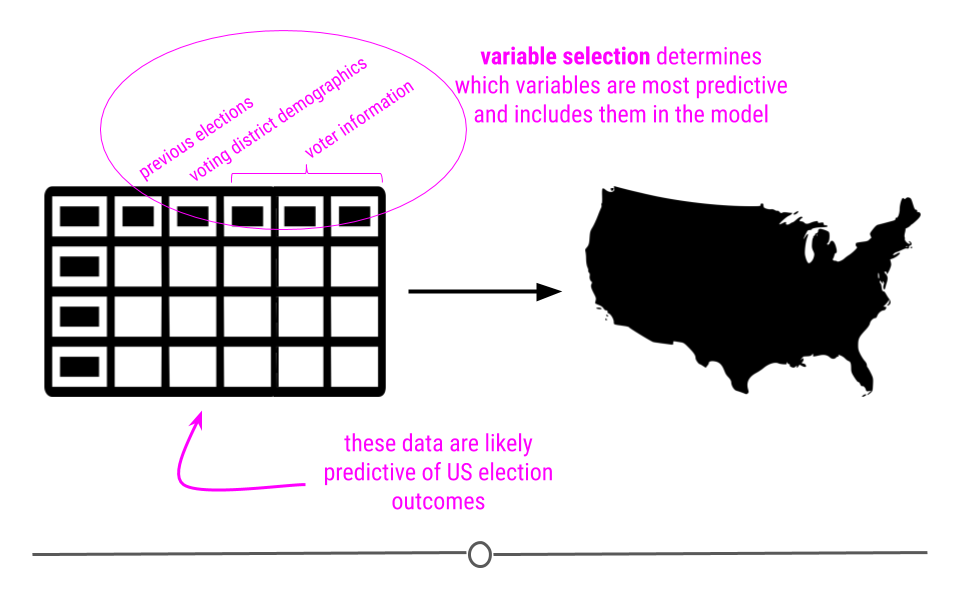

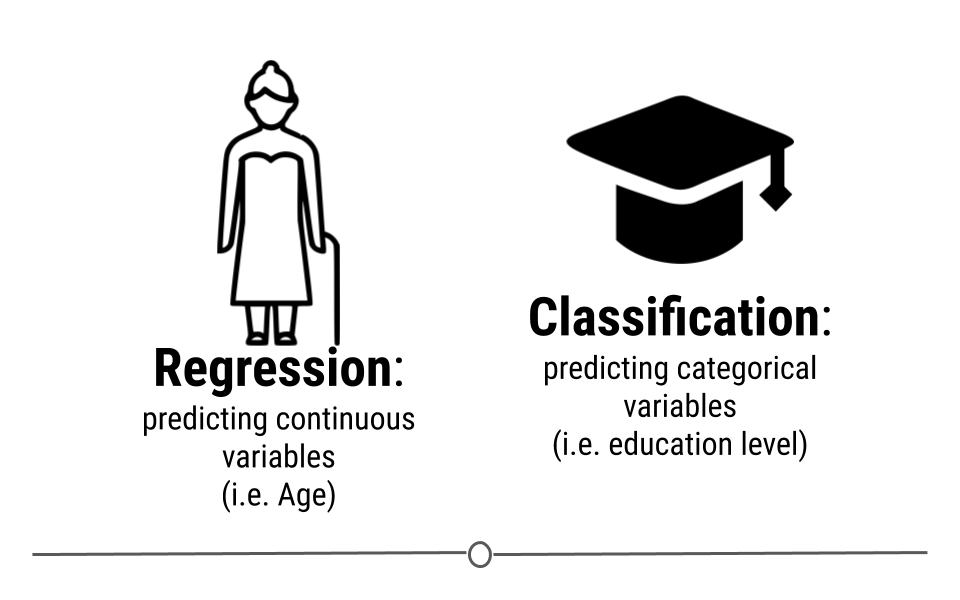

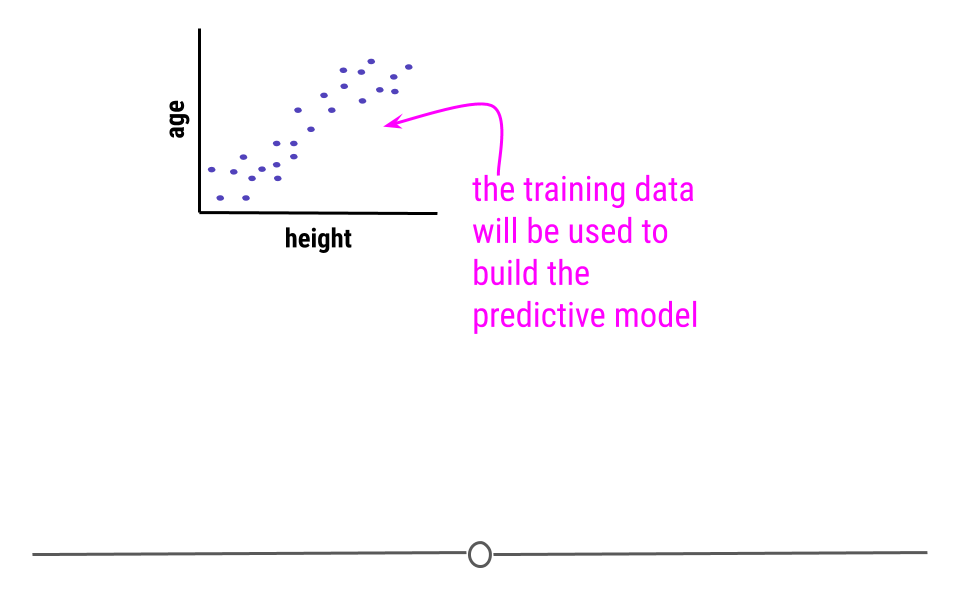

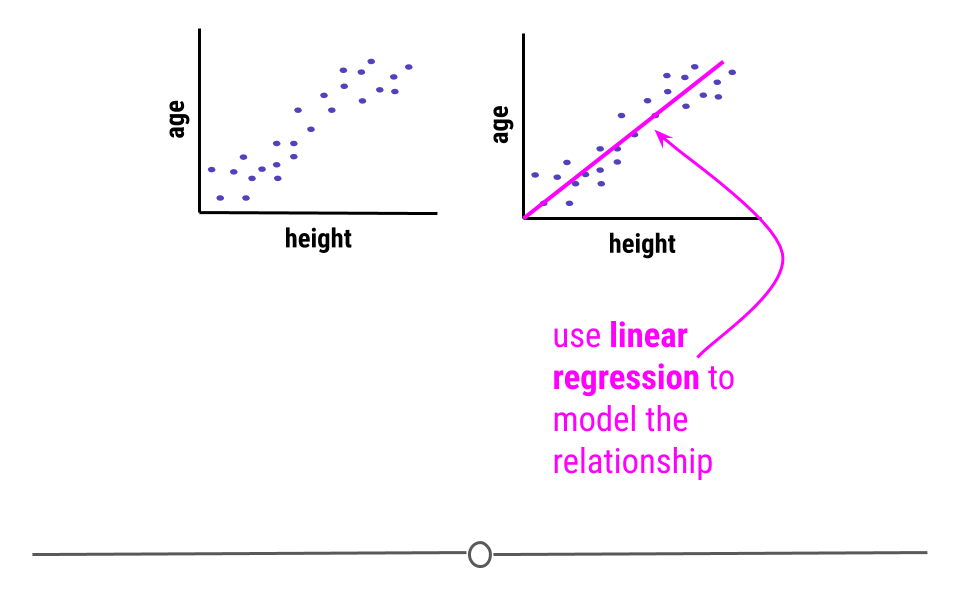

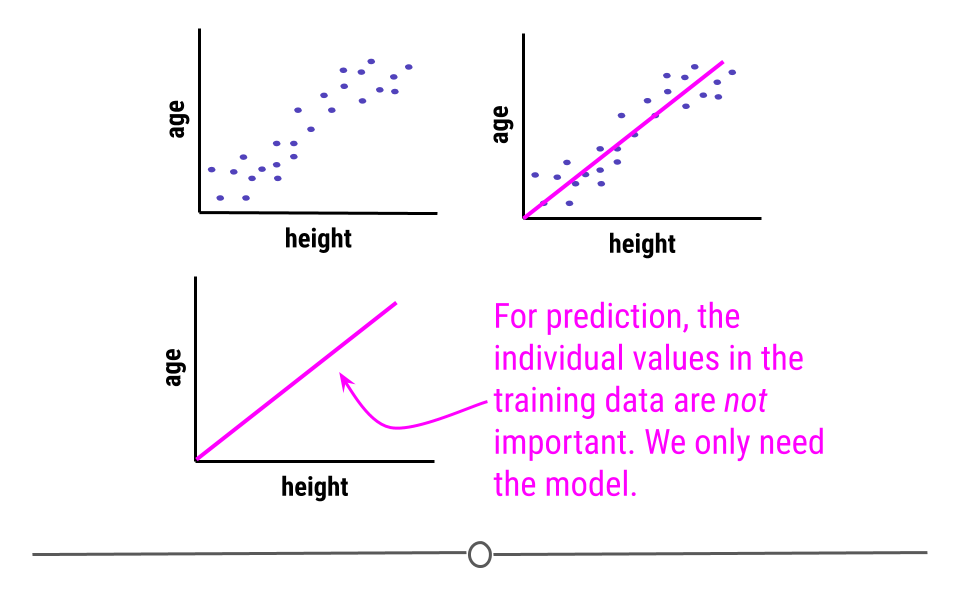

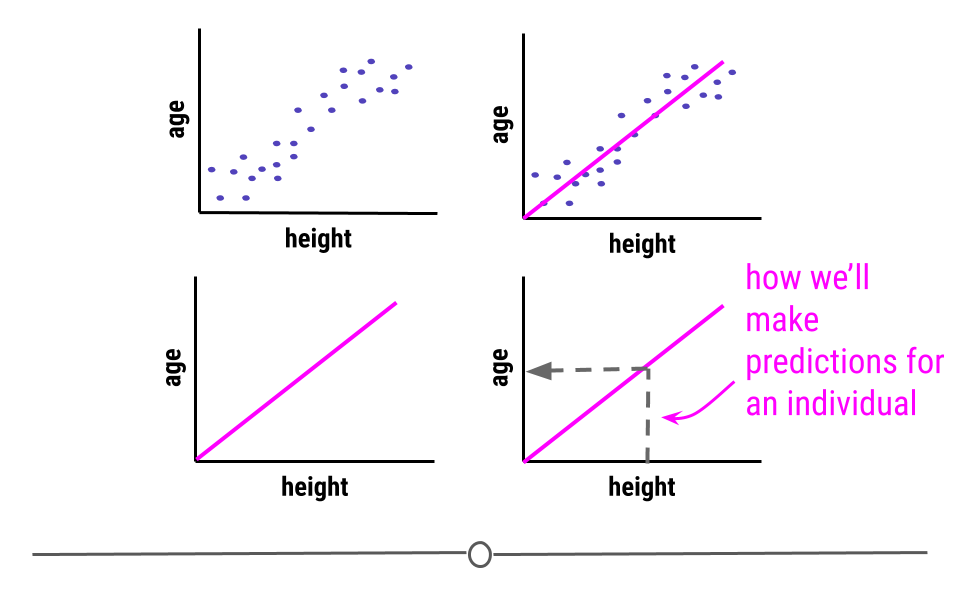

Predictive: The goal of predictive data science questions is to use data from a large collection to predict values for new individuals. This might be predicting what will happen in the future or predicting characteristics that are difficult to measure. Predictive data science is sometimes called machine learning.

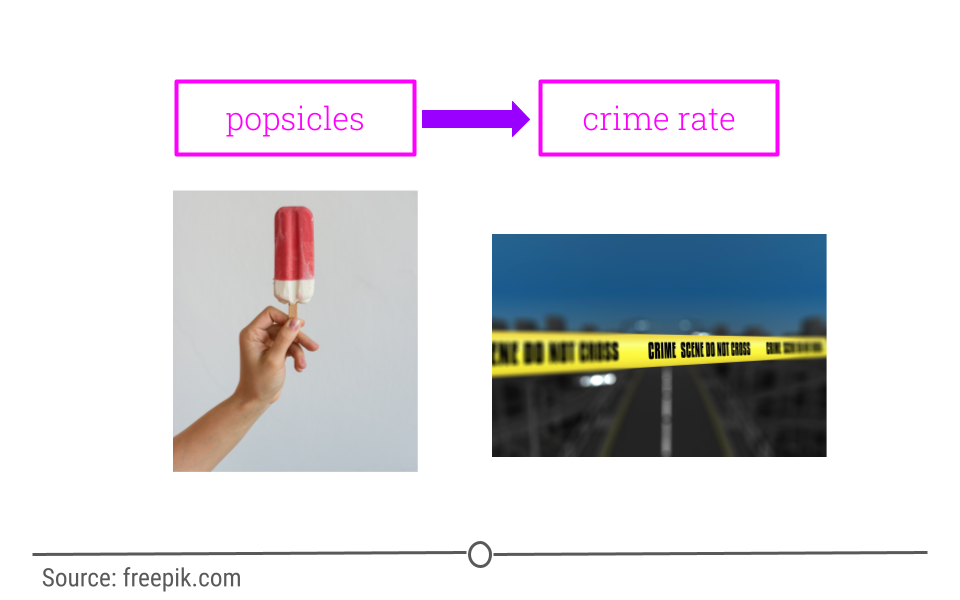

One primary thing we need to be aware of is that just because two variables are correlated with each other doesn’t mean that changing one causes a change in the other.

One way that people illustrate this idea is to look at data where two variables show a relationship but clearly have no causal connection. For example, in a specific time range, the number of people who drowned by falling into a pool is correlated with the number of films that Nicolas Cage appeared in. These two variables are clearly unrelated to each other, but the data seem to show a relationship. We’ll discuss more later.

5.4 Data Needs

Let’s assume you have the dataset that contains the variables you are looking for to evaluate the question(s) you are interested in, and it is tidy and ready to go for your analysis. It’s always nice to step back to make sure the data is the right data before you spend hours and hours on your analysis. So, let’s discuss some of the potential and common issues people run into with their data.

5.4.1 Number of observations is too small

It happens quite often that collecting data is expensive or difficult. For instance, in a medical study on the effect of a drug on patients with Alzheimer’s disease, researchers will be happy if they can get a sample of 100 people. These studies are expensive, and it’s hard to find volunteers who enroll in the study. The same is true of most social experiments. While data are everywhere, the data you need may not be. Therefore, most data scientists at some point in their career face the curse of small sample size. Small sample size makes it hard to be confident about the results of your analysis. So when it’s feasible, a large sample is preferable to a small sample. But when the only dataset available to you is small, you will have to note that in your analysis. Although we won’t learn them in this course, there are particular methods for inferential analysis when sample size is small.
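To make the cost of a small sample concrete, here is a minimal simulation sketch. The population (Normal with mean 50 and standard deviation 10), the two sample sizes, and the helper name `sample_mean_spread` are all assumptions made for illustration; they do not come from a real study.

```r
set.seed(123)  # make the simulation reproducible

# Hypothetical helper: draw `reps` samples of size `n` from an assumed
# population and return the standard deviation of the sample means.
sample_mean_spread <- function(n, reps = 2000) {
  means <- replicate(reps, mean(rnorm(n, mean = 50, sd = 10)))
  sd(means)
}

spread_small <- sample_mean_spread(n = 10)    # a small study
spread_large <- sample_mean_spread(n = 1000)  # a large study

# The estimates from small samples vary much more from study to study
c(small = spread_small, large = spread_large)
```

The spread of the sample mean shrinks roughly in proportion to one over the square root of the sample size, which is why estimates from the small study are far less stable.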

5.4.2 Dataset does not contain the exact variables you are looking for

In data analysis, it is common that you don’t have exactly what you need. You may need to know individuals’ IQ, but all you have is their GPA. You may need to understand food expenditure, but you have total expenditure. You may need to know parental education, but all you have is the number of books the family owns. Often the variable that we need in the analysis does not exist in the dataset and we can’t measure it. In these cases, our best bet is to find the closest available variables. Variables that may be different in nature but are highly correlated with (similar to) the variable of interest are often used in such cases. These variables are called proxy variables.

For instance, if we don’t have parental education in our dataset, we can use the number of books the family has in their home as a proxy. Although the two variables are different, they are highly correlated (very similar), since more educated parents tend to have more books at home. So in most cases where you can’t have the variable you need in your analysis, you can replace it with a proxy. Again, it must always be noted clearly in your analysis why you used a proxy variable and what variable was used as your proxy.

5.4.3 Variables in the dataset are not collected in the same year

Imagine we want to find the relationship between cab prices and the number of rides in New York City. We want to see how people react to price changes. We get a hold of data on cab prices in 2018, but we only have data on the number of rides from 2015. Can these two variables be used together in our analysis? Simply, no. If we want to answer this question, we can’t match these two sets of data. If we’re using the prices from 2018, we should find the number of rides from 2018 as well. Unfortunately, this is an issue you’ll run into a lot of the time. You’ll either have to find a way to get the data from the same year or go back to the drawing board and ask a different question. This issue can be ignored only in cases where we’re confident the variables do not change much from year to year.

5.4.4 Dataset is not representative of the population that you are interested in

You will hear the term representative sample, but what is it? Before defining a representative sample, let’s see what a population is in statistical terms. We have used the word population without really getting into its definition.

A sample is part of a population. A population, in general, is every member of the whole group of people we are interested in. Sometimes it is possible to collect data for the entire population, like in the U.S. Census, but in most cases, we can’t. So we collect data on only a subset of the population. For example, if we are studying the effect of sugar consumption on diabetes, we can’t collect data on the entire population of the United States. Instead, we collect data on a sample of the population. Now that we know what a sample and a population are, let’s go back to the definition of a representative sample.

A representative sample is a sample that accurately reflects the larger population. For instance, if the population is every adult in the United States, the sample includes an appropriate share of men and women, racial groups, educational groups, age groups, geographical groups, and income groups. If the population is supposed to be every adult in the U.S., then you can’t collect data on just people in California, or just young people, or only men. This is the idea of a representative sample. It has to model the broader population in all major respects.

We give you one example in politics. Most recent telephone polls in the United States have been bad at predicting election outcomes. Why? Because by calling people’s landlines you can’t guarantee a representative sample of the voting-age population, since younger people are less likely to have landlines. Therefore, most telephone polls are skewed toward older adults.

Random sampling is a key approach to obtaining a representative sample. Random sampling in data collection means that subjects are chosen by chance; you don’t get to decide who is in the sample and who isn’t. In random sampling, you select your subjects from the population at random, as if by a coin toss. The following are examples of lousy sampling:

- A research project on attitudes toward owning guns through a survey sent to subscribers of a gun-related magazine (gun magazine subscribers are not representative of the general population, and the sample is very biased).

- A research project on television program choices by looking at Facebook TV interests (not everybody has a Facebook account. Most online surveys or surveys on social media have to be taken with a grain of salt because not all members of all social groups have an online presence or use social media.)

- A research study on school meals and educational outcomes done in a neighborhood with residents mainly from one racial group (school meals can have a different effect on different income and ethnic groups).

The moral of the story is to always think about what your population is. Your population will change from one project to the next. If you are researching the effect of smoking on pregnant women, then your population is, well, pregnant women (and not men). Once you know your population, you will always want to collect data from a sample that is representative of it. Random sampling helps.
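The difference between a random and a biased sample can be sketched with dplyr’s `slice_sample()`. The population below is entirely hypothetical (10,000 simulated adults, roughly 30% of them under 35), and the “biased” sample mimics a survey that can only reach older adults.

```r
library(dplyr)

set.seed(42)

# Hypothetical population: 10,000 adults, about 30% of whom are under 35
population <- tibble(
  id = 1:10000,
  age_group = sample(c("under 35", "35 and over"), size = 10000,
                     replace = TRUE, prob = c(0.3, 0.7))
)

# Random sample: every individual has the same chance of being selected
random_sample <- population %>% slice_sample(n = 500)

# Biased sample: only people aged 35 and over can be reached
biased_sample <- population %>%
  filter(age_group == "35 and over") %>%
  slice_sample(n = 500)

# Share of under-35s in the population and in each sample
share_young <- function(d) mean(d$age_group == "under 35")
c(population = share_young(population),
  random     = share_young(random_sample),
  biased     = share_young(biased_sample))
```

The random sample should land close to the population share, while the biased sample contains no younger adults at all, so nothing learned from it can be generalized to them.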

And lastly, if you have no choice but to work with a dataset that is not collected randomly and is biased, be careful not to generalize your results to the entire population. If you collect data on pregnant women of age 18-24, you can’t generalize your results to older women. If you collect data on the political attitudes of residents of Washington, DC, you can’t say anything about the whole nation.

5.4.5 Some variables in the dataset are measured with error

Another curse of a dataset is measurement error. Simply put, measurement error refers to incorrect measurement of variables in your sample. Just like measuring things in the physical world comes with error (like measuring distance, exact temperature, BMI, etc.), measuring variables in the social context can come with error. When you ask people how many books they have read in the past year, not everyone remembers it correctly. Similarly, you may have measurement error when you ask people about their income. A good researcher recognizes measurement error in the data before any analysis and takes it into account during their analysis.

5.4.6 Variables are confounded

What if you were interested in determining what variables lead to increases in crime? To do so, you obtain data from a US city with lots of different variables and crime rates for a particular time period. You then wrangle the data, and at first you look at the relationship between popsicle sales and crime rates. You see that the more popsicles that are sold, the higher the crime rate.

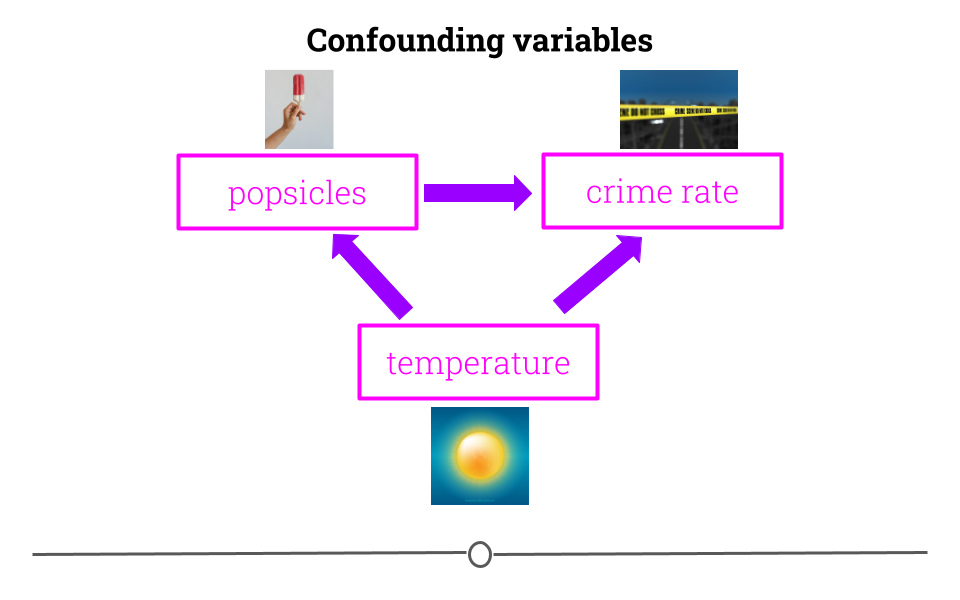

Your first thought may be that popsicles lead to crimes being committed. However, there is a confounder that’s not being considered!

In short, confounders are other variables that may affect our outcome but are also correlated with (have a relationship with) our main variable of interest. In the popsicle example, temperature is an important confounder. More crimes happen when it’s warm out and more popsicles are sold. It’s not the popsicles at all driving the relationship. Instead temperature is likely the culprit.
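The popsicle example can be mimicked with a small simulation. Every number below is an assumption chosen for illustration: temperature drives both simulated outcomes, and popsicle sales have no effect on crime at all.

```r
set.seed(2024)

n <- 365
# Assumed daily temperatures (degrees Celsius)
temperature <- runif(n, min = 0, max = 35)

# Both outcomes depend on temperature, not on each other
popsicles <- 20 + 3 * temperature + rnorm(n, sd = 10)
crimes    <- 50 + 2 * temperature + rnorm(n, sd = 10)

# The raw correlation looks strong
raw_cor <- cor(popsicles, crimes)

# Remove the part of each variable explained by temperature,
# then correlate what is left over (a partial correlation)
popsicle_resid <- resid(lm(popsicles ~ temperature))
crime_resid    <- resid(lm(crimes ~ temperature))
partial_cor <- cor(popsicle_resid, crime_resid)

c(raw = raw_cor, adjusted_for_temperature = partial_cor)
```

Once temperature is accounted for, the apparent relationship between popsicles and crime should largely disappear, which is the signature of a confounder.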

This is why getting an understanding of what data you have and how the variables relate to one another is so vital before moving forward with inference or prediction. We have already described exploratory analysis to some extent using visualization methods. Now we will recap a bit and discuss descriptive analysis.

5.5 Descriptive and Exploratory Analysis

Descriptive and exploratory analysis will first and foremost generate simple summaries about the samples and their measurements to describe the data you’re working with and how the variables might relate to one another. There are a number of common descriptive statistics that we’ll discuss in this lesson: measures of central tendency (e.g., mean, median, mode) and measures of variability (e.g., range, standard deviation, or variance).

This type of analysis is aimed at summarizing your dataset. Unlike analysis approaches we’ll discuss later, descriptive and exploratory analysis is not for generalizing the results of the analysis to a larger population, nor for trying to draw any conclusions. Description of data is separated from interpreting the data. Here, we’re just summarizing what we’re working with.

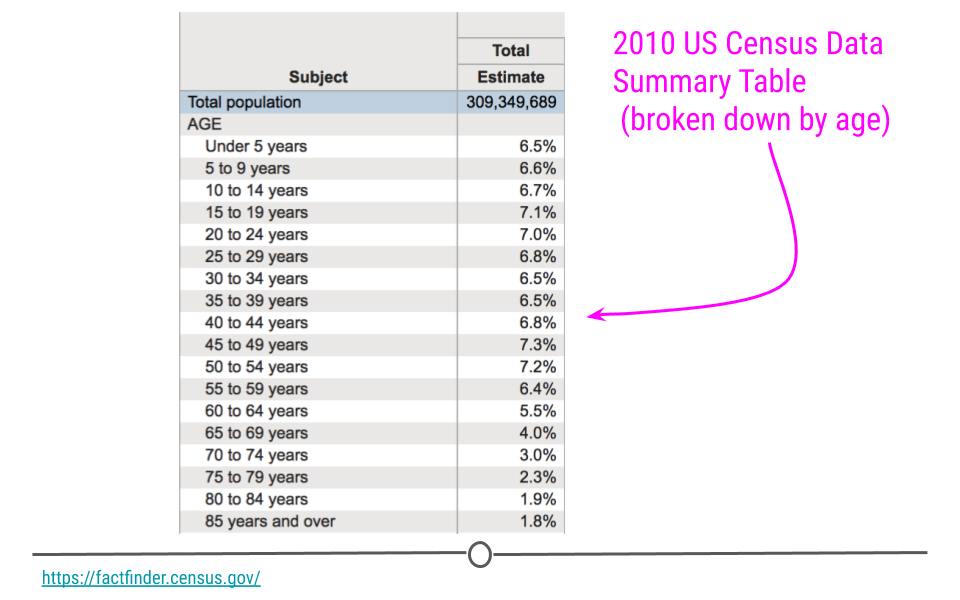

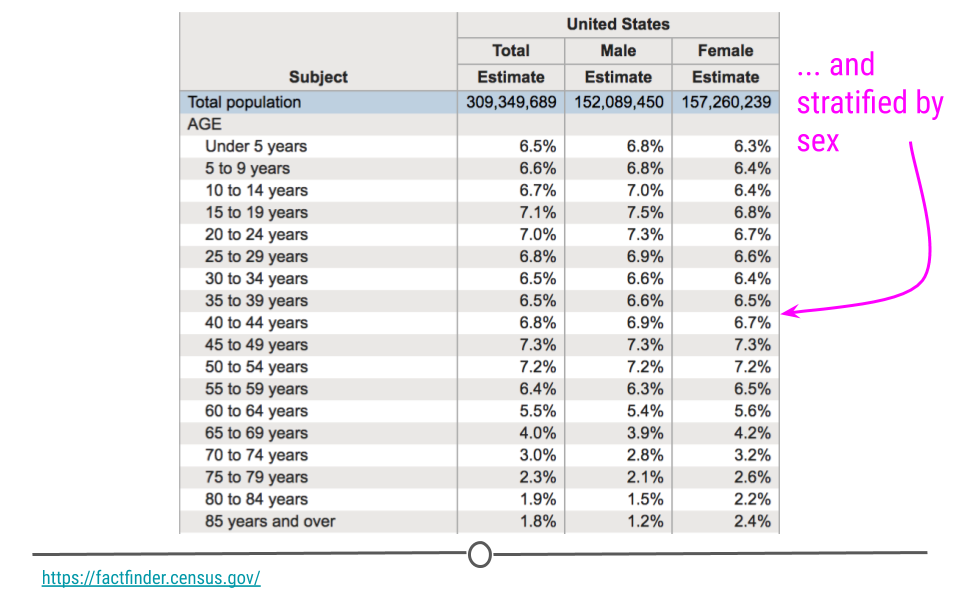

Some examples of purely descriptive analysis can be seen in censuses. In a census, the government collects a series of measurements on all of the country’s citizens. After collecting these data, they are summarized. From this descriptive analysis, we learn a lot about a country. For example, you can learn the age distribution of the population by looking at U.S. census data.

This can be further broken down (or stratified) by sex to describe the age distribution by sex. The goal of these analyses is to describe the population. No inferences are made about what this means nor are predictions made about how the data might trend in the future. The point of this (and every!) descriptive analysis is only to summarize the data collected.

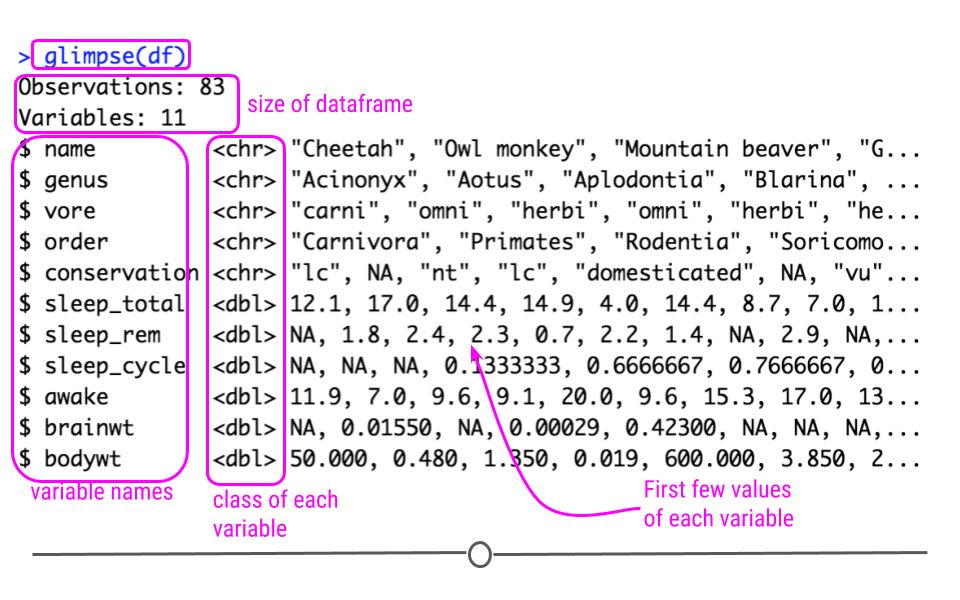

Recall that the glimpse() function of the dplyr package can help you to see what data you are working with.

## load packages

library(tidyverse)

df <- msleep # this data comes from ggplot2

## get a glimpse of your data

glimpse(df)

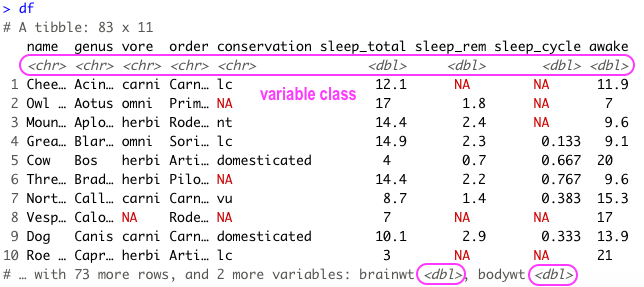

Also because the data is in tibble format, we can gain a lot of information by just viewing the data itself.

Here we also get information about the dimensions of our data object and the name and class of our variables.

5.5.1 Missing Values

In any analysis, missing data can cause a problem. Thus, it’s best to get an understanding of missingness in your data right from the start. Missingness refers to observations that are not included for a variable. In R, NA is the preferred way to specify missing data, so if you’re ever generating data, it’s best to include NA wherever you have a missing value.

However, individuals who are less familiar with R code missingness in a number of different ways in their data: -999, N/A, ., or a blank space. As such, it’s best to check to see how missingness is coded in your dataset. A reminder: sometimes different variables within a single dataset will code missingness differently. This shouldn’t happen, but it does, so always use caution when looking for missingness.
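When a dataset uses such codes, they can be converted to NA before any analysis. The sketch below uses a small made-up tibble (`raw`, with invented values) and dplyr’s `na_if()`; the sentinel codes it handles are the ones mentioned above.

```r
library(dplyr)

# Hypothetical raw data in which missingness is coded three different ways
raw <- tibble(
  weight = c(12.1, -999, 8.4, 10.2),
  diet   = c("herbi", "N/A", "", "carni")
)

# Counting the distinct values helps reveal suspicious codes
raw %>% count(diet)

# Recode every sentinel value to a proper NA
clean <- raw %>%
  mutate(
    weight = na_if(weight, -999),
    diet   = na_if(na_if(diet, "N/A"), "")
  )

clean
```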

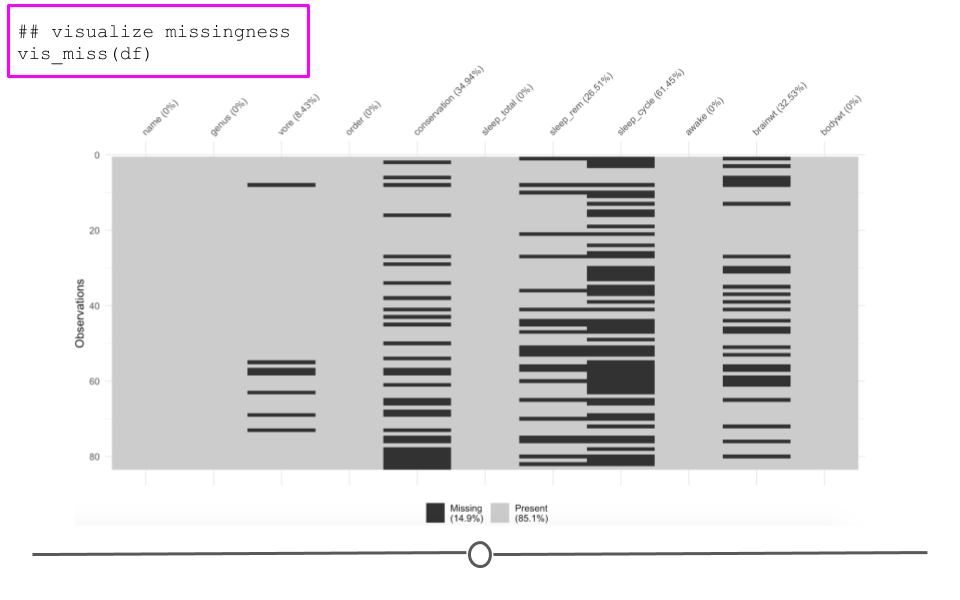

In this dataset, all missing values are coded as NA, and from the output of str(df) (or glimpse(df)), we see that at least a few variables have NA values. We’ll want to quantify this missingness though to see which variables have missing data and how many observations within each variable have missing data.

To do this, we can calculate missingness within each of our variables by combining a few functions. In the code here, is.na() returns a logical (TRUE/FALSE) for each value depending upon whether or not the value is missing (TRUE if it is missing). The sum() function then calculates the number of TRUE values there are within a variable. We then use map() to apply these steps to each variable. The second bit of code does the exact same thing but divides those numbers by the total number of observations (using nrow(df)). For each variable, this returns the proportion of missingness:

library(purrr)

## calculate how many NAs there are in each variable

df %>%

map(is.na) %>%

map(sum)

## calculate the proportion of missingness

## for each variable

df %>%

map(is.na) %>%

map(sum)%>%

map(~ . / nrow(df))%>%

bind_cols()

There are also some useful visualization methods for evaluating missingness. You could manually do this with ggplot2, but there are two packages called naniar and visdat written by Nicholas Tierney that are very helpful. The visdat package was used previously in one of our case studies.
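As a minimal sketch of such a visualization, the code below calls `vis_miss()` from visdat on the msleep data. It assumes visdat may not be installed, so the plotting call is guarded; the base R line at the end gives the same counts as a plain table.

```r
library(ggplot2)  # provides the msleep dataset

df <- msleep

# vis_miss() draws one column per variable and one row per observation,
# and labels each column with its percentage of missing values
if (requireNamespace("visdat", quietly = TRUE)) {
  print(visdat::vis_miss(df))
}

# The same information as counts, without a plot
missing_counts <- colSums(is.na(df))
missing_counts
```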

Here, we see the variables listed along the top with percentages summarizing how many observations are missing data for that particular variable. Each row in the visualization is a different observation. Missing data are black. Non-missing values are in grey. Focusing again on brainwt, we can see the 27 missing values visually. We can also see that sleep_cycle has the most missingness, while many variables have no missing data.

5.5.2 Shape

Determining the shape of your variable is essential before any further analysis is done. Statistical methods used for inference often require your data to be distributed in a certain manner before they can be applied to the data. Thus, being able to describe the shape of your variables is necessary during your descriptive analysis.

When talking about the shape of one’s data, we’re discussing how the values (observations) within the variable are distributed. Often, we first determine how spread out the numbers are from one another (do all the observations fall between 1 and 10? 1 and 1000? -1000 and 10?). This is known as the range of the values. The range is described by the minimum and maximum values taken by observations in the variable.

After establishing the range, we determine the shape or distribution of the data. More explicitly, the distribution of the data explains how the data are spread out over this range. Are most of the values all in the center of this range? Or, are they spread out evenly across the range? There are a number of distributions used commonly in data analysis to describe the values within a variable. We’ll cover just a few, but keep in mind this is certainly not an exhaustive list.

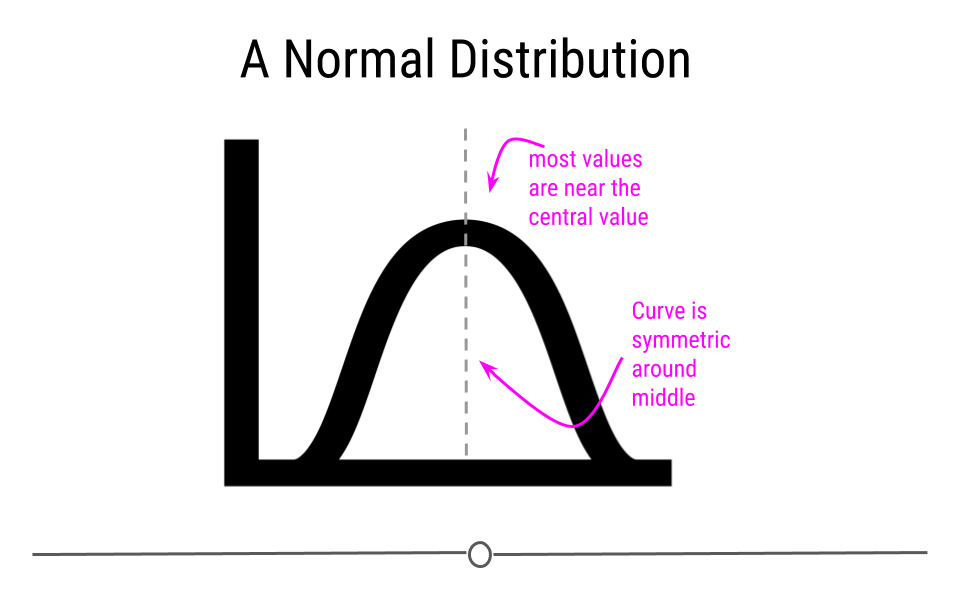

5.5.2.1 Normal Distribution

The Normal distribution (also referred to as the Gaussian distribution) is a very common distribution and is often described as a bell-shaped curve. In this distribution, the values are symmetric around the central value with a high density of the values falling right around the central value. The left hand of the curve mirrors the right hand of the curve.

A variable can be described as normally distributed if:

- There is a strong tendency for data to take a central value - many of the observations are centered around the middle of the range.

- Deviations away from the central value are equally likely in both directions - the frequency of these deviations away from the central value occurs at the same rate on either side of the central value.

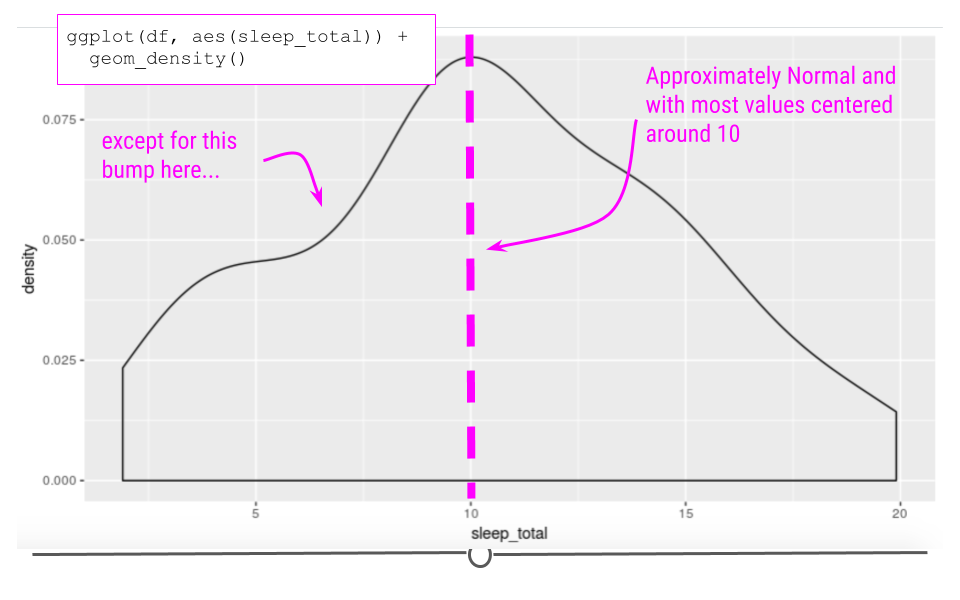

Taking a look at the sleep_total variable within our example dataset, we see that the data are somewhat normal; however, they aren’t entirely symmetric.

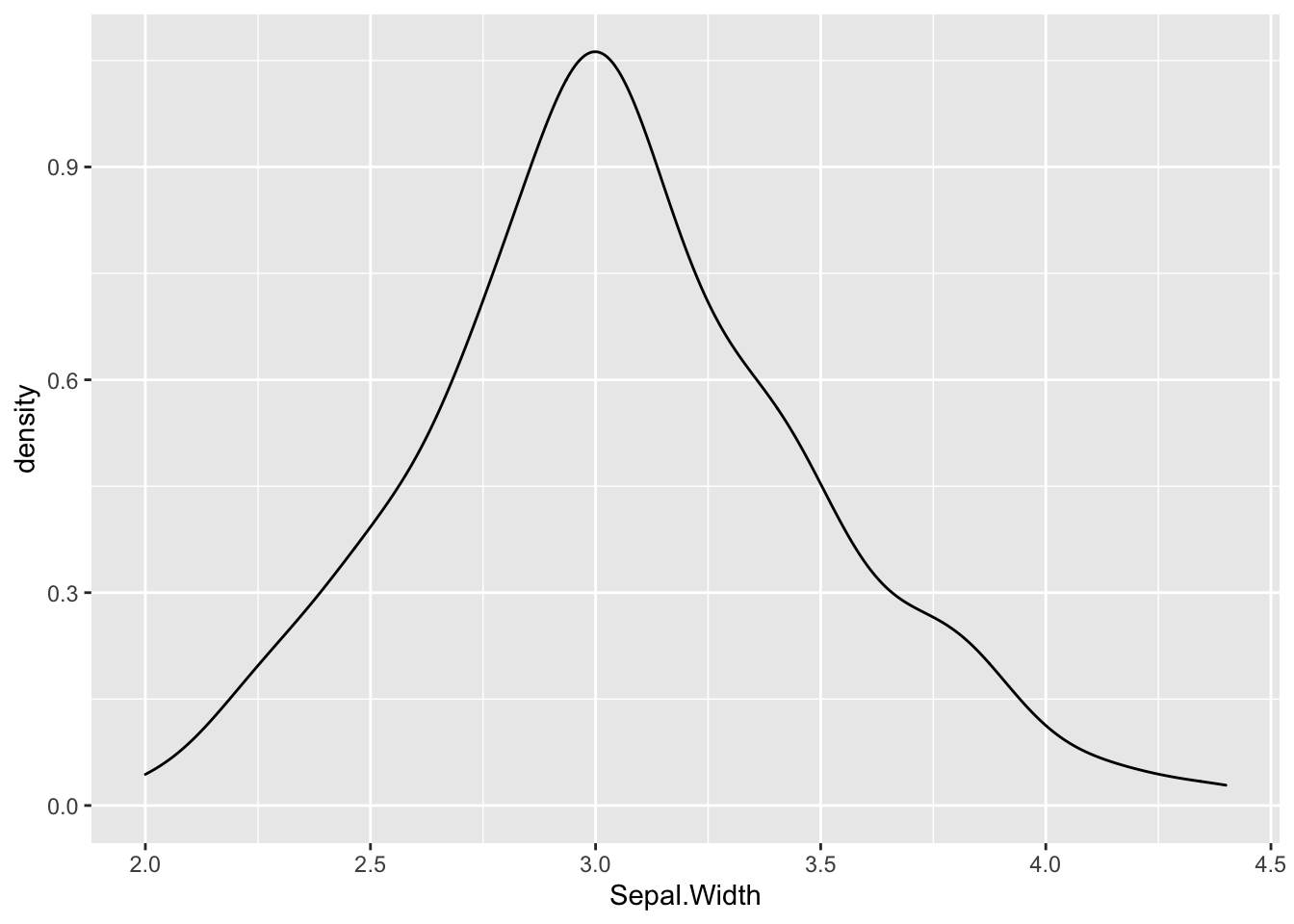

A variable that is distributed more normally can be seen in the iris dataset, when looking at the Sepal.Width variable.
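Density plots like the ones discussed here can be sketched with ggplot2. The plot objects `p_sleep` and `p_sepal` are names introduced for this example, and the styling is left at ggplot2’s defaults rather than matched to the figures.

```r
library(ggplot2)

# Range of total sleep time: the minimum and maximum observed values
range(msleep$sleep_total)

# Density of total sleep time in msleep (roughly bell-shaped, not symmetric)
p_sleep <- ggplot(msleep, aes(x = sleep_total)) +
  geom_density()

# Density of sepal width in iris (closer to a Normal shape)
p_sepal <- ggplot(iris, aes(x = Sepal.Width)) +
  geom_density()

p_sleep
p_sepal
```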

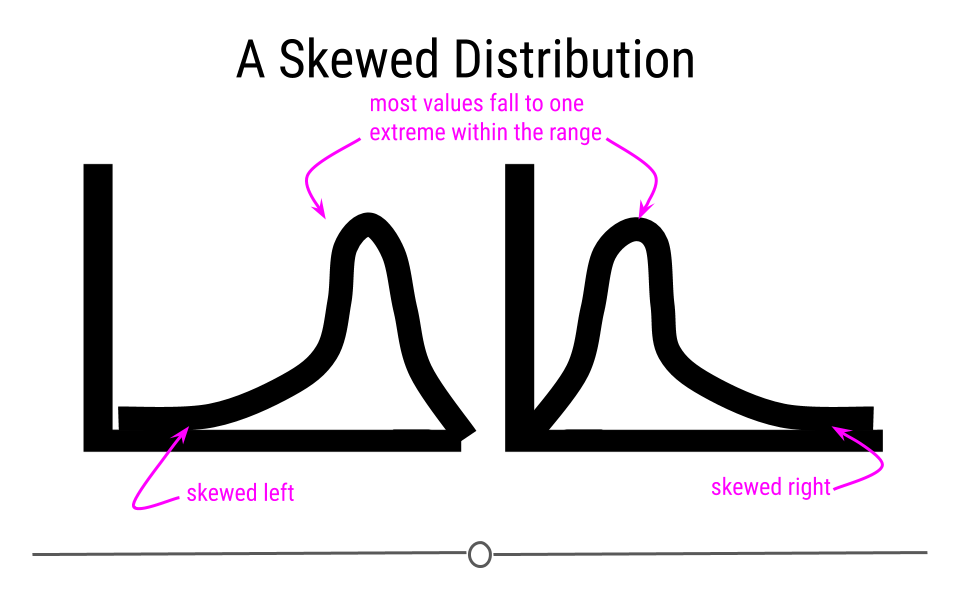

Skewed Distribution

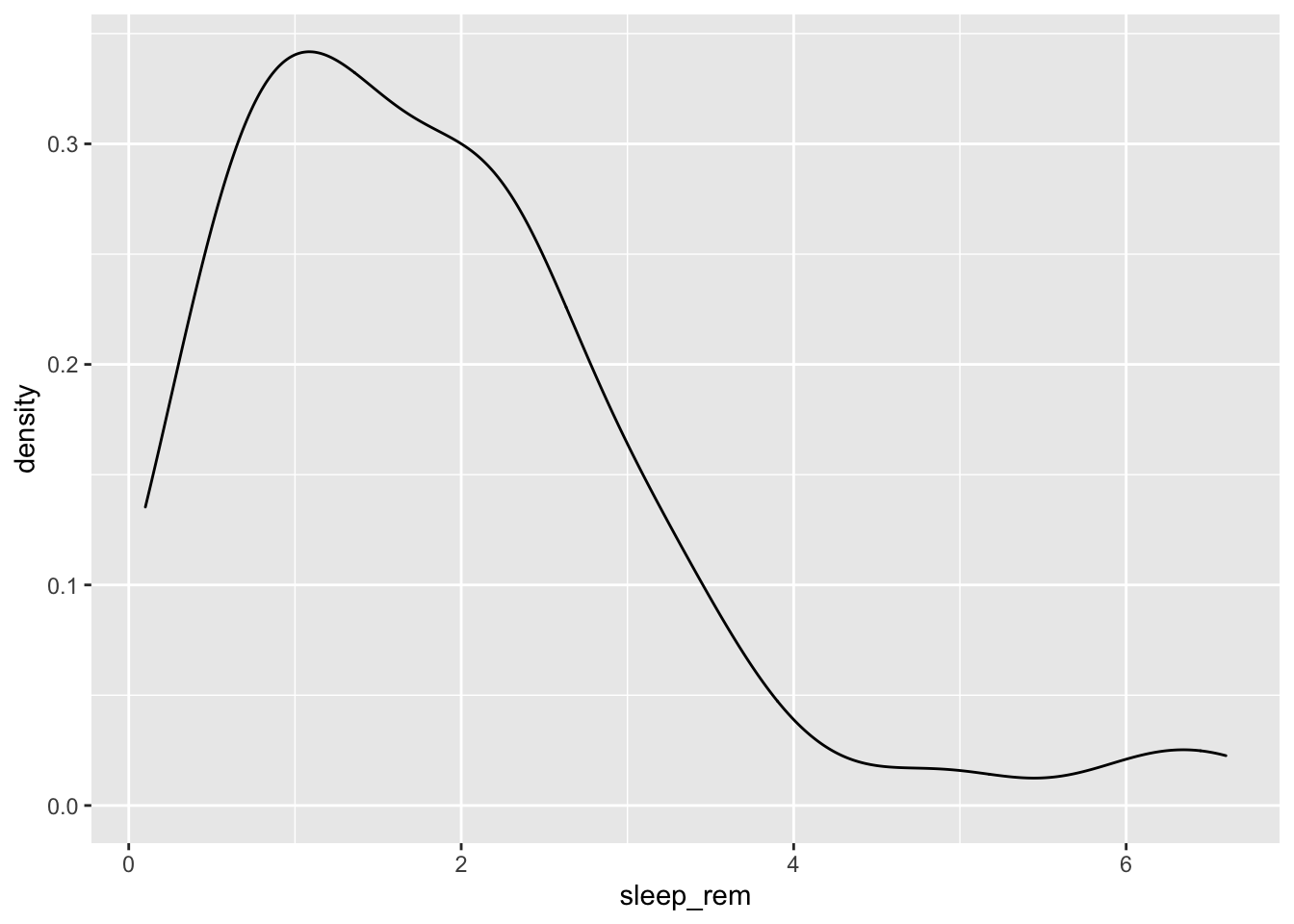

Alternatively, sometimes data follow a skewed distribution. In a skewed distribution, most of the values fall to one end of the range, leaving a tail off to the other side. When the tail is off to the left, the distribution is said to be skewed left. When off to the right, the distribution is said to be skewed right.

To see an example from the msleep dataset, we’ll look at the variable sleep_rem. Here we see that the data are skewed right, given the shift in values away from the right, leading to a long right tail. Here, most of the values are at the lower end of the range.

## Warning: Removed 22 rows containing non-finite outside the scale range

## (`stat_density()`).

Uniform Distribution

The last distribution we’ll discuss here is the uniform distribution, in which values for a variable are equally likely to be found along any portion of the range. The curve for this distribution looks more like a rectangle, since the likelihood of an observation taking a value is constant across the range of possible values.

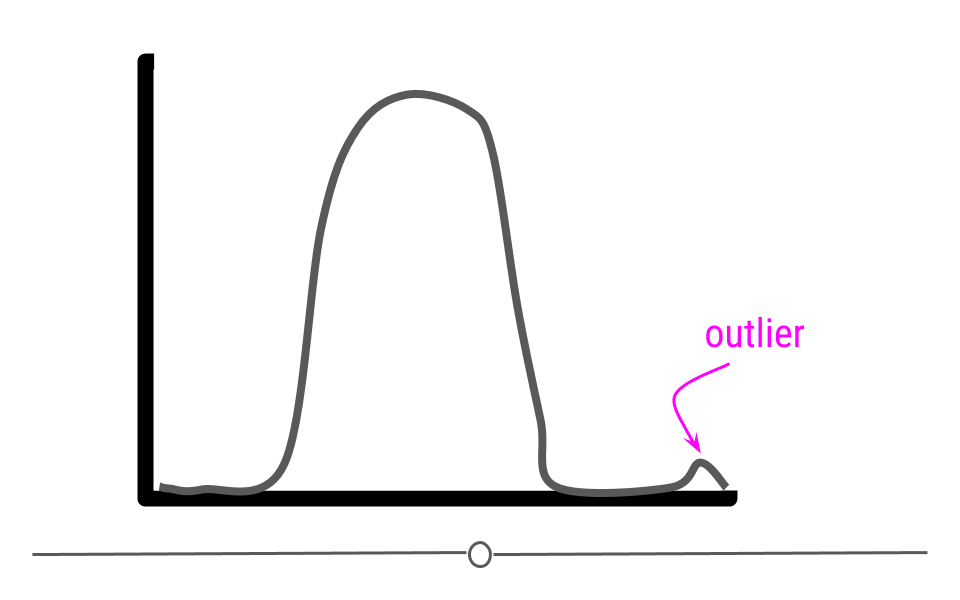

Outliers

Now that we’ve discussed distributions, it’s important to discuss outliers in more depth. An outlier is an observation that falls far away from the rest of the observations in the distribution. If you were to look at a density curve, you could visually identify outliers as observations that fall far from the rest of the observations.

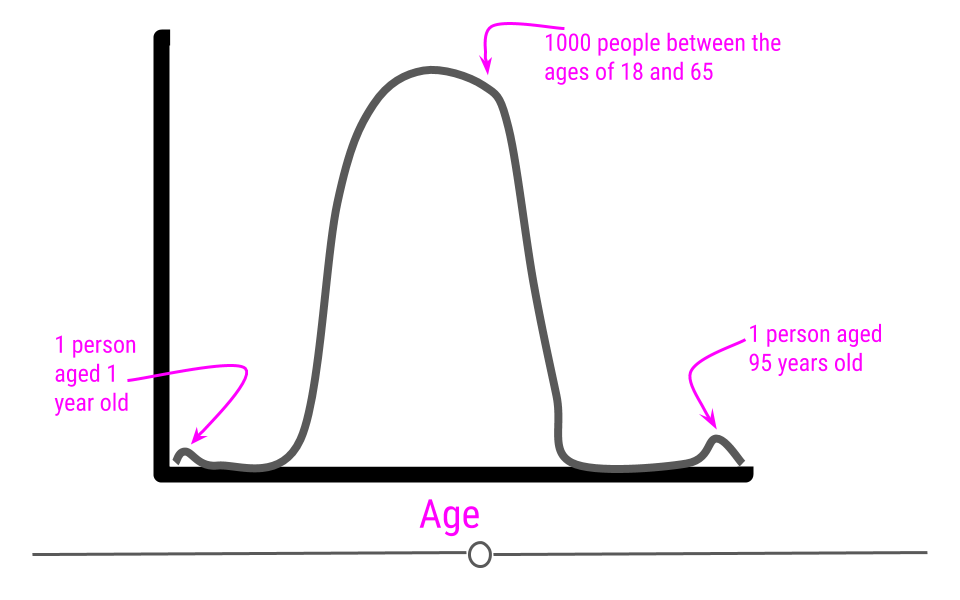

For example, imagine you had a sample where all of the individuals are between the ages of 18 and 65, but then you have one individual who is 1 year old and another who is 95 years old.

If we were to plot the age data on a density plot, it would look something like this:

It can sometimes be difficult to decide whether or not a sample should be removed from the dataset. In the simplest terms, no observation should be removed from your dataset unless there is a valid reason to do so. For a more extreme example, what if that dataset we just discussed (with all the samples having ages between 18 and 65) had one sample with the age 600? Well, if these are human data, we clearly know that is a data entry error. Maybe it was supposed to be 60 years old, but we may not know for sure. If we can follow up with that individual and double-check, it’s best to do that, correct the error, make a note of it, and continue with the analysis. However, that’s often not possible. In the cases of obvious data entry errors, it’s likely that you’ll have to remove that observation from the dataset. It’s valid to do so in this case since you know that an error occurred and that the observation was not accurate.

Outliers do not only occur due to data entry errors. Maybe you were taking weights of your observations over the course of a few weeks. On one of these days, your scale was improperly calibrated, leading to incorrect measurements. In such a case, you would have to remove these incorrect observations before analysis.

Outliers can occur for a variety of reasons. Outliers can occur due to human error during data entry, technical issues with tools used for measurement, weather changes that affect measurement accuracy, or poor sampling procedures. It’s always important to look at the distribution of your observations for a variable to see if any observations are falling far away from the rest of the observations. It’s then important to think about why this occurred and determine whether or not you have a valid reason to remove the observations from the data.

An important note is that observations should never be removed just to make your results look better! Wanting better results is not a valid reason for removing observations from your dataset.

5.5.3 Identifying Outliers

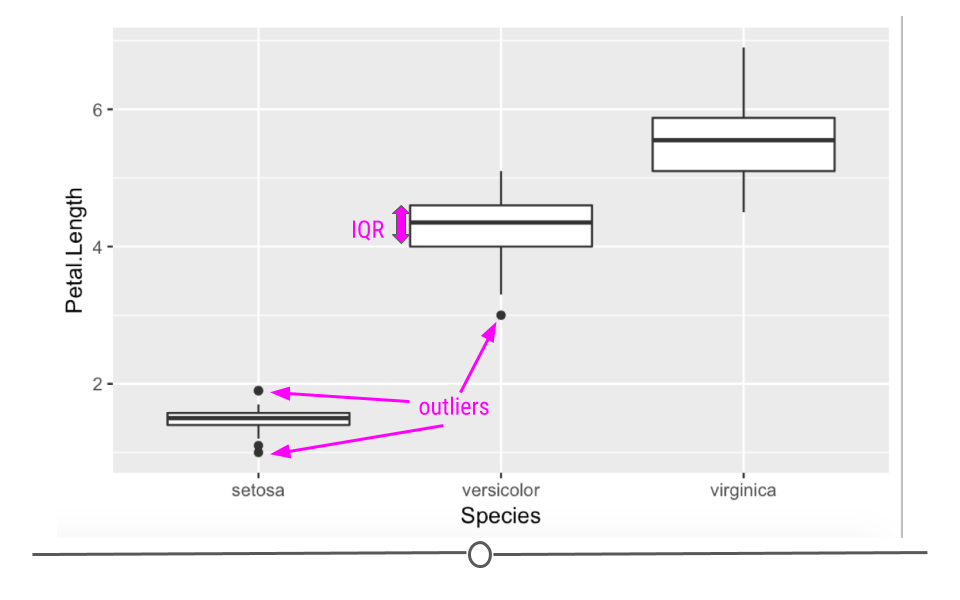

To identify outliers visually, density plots and boxplots can be very helpful.

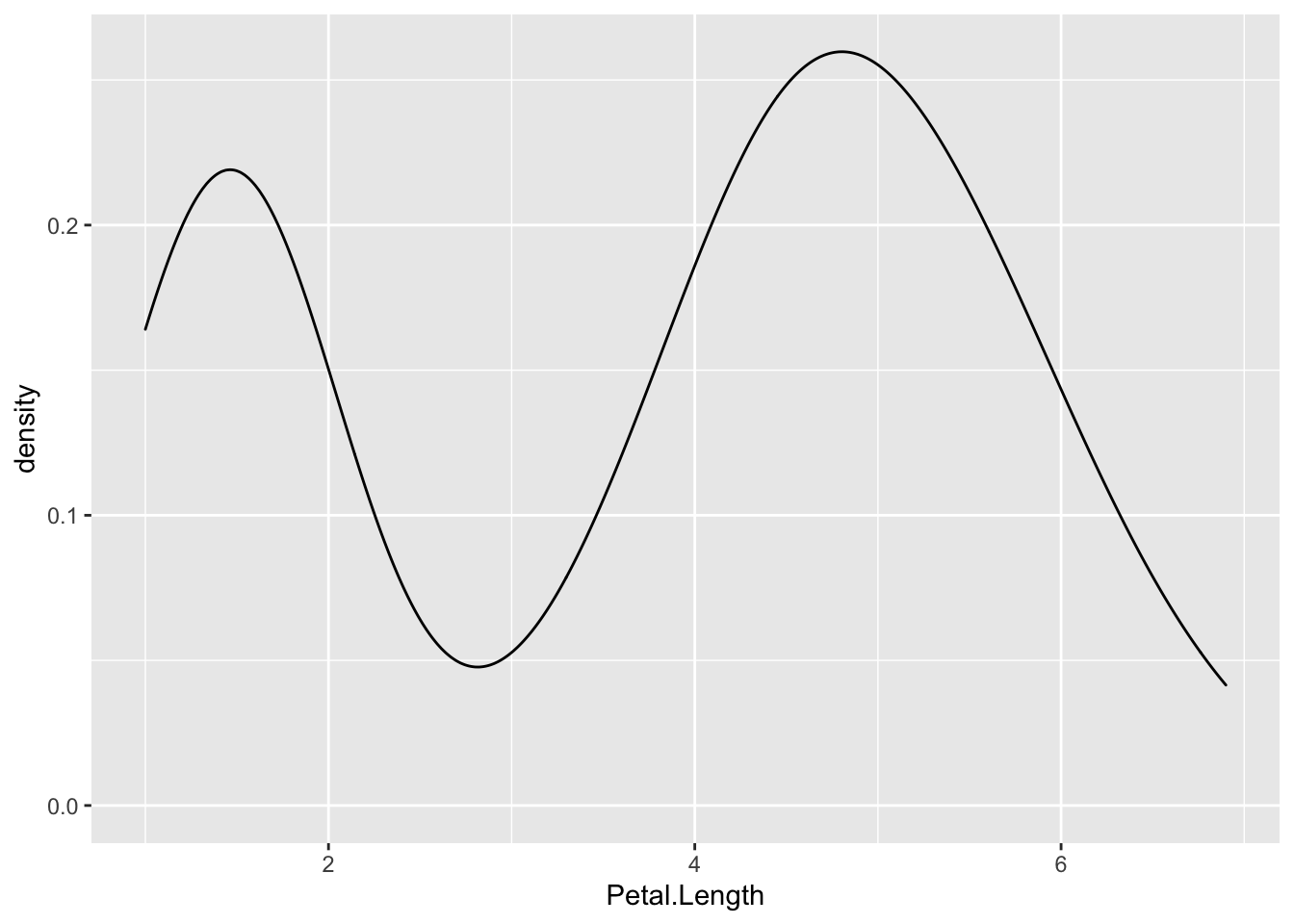

For example, if we returned to the iris dataset and looked at the distribution of Petal.Length, we would see a bimodal distribution (yet another distribution!). Bimodal distributions can be identified by density plots that have two distinct humps. In these distributions, there are two different modes – this is where the term “bimodal” comes from. In this plot, the curve suggests there are a number of flowers with petal length less than 2 and many with petal length around 5.

Since the two humps in the plot are about the same height, this shows that it’s not just one or two flowers with much smaller petal lengths, but rather that there are many. Thus, these observations aren’t likely outliers.

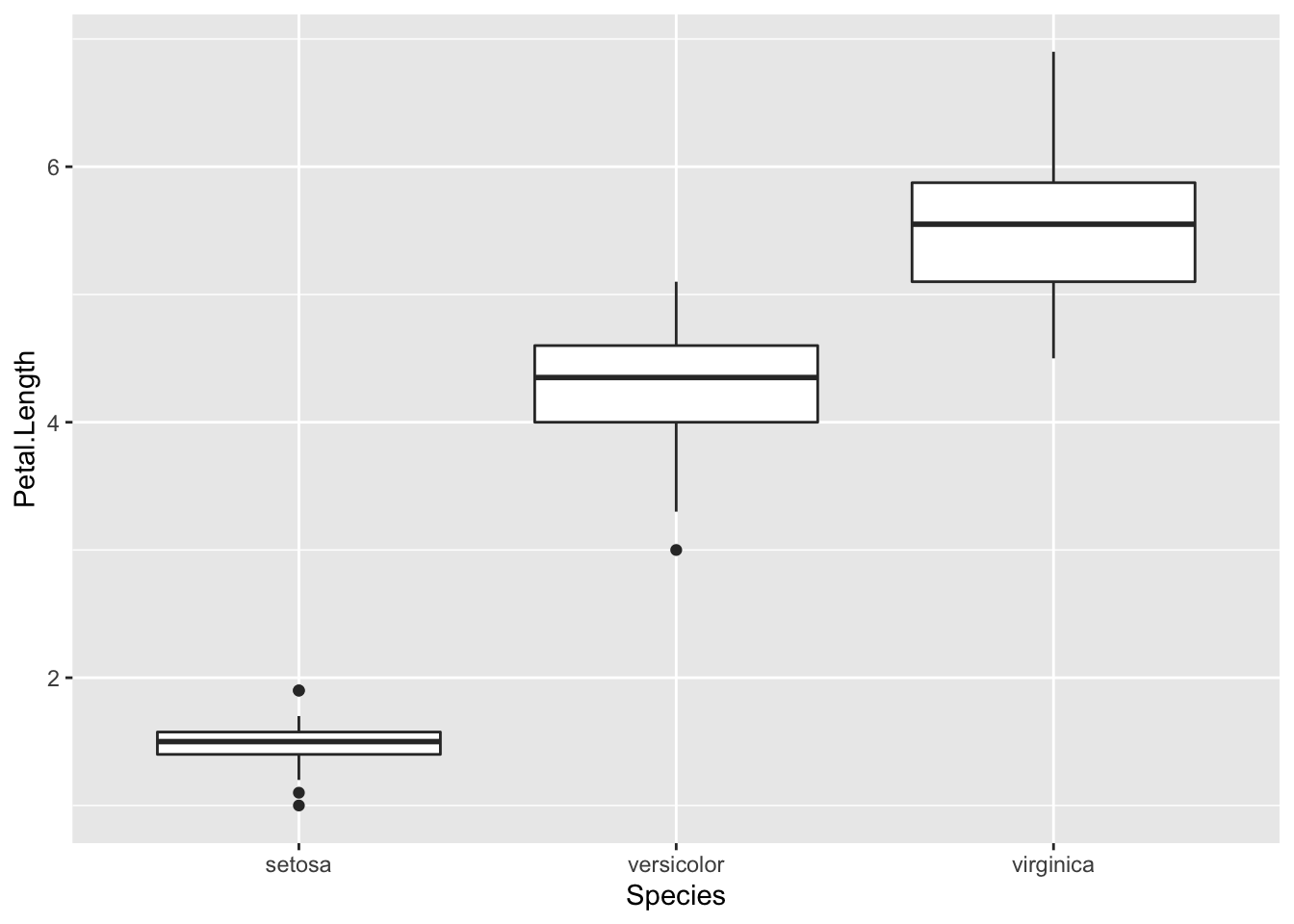

To investigate this further, we’ll look at petal length broken down by flower species:

In this boxplot, we see in fact that setosa have a shorter petal length while virginica have the longest. Had we simply removed all the shorter petal length flowers from our dataset, we would have lost information about an entire species!
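Both views of petal length can be sketched with ggplot2 as follows. The object names `p_density` and `p_box` are introduced for this example, and the default styling is an assumption rather than a reproduction of the figures.

```r
library(ggplot2)

# Density of petal length across all species: two distinct humps
p_density <- ggplot(iris, aes(x = Petal.Length)) +
  geom_density()

# The same variable broken down by species
p_box <- ggplot(iris, aes(x = Species, y = Petal.Length)) +
  geom_boxplot()

p_density
p_box
```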

Boxplots are also helpful because they plot “outlier” samples as individual points beyond the whiskers. By default, boxplots define “outliers” as observations that fall more than 1.5 x IQR (interquartile range) below the first quartile or above the third quartile. The IQR is the distance between the first and third quartiles. This is a mathematical way to determine if a sample may be an outlier. It is visually helpful, but then it’s up to the analyst to determine if an observation should be removed. While the boxplot identifies outliers in the setosa and versicolor species, these values are all within a reasonable distance of the rest of the values, and unless I could determine why this occurred, I would not remove these observations from the dataset.
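The 1.5 x IQR rule is easy to apply by hand. The helper `flag_outliers()` below is a hypothetical function written for this example; it uses R’s default quantile definition, so its fences may differ slightly from boxplot implementations that compute quartiles differently.

```r
# Hypothetical helper: TRUE for values outside the 1.5 x IQR fences
flag_outliers <- function(x) {
  q <- quantile(x, probs = c(0.25, 0.75), na.rm = TRUE)
  iqr <- q[[2]] - q[[1]]
  lower <- q[[1]] - 1.5 * iqr  # lower fence
  upper <- q[[2]] + 1.5 * iqr  # upper fence
  x < lower | x > upper
}

# Petal lengths of the setosa flowers that the rule would flag
setosa_petals <- iris$Petal.Length[iris$Species == "setosa"]
setosa_petals[flag_outliers(setosa_petals)]
```

Flagged values are only candidates for a closer look; as described above, the decision to remove an observation still needs a substantive reason.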

5.5.4 Evaluating Variables

Central Tendency

Once you know how large your dataset is, what variables you have information on, how much missing data you’ve got for each variable, and the shape of your data, you’re ready to start understanding the information within the values of each variable.

Some of the simplest and most informative measures you can calculate on a numeric variable are those of central tendency. The two most commonly used measures of central tendency are: mean and median. These measures provide information about the typical or central value in the variable.

mean

The mean (often referred to as the average) is equal to the sum of all the observations in the variable divided by the total number of observations in the variable. The mean takes all the values in your variable into account, so it summarizes the typical value but can be pulled strongly toward extreme values.

median

The median is the middle observation for a variable after the observations in that variable have been arranged in order of magnitude (from smallest to largest). When the number of observations is even, the median is the average of the two middle values.
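A short sketch shows how the two measures behave. The `ages` vector is made up for illustration, with one extreme value echoing the data entry error discussed earlier.

```r
# Hypothetical ages with one extreme value
ages <- c(21, 25, 30, 34, 40, 600)

mean(ages)    # 125: pulled far upward by the single extreme value
median(ages)  # 32: the average of the two middle values, 30 and 34

# With real data, missing values must be dropped explicitly
library(ggplot2)  # provides the msleep dataset
mean(msleep$sleep_rem)                  # NA, because sleep_rem has missing values
mean(msleep$sleep_rem, na.rm = TRUE)
median(msleep$sleep_rem, na.rm = TRUE)
```

Because the median depends only on the middle of the ordered values, it is much less sensitive to outliers than the mean.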

Variability

In addition to measures of central tendency, measures of variability are key in describing the values within a variable. Two common and helpful measures of variability are: standard deviation and variance. Both of these are measures of how spread out the values in a variable are.

Variance

The variance tells you how spread out the values are. If all the values within your variable are exactly the same, that variable’s variance will be zero. The larger your variance, the more spread out your values are. Take the following vector and calculate its variance in R using the var() function:
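The vectors themselves are not reproduced in this text, so the sketch below uses assumed values (`a` and `b` are hypothetical names) chosen to be consistent with the printed output:

```r
# Assumed example vectors: four identical values, then the same
# values plus one that is far larger
a <- c(29, 29, 29, 29)
b <- c(29, 29, 29, 29, 723678)

var(a)  # no spread at all, so the variance is zero
var(b)  # one extreme value inflates the variance enormously
```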

## [1] 0

## [1] 104733575040

The only difference between the two vectors is that the second one has one value that is much larger than “29”. The variance for this vector is thus much higher.

Standard Deviation

By definition, the standard deviation is the square root of the variance. Thus, if we were to calculate the standard deviation in R using the sd() function, we’d see that the value returned by sd() is equal to the square root of the variance:
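Using an assumed vector `b` (hypothetical values chosen to be consistent with the printed output), the comparison can be sketched as:

```r
# Assumed example vector with one very large value
b <- c(29, 29, 29, 29, 723678)

sd(b)         # standard deviation
sqrt(var(b))  # square root of the variance

# The two agree up to floating-point tolerance
all.equal(sd(b), sqrt(var(b)))
```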

## [1] 323625.7

## [1] 323625.7

For both measures of variability, the minimum value is 0. The larger the number, the more spread out the values in the variable are.

5.5.4.1 Summarizing Your Data

Often, you’ll want to include tables in your reports summarizing your dataset. These will include the number of observations in your dataset and maybe the mean/median and standard deviation of a few variables. These could be organized into a table using what you learned in the data visualization course about generating tables.

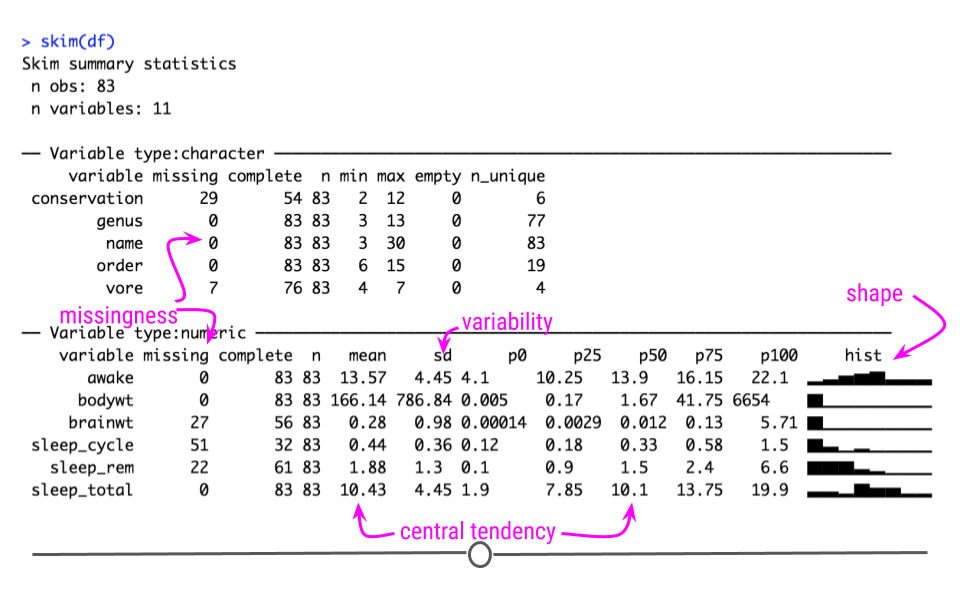

5.5.4.1.1 skimr

Alternatively, there is a helpful package that will summarize all the variables within your dataset. The skimr package provides a tidy output with information about your dataset.

To use skimr, you’ll have to install and load the package before using the helpful function skim() to get a snapshot of your dataset.
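A minimal sketch of that workflow follows. It assumes skimr may not be installed yet, so the install line is left as a comment and the call to `skim()` is guarded.

```r
library(ggplot2)  # provides the msleep dataset

df <- msleep

# install.packages("skimr")  # run once if the package is not installed

# skim() prints one summary block per variable type
if (requireNamespace("skimr", quietly = TRUE)) {
  print(skimr::skim(df))
}
```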

Using this function we can quickly get an idea about missingness, variability, central tendency, shape, and outliers all at once.

The output from skim() separately summarizes categorical and continuous variables. For continuous variables you get information about the mean (mean column) and median (p50 column). You know what the range of the variable is (p0 is the minimum value, p100 is the maximum value for continuous variables). You also get a measure of variability with the standard deviation (sd). It even quantifies the number of missing values (missing) and shows you the distribution or shape of each variable (hist)! Potential outliers can also be identified from the hist column and the p100 and p0 columns. This function can be incredibly useful to get a quick snapshot of what’s going on with your dataset.

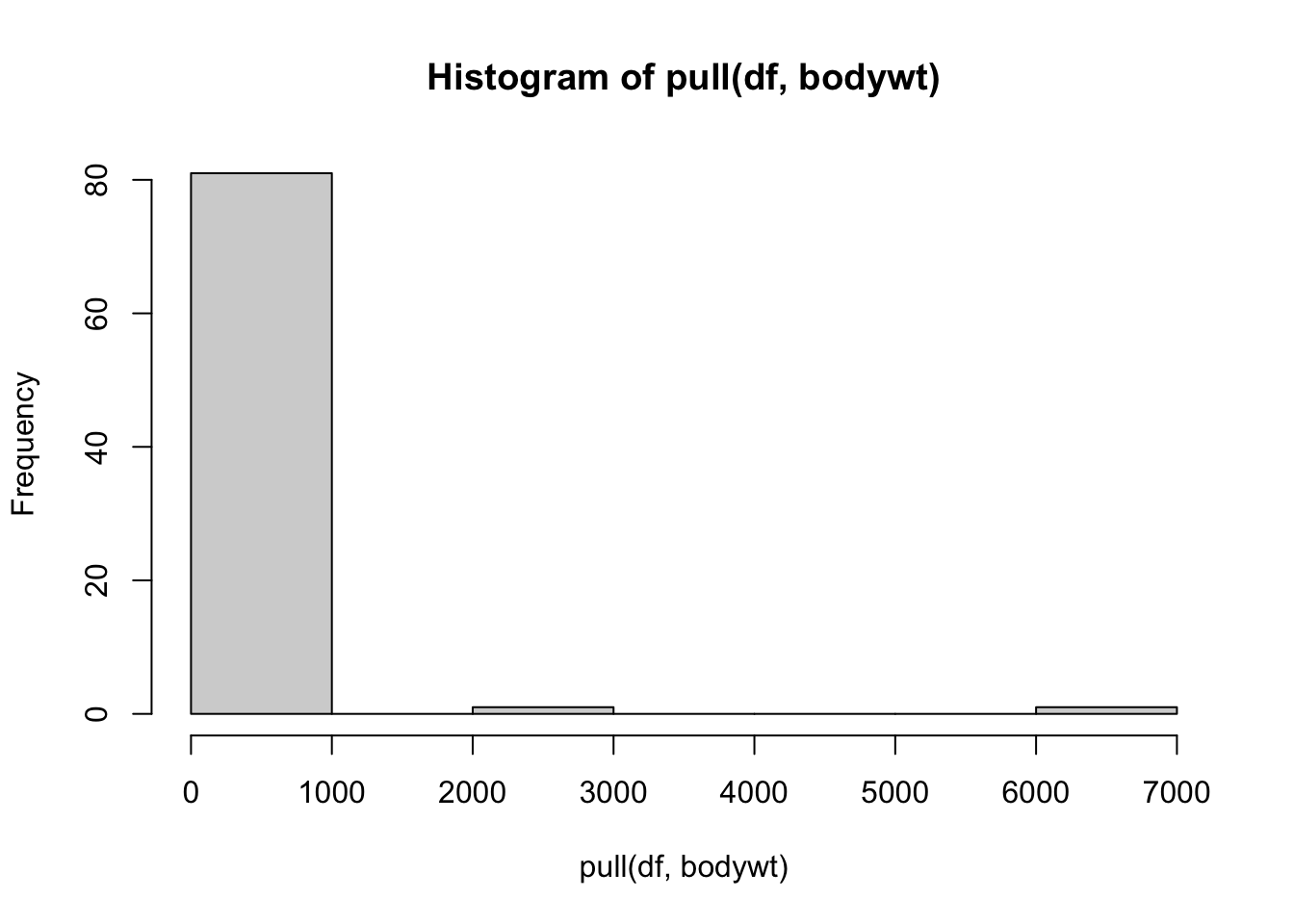

If we take a closer look at the bodywt and brainwt variables, we can see that there may be outliers. The maximum value of the bodywt variable looks very different from the mean value.
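One way to find the observation behind that maximum is to filter on it with dplyr. This is a sketch of one possible approach; the object name `heaviest` is introduced here for illustration.

```r
library(dplyr)
library(ggplot2)  # provides the msleep dataset

df <- msleep

# Keep only the row whose body weight equals the maximum
heaviest <- df %>%
  filter(bodywt == max(bodywt))

heaviest
```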

## # A tibble: 1 × 11

## name genus vore order conservation sleep_total sleep_rem sleep_cycle awake

## <chr> <chr> <chr> <chr> <chr> <dbl> <dbl> <dbl> <dbl>

## 1 Africa… Loxo… herbi Prob… vu 3.3 NA NA 20.7

## # ℹ 2 more variables: brainwt <dbl>, bodywt <dbl>

Ah! Looks like it is an elephant; that makes sense.

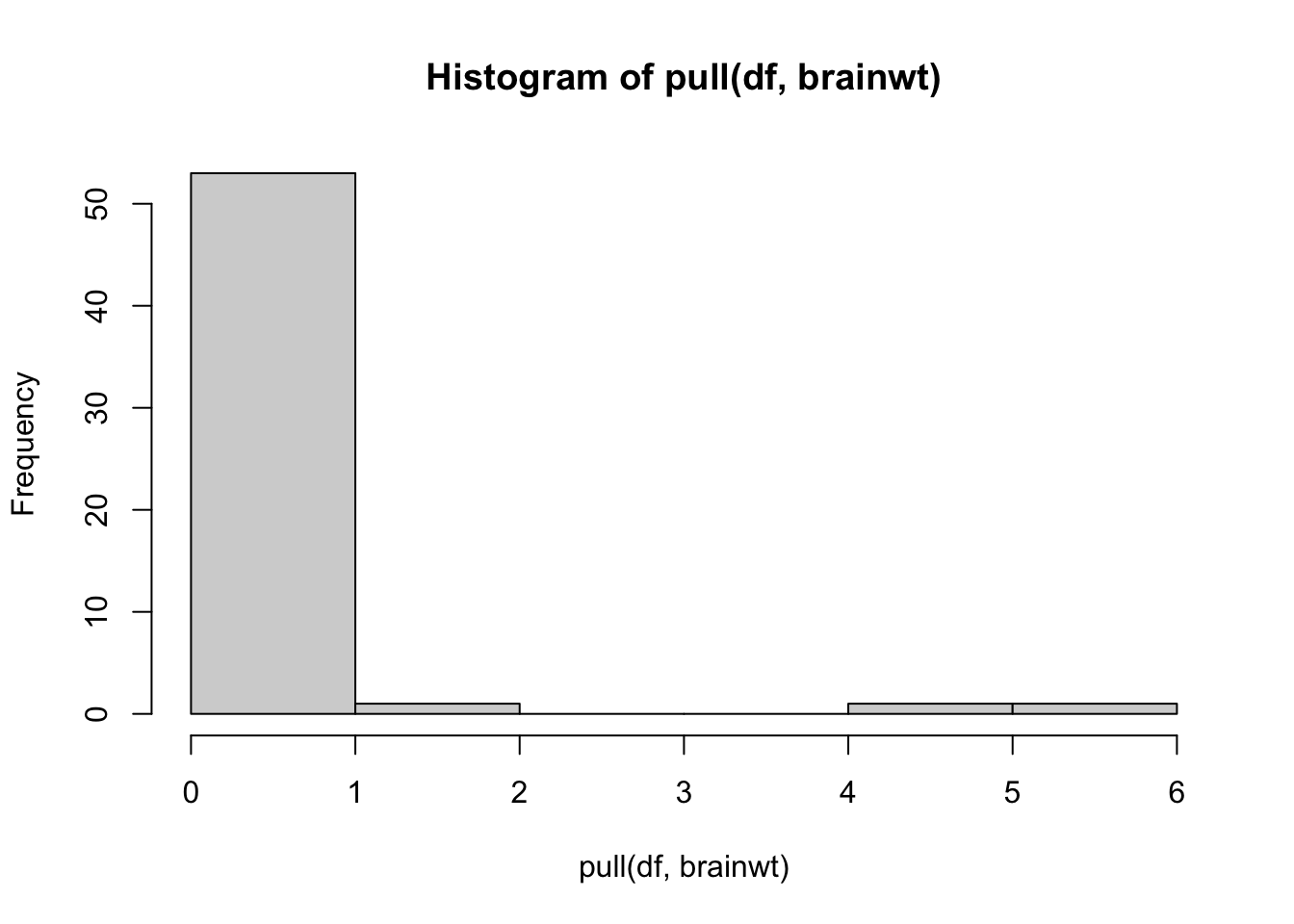

Taking a deeper look at the histogram we can see that there are two values that are especially different.
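Those two observations can be pulled out with a simple filter. The cutoff of 2000 for bodywt is an assumption made for this sketch; any value that separates the two heaviest animals from the rest would do.

```r
library(dplyr)
library(ggplot2)  # provides the msleep dataset

df <- msleep

# Assumed cutoff: keep only animals with a body weight above 2000
heavy <- df %>%
  filter(bodywt > 2000)

heavy
```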

## # A tibble: 2 × 11

## name genus vore order conservation sleep_total sleep_rem sleep_cycle awake

## <chr> <chr> <chr> <chr> <chr> <dbl> <dbl> <dbl> <dbl>

## 1 Asian … Elep… herbi Prob… en 3.9 NA NA 20.1

## 2 Africa… Loxo… herbi Prob… vu 3.3 NA NA 20.7

## # ℹ 2 more variables: brainwt <dbl>, bodywt <dbl>

Looks like both data points are for elephants.

Therefore, we might consider performing an analysis both with and without the elephant data, to see if it influences the overall result.
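The code that produced the two tables above is not shown, so the following is only a sketch of one way to find such rows. It assumes that `df` is ggplot2's msleep dataset and uses a cutoff of 2000 for bodywt, which is an illustrative choice.

```r
# Assumption: `df` is ggplot2's msleep dataset.
library(ggplot2)  # msleep data
library(dplyr)    # filter(), slice_max(), %>%

df <- msleep

# The single heaviest animal (the maximum of bodywt)
heaviest <- df %>% slice_max(bodywt, n = 1)

# Every animal above a hand-picked cutoff
very_heavy <- df %>% filter(bodywt > 2000)

heaviest$name
very_heavy$name
```

Keeping the filtered rows in their own objects makes it easy to rerun an analysis with and without them.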

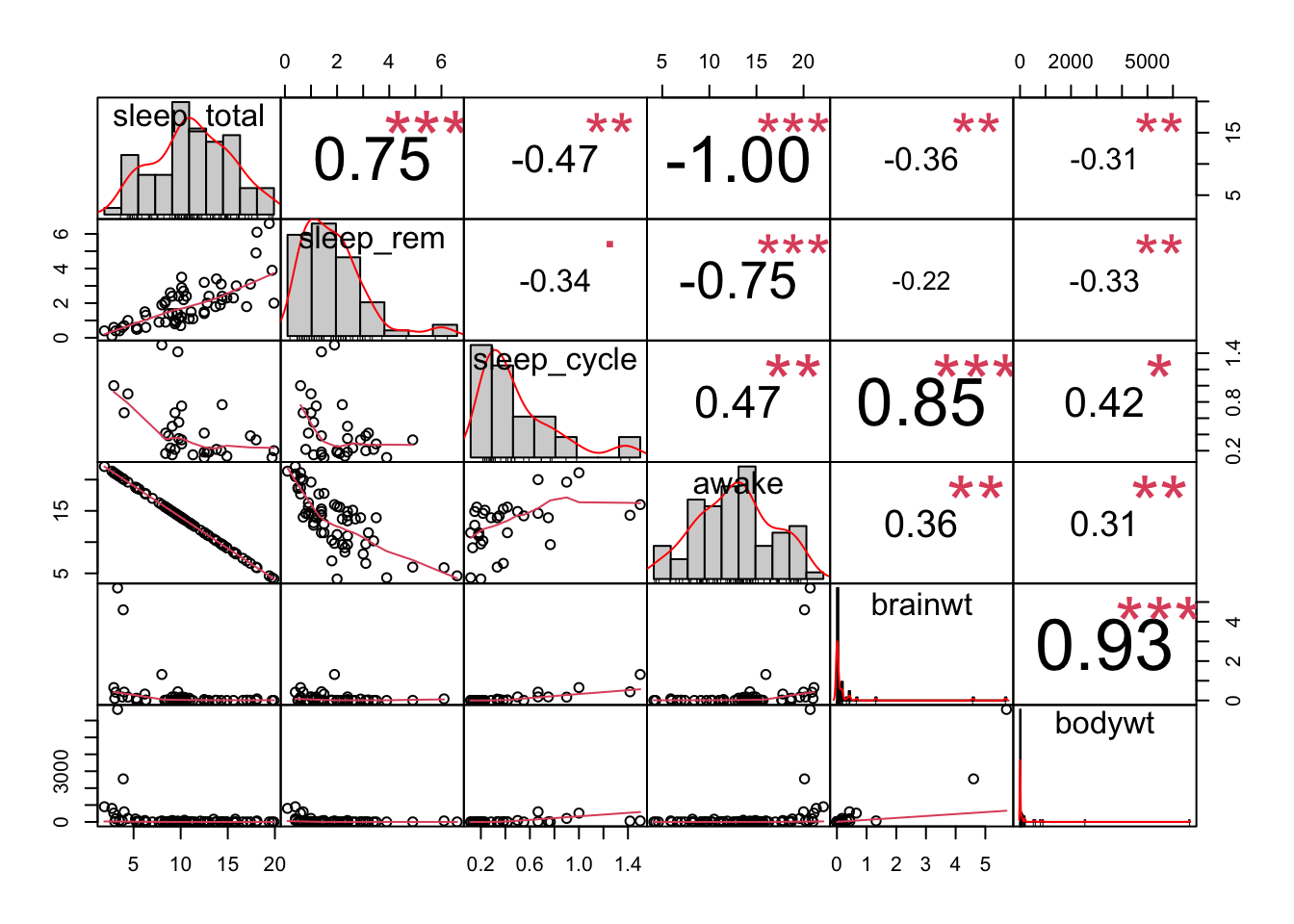

5.5.5 Evaluating Relationships

Another important aspect of exploratory analysis is looking at relationships between variables.

Again visualizations can be very helpful.

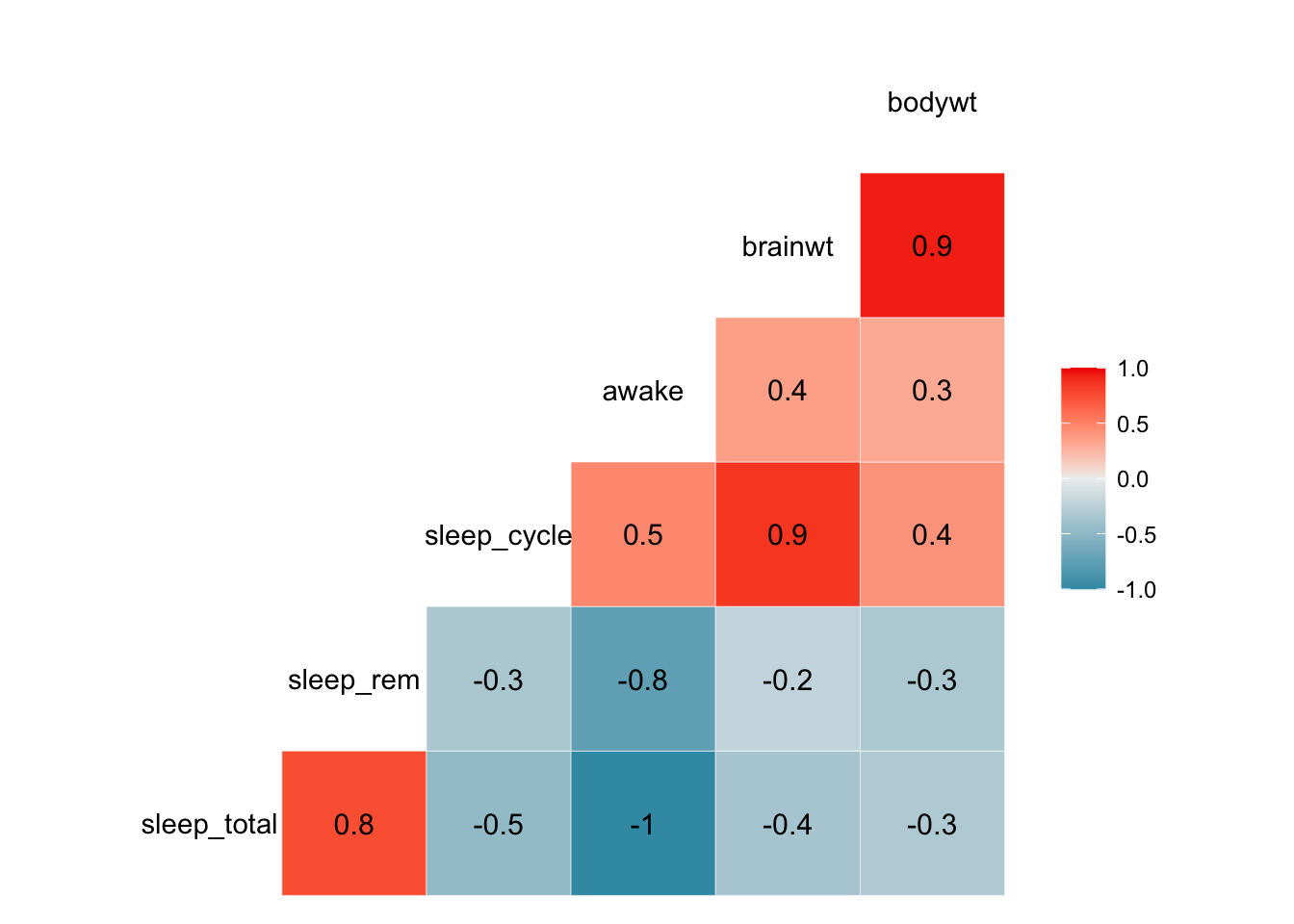

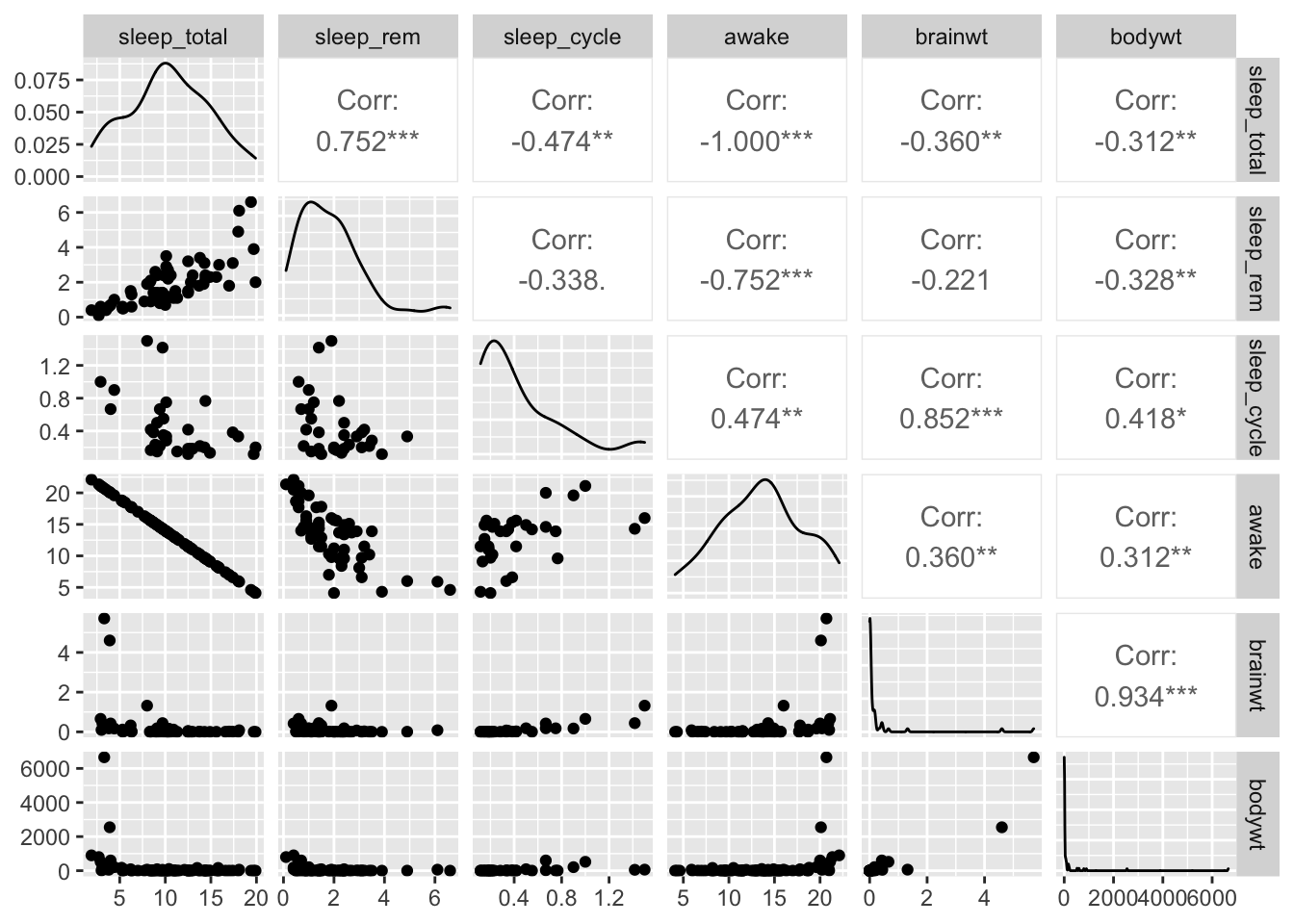

We might want to look at the relationships between all of our continuous variables. A good way to do this is to use a visualization of correlation. As a reminder, correlation is a measure of the relationship or interdependence of two variables. In other words, it describes how much the values of one variable change with the values of another. Correlation can be either positive or negative and it ranges from -1 to 1, with 1 and -1 indicating perfect correlation (1 being positive and -1 being negative) and 0 indicating no linear correlation. We will describe this in greater detail when we look at associations.

Here are some very useful plots that can be generated using the GGally package and the PerformanceAnalytics package to examine if variables are correlated.

## Registered S3 method overwritten by 'GGally':

## method from

## +.gg ggplot2

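We can see from these plots that the awake variable and the sleep_total variable are perfectly (negatively) correlated: every hour an animal spends asleep is an hour it is not awake. This becomes important for choosing what to include in models when we try to perform prediction or inference analyses, since including both variables would add no new information.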

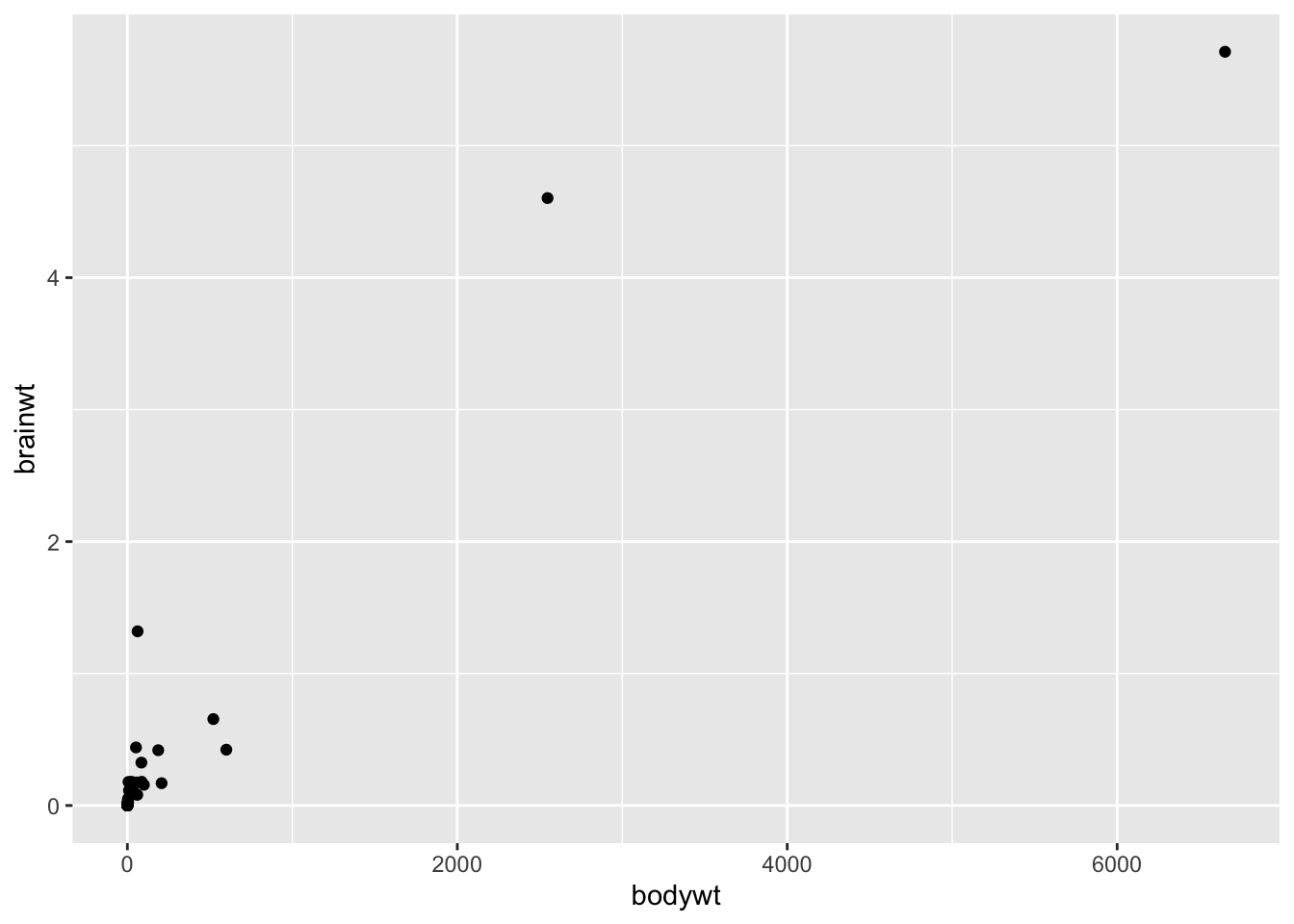

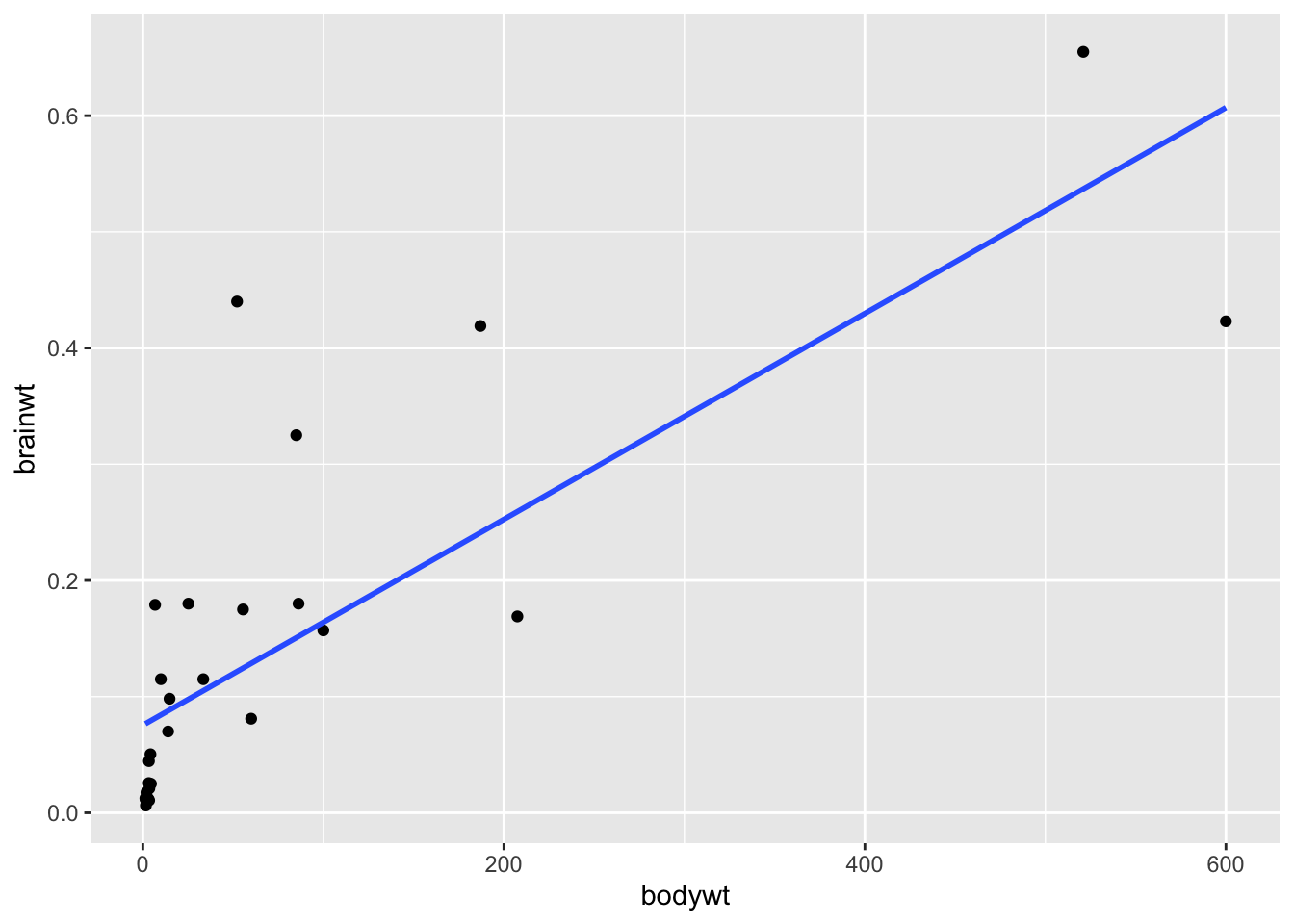
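The plotting code itself is not shown here. As a rough sketch, the correlations behind such plots can be computed directly with base R's cor(). The example assumes that `df` is ggplot2's msleep dataset; the commented call to GGally::ggpairs() is one possible way to draw a plot matrix and requires that package.

```r
# Assumption: `df` is ggplot2's msleep dataset.
library(ggplot2)
library(dplyr)

df <- msleep

# Keep only the numeric columns
numeric_vars <- df %>% select(where(is.numeric))

# Pairwise Pearson correlations; missing values are dropped pair by pair
cor_matrix <- cor(numeric_vars, use = "pairwise.complete.obs")
round(cor_matrix, 2)

# The awake / sleep_total entry is essentially -1
cor_matrix["awake", "sleep_total"]

# With GGally installed, a plot matrix of the same variables could be drawn with:
# GGally::ggpairs(numeric_vars)
```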

We may be especially interested in how brain weight (brainwt) relates to body weight (bodywt). We might assume that these two variables are related to one another.

Here is a plot of these two variables including the elephant data:

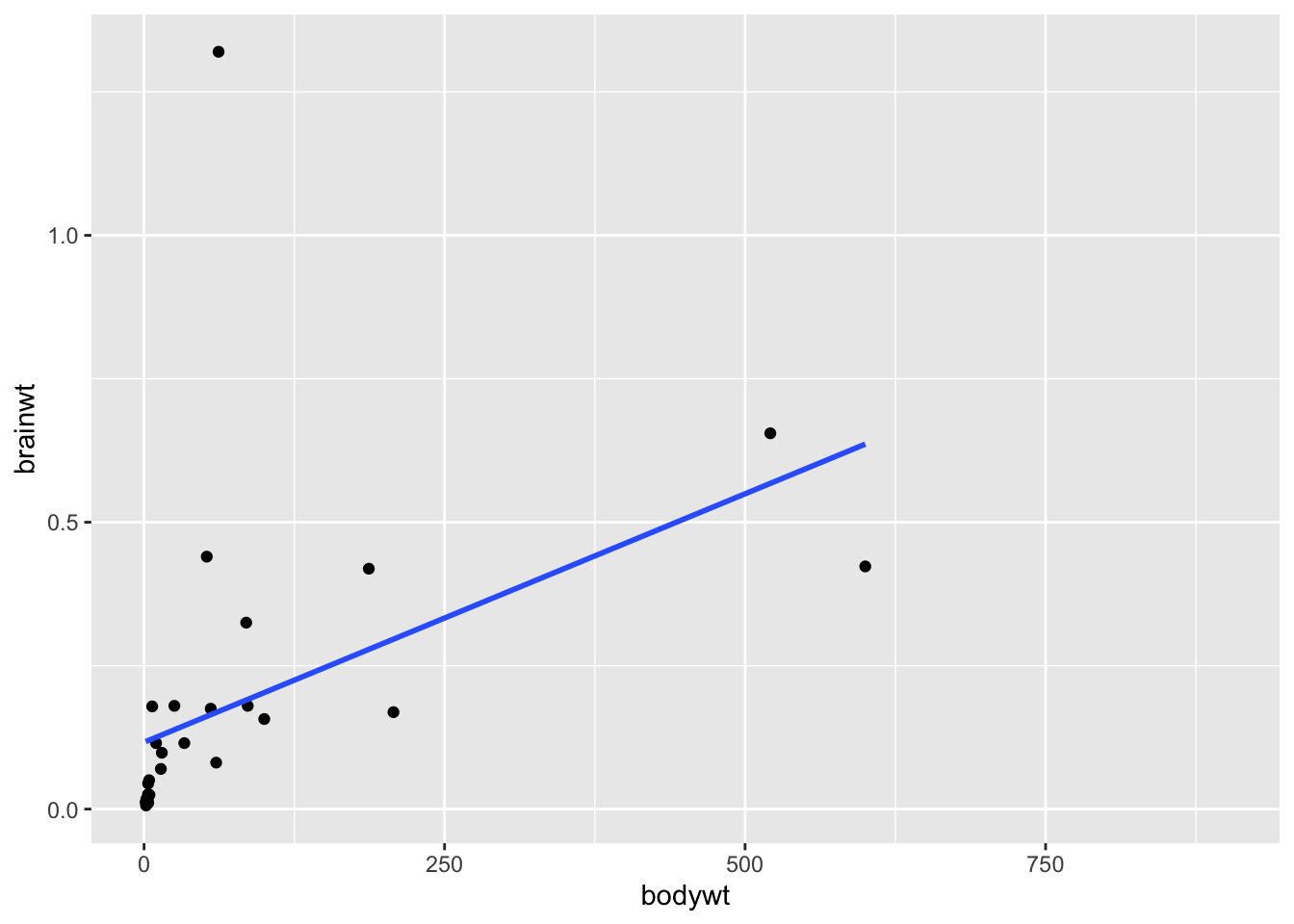

Clearly, including the elephant data points makes it hard to look at the other data points. It is possible that these points are driving the positive correlation that we get when we use all the data. Here is a plot of the relationship between these two variables excluding the elephant data and the very low body weight organisms:

library(ggplot2)

df %>%

  filter(bodywt < 2000 & bodywt > 1) %>%

  ggplot(aes(x = bodywt, y = brainwt)) +

  geom_point() +

  geom_smooth(method = "lm", se = FALSE)

## `geom_smooth()` using formula = 'y ~ x'

cor.test(pull(df %>% filter(bodywt < 2000 & bodywt > 1), bodywt),

  pull(df %>% filter(bodywt < 2000 & bodywt > 1), brainwt))

##

## Pearson's product-moment correlation

##

## data: pull(df %>% filter(bodywt < 2000 & bodywt > 1), bodywt) and pull(df %>% filter(bodywt < 2000 & bodywt > 1), brainwt)

## t = 2.7461, df = 28, p-value = 0.01042

## alternative hypothesis: true correlation is not equal to 0

## 95 percent confidence interval:

## 0.1203262 0.7040582

## sample estimates:

## cor

## 0.4606273

We can see from this plot that, in general, brainwt is correlated with bodywt. In other words, brainwt tends to increase with bodywt.

But it also looks like we have an outlier for our brainwt variable! There is a very high brainwt value that is greater than 1.

We can also see it in our histogram of this variable:

Let’s see which organism this is:

## # A tibble: 3 × 11

## name genus vore order conservation sleep_total sleep_rem sleep_cycle awake

## <chr> <chr> <chr> <chr> <chr> <dbl> <dbl> <dbl> <dbl>

## 1 Asian … Elep… herbi Prob… en 3.9 NA NA 20.1

## 2 Human Homo omni Prim… <NA> 8 1.9 1.5 16

## 3 Africa… Loxo… herbi Prob… vu 3.3 NA NA 20.7

## # ℹ 2 more variables: brainwt <dbl>, bodywt <dbl>

It is humans! Let’s see what the plot looks like without humans:

library(ggplot2)

df %>%

  filter(bodywt < 2000 & bodywt > 1 & brainwt < 1) %>%

  ggplot(aes(x = bodywt, y = brainwt)) +

  geom_point() +

  geom_smooth(method = "lm", se = FALSE)

## `geom_smooth()` using formula = 'y ~ x'

cor.test(pull(df %>% filter(bodywt < 2000 & bodywt > 1 & brainwt < 1), bodywt),

  pull(df %>% filter(bodywt < 2000 & bodywt > 1 & brainwt < 1), brainwt))

##

## Pearson's product-moment correlation

##

## data: pull(df %>% filter(bodywt < 2000 & bodywt > 1 & brainwt < 1), bodywt) and pull(df %>% filter(bodywt < 2000 & bodywt > 1 & brainwt < 1), brainwt)

## t = 6.6127, df = 27, p-value = 4.283e-07

## alternative hypothesis: true correlation is not equal to 0

## 95 percent confidence interval:

## 0.5897381 0.8949042

## sample estimates:

## cor

## 0.7862926

We can see from these plots that brainwt has a fairly strong positive relationship with bodywt when humans are excluded (correlation = 0.79), and that this relationship is weaker when humans are included (correlation = 0.46). This information would be important to keep in mind when trying to model these data to make inferences or predictions about the animals included.
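The two correlation tests above repeat the same filter several times. A slightly tidier variant, sketched here under the assumption that `df` is ggplot2's msleep dataset, stores each filtered dataset once and uses the formula interface of cor.test(). The object names are illustrative.

```r
# Assumption: `df` is ggplot2's msleep dataset.
library(ggplot2)
library(dplyr)

df <- msleep

# Apply each filter once and reuse the result
with_humans    <- df %>% filter(bodywt < 2000, bodywt > 1)
without_humans <- with_humans %>% filter(brainwt < 1)

# The formula interface avoids repeating pull(); incomplete pairs are dropped
test_with    <- cor.test(~ bodywt + brainwt, data = with_humans)
test_without <- cor.test(~ bodywt + brainwt, data = without_humans)

c(with_humans    = unname(test_with$estimate),
  without_humans = unname(test_without$estimate))
```

Comparing the two estimates side by side makes the influence of a single observation easy to see.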

5.6 Inference

Inferential analysis is what analysts carry out after they’ve described and explored their dataset. Having understood the dataset better, analysts often try to infer something from the data. This is done using statistical tests.

We discussed a bit about how we can use models to perform inference and prediction analyses. What does this mean?

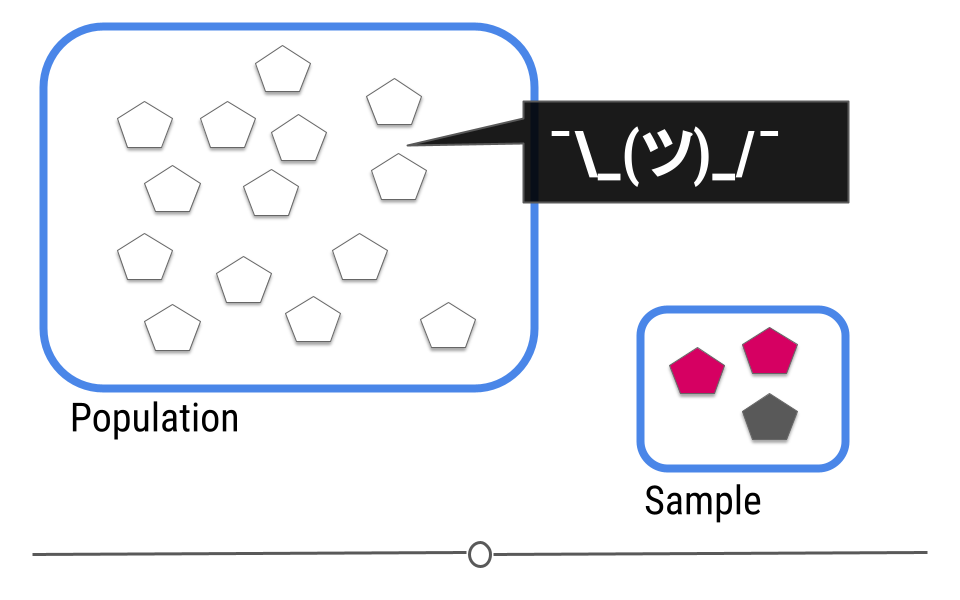

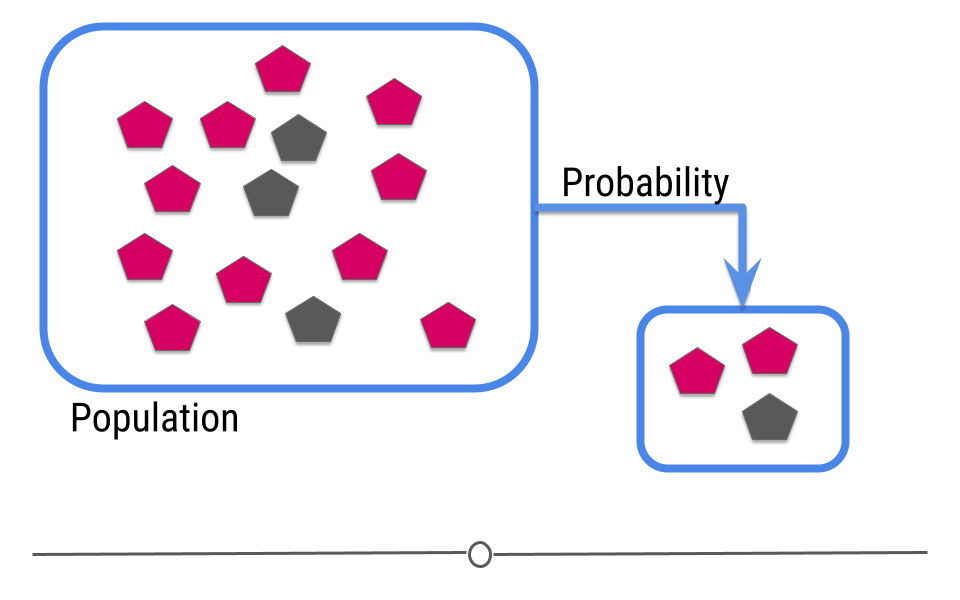

The goal of inferential analyses is to use a relatively small sample of data to infer or say something about the population at large. This is required because often we want to answer questions about a population. Let’s take a dummy example here where we have a population of 14 shapes. Here, in this graphic, the shapes represent individuals in the population and the colors of the shapes can be either pink or grey:

In this example we only have fourteen shapes in the population; however, in inferential data analysis, it’s not usually possible to sample everyone in the population. Consider if this population were everyone in the United States or every college student in the world. Because getting information from every individual would be infeasible, data are instead collected on a subset, or a sample, of the individuals in the larger population.

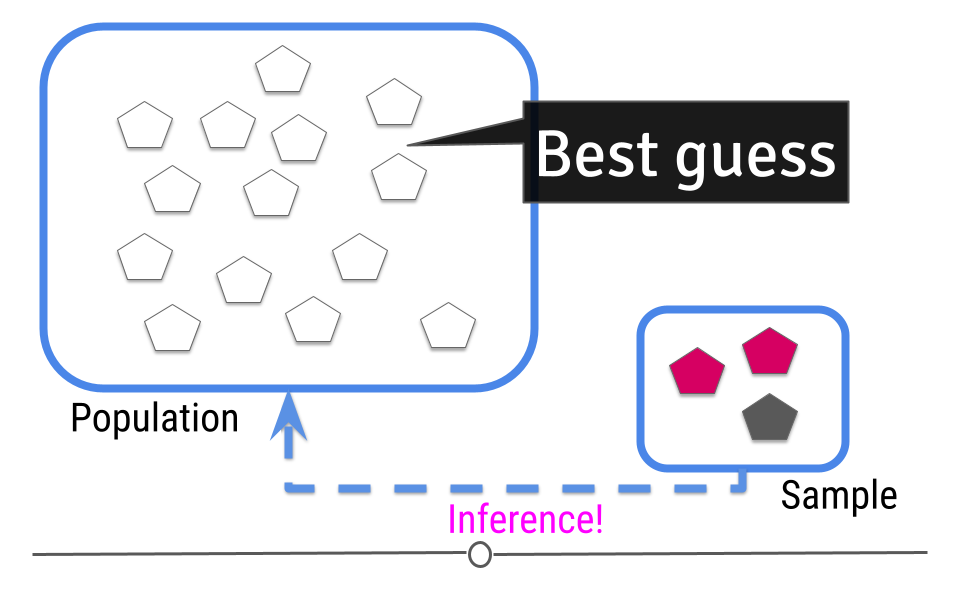

In our example, we’ve been showing you how many pink and how many grey shapes are in the larger population. However, in real life, we don’t know what the answer is in the larger population. That’s why we collected the sample!

This is where inference comes into play. We analyze the data collected in our sample and then do our best to infer what the answer is in the larger population. In other words, inferential data analysis uses data from a sample to make its best guess as to what the answer would be in the population if we were able to measure every individual.

5.6.1 Uncertainty

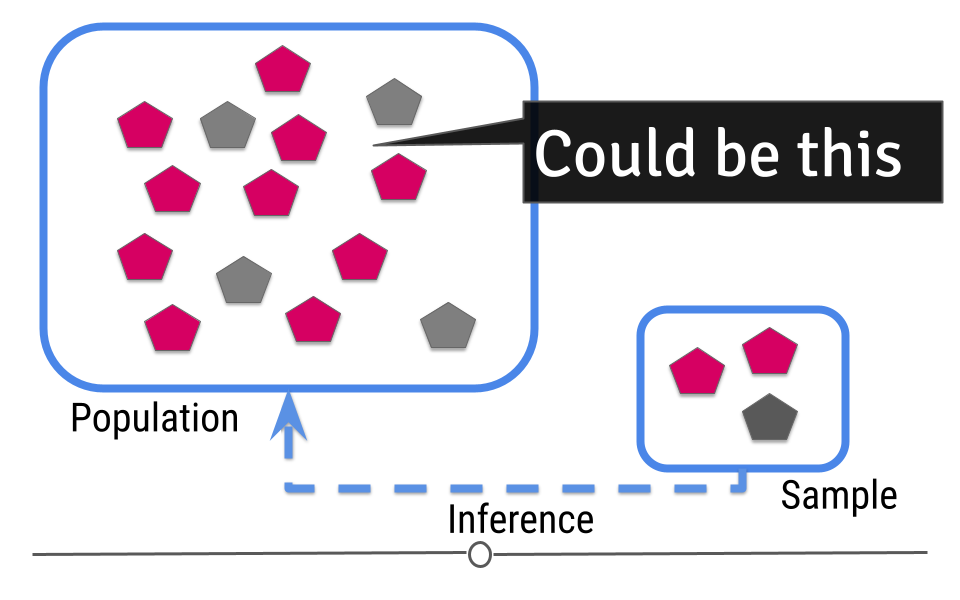

Because we haven’t directly measured the population but have only been able to take measurements on a sample of the data, we can’t be sure that our inference about the population is exactly right. For example, in our sample one-third of the shapes are grey. We’d expect about one-third of the shapes in our population to be grey then too! Well, one-third of 14 (the number of shapes in our population) is 4.667. Does this mean four shapes are truly grey?

Or maybe five shapes in the population are grey?

Given the sample we’ve taken, we can guess that 4-5 shapes in our population will be grey, but we aren’t certain exactly what that number is. In statistics, this “best guess” is known as an estimate. This means that we estimate that 4.667 shapes will be grey. But because this is only our best guess, there is uncertainty in that number. Inferential data analysis includes generating both the estimate and a measure of the uncertainty around that estimate.
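To make the idea of "an estimate plus a measure of uncertainty" concrete, here is a small sketch. It assumes a sample of three shapes, one of them grey, and uses an exact binomial confidence interval as one possible measure of uncertainty. That choice is an assumption for illustration, not a method named in the text.

```r
# Numbers from the shapes example: a sample of 3 shapes, 1 of them grey,
# drawn from a population of 14 shapes.
n_sample     <- 3
n_grey       <- 1
n_population <- 14

# Point estimate of the proportion of grey shapes, scaled to the population
p_hat         <- n_grey / n_sample
estimate_grey <- p_hat * n_population
estimate_grey  # about 4.667

# One possible measure of uncertainty: an exact binomial 95% confidence
# interval for the proportion (this treats draws as independent, which is
# only an approximation for such a small population).
ci <- binom.test(n_grey, n_sample)$conf.int
ci * n_population  # the same interval expressed as a number of shapes
```

With only three observations the interval is very wide, which is exactly the point: a small sample leaves a lot of uncertainty around the estimate.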

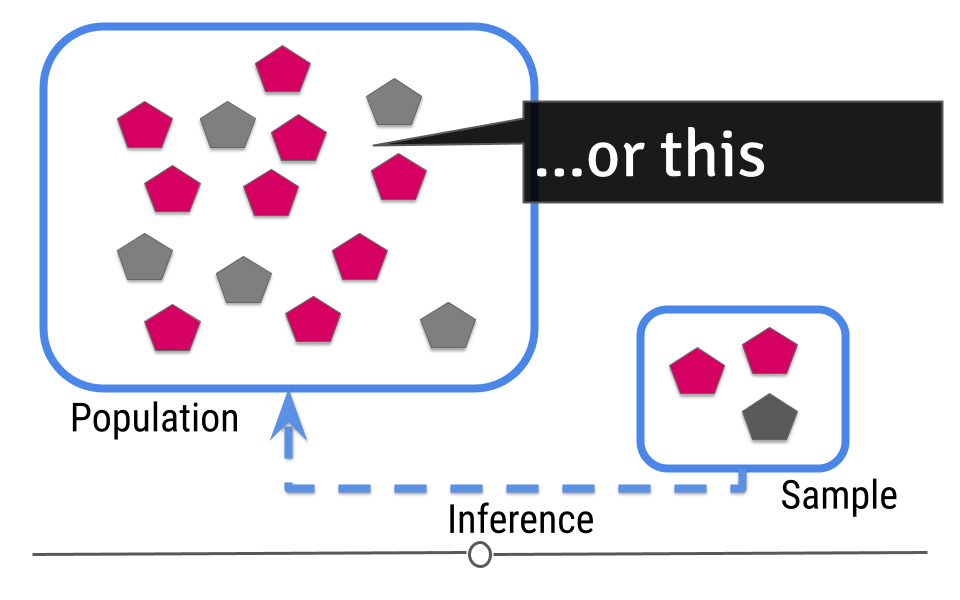

Let’s return to the example where we know the truth in the population. Hey look! There were actually only three grey shapes after all. It is totally possible that if you put all those shapes into a bag and pulled three out, two would be pink and one would be grey. As statisticians, we’d say that getting this sample was probable (it’s within the realm of possibility). This really drives home why it’s important to add uncertainty to your estimate whenever you’re doing inferential analysis!
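How probable is such a sample? Assuming the population described above (14 shapes, 3 grey and 11 pink) and a random draw of three shapes without replacement, the chance can be worked out with the hypergeometric distribution. The variable names below are illustrative.

```r
# Population from the example: 14 shapes, of which 3 are grey and 11 are pink.
n_grey <- 3
n_pink <- 11
n_draw <- 3

# Probability that a random draw of 3 shapes contains exactly 1 grey shape
p_one_grey <- dhyper(1, m = n_grey, n = n_pink, k = n_draw)
p_one_grey  # 165/364, roughly 0.45

# Full distribution of the number of grey shapes in the draw (0 to 3)
dhyper(0:3, m = n_grey, n = n_pink, k = n_draw)
```

So a sample with one grey and two pink shapes would turn up a bit less than half the time, even though it suggests a higher share of grey shapes than the population really has.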

5.6.2 Random Sampling

Since you are moving from a small amount of data and trying to generalize to a larger population, your ability to accurately infer information about the larger population depends heavily on how the data were sampled.

The data in your sample must be representative of your larger population to be used for inferential data analysis. Let’s discuss what this means.

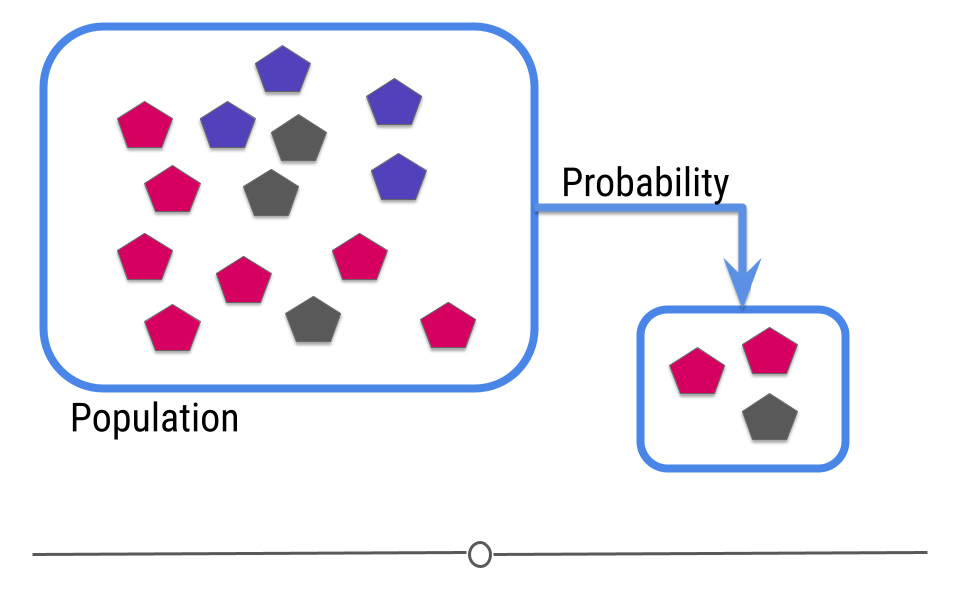

Using the same example, what if, in your larger population, you didn’t just have grey and pink shapes, but you also had blue shapes?

Well, if your sample only has pink and grey shapes, when you go to make an inference, there’s no way you’d infer that there should be blue shapes in your population since you didn’t capture any in your sample.

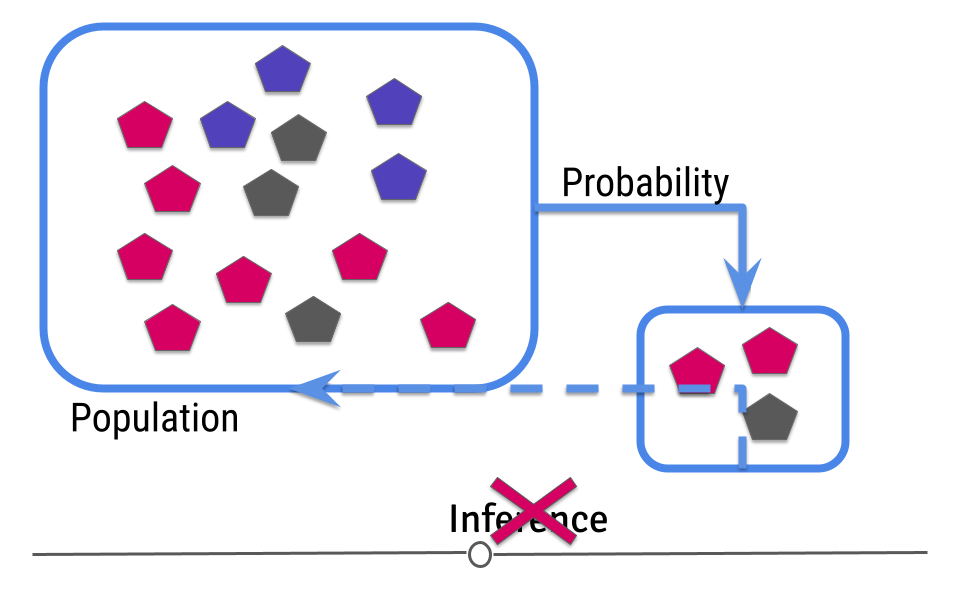

In this case, your sample is not representative of your larger population. In cases where you do not have a representative sample, you cannot carry out inference, since you will not be able to correctly infer information about the larger population.

This means that you have to design your analysis so that you’re collecting representative data and that you have to check your data after data collection to make sure that you were successful.

You may at this point be thinking to yourself, “Wait a second. I thought I didn’t know what the truth was in the population. How can I make sure it’s representative?” Good point! With regard to the measurement you’re making (color distribution of the shapes, in this example), you don’t know the truth. But you should know other information about the population. What is the age distribution of your population? Your sample should have a similar age distribution. What proportion of your population is female? If it’s half, then about half of your sample should be female. Your data collection procedure should be set up to ensure that the sample you collect is representative of (very similar to) your larger population. Then, once the data are collected, your descriptive analysis should check that the data you’ve collected are in fact representative of your larger population. Randomly sampling your larger population helps ensure that the inference you make about the measurement of interest (color distribution of the shapes) will be as accurate as possible.

To reiterate: If the data you collect are not from a representative sample of the population, the generalizations you infer won’t be accurate for the population.
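The following sketch illustrates random sampling and a simple representativeness check. The population here is entirely hypothetical (10,000 people, half of them female); only base R is used.

```r
set.seed(42)  # for reproducibility

# Hypothetical population of 10,000 people with a known sex distribution
population <- data.frame(
  id  = 1:10000,
  sex = rep(c("female", "male"), each = 5000)
)

# Simple random sample of 500 individuals
sampled_rows  <- sample(nrow(population), size = 500)
random_sample <- population[sampled_rows, ]

# Compare a known characteristic between population and sample
prop.table(table(population$sex))
prop.table(table(random_sample$sex))
```

If the proportions in the sample were far from those in the population, that would be a warning sign that the sample is not representative.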

5.6.2.1 An example of inferential data analysis

Unlike in our previous examples, census data wouldn’t be used for inferential analysis. By definition, a census already collects information on (functionally) the entire population. Thus, there is no larger population about which to infer. Census data are the rare exception where a whole population is included in the dataset. Further, using data from the US census to infer information about another country would not be a good idea because the US isn’t necessarily representative of the other country.

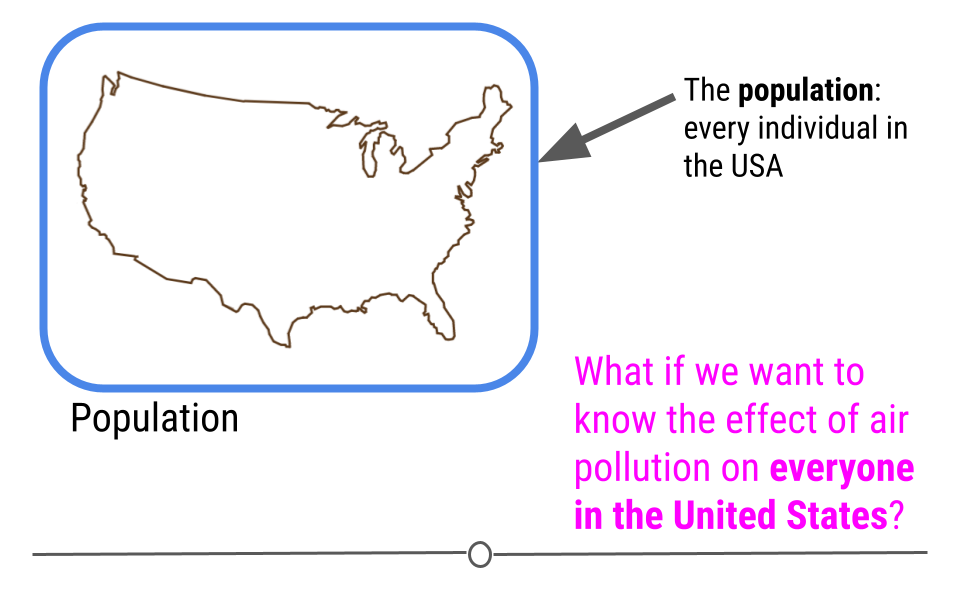

Instead, a better example of a dataset on which to carry out inferential analysis would be the data used in the study: The Effect of Air Pollution Control on Life Expectancy in the United States: An Analysis of 545 US counties for the period 2000 to 2007. In this study, researchers set out to understand the effect of air pollution on everyone in the United States.

To answer this question, a subset of the US population was studied, and the researchers looked at the level of air pollution experienced and life expectancy. It would have been nearly impossible to study every individual in the United States year after year. Instead, the researchers used the data they collected from a sample of the US population to infer how air pollution might be impacting life expectancy in the entire US!

5.7 Linear Modeling

5.7.1 Linear Regression

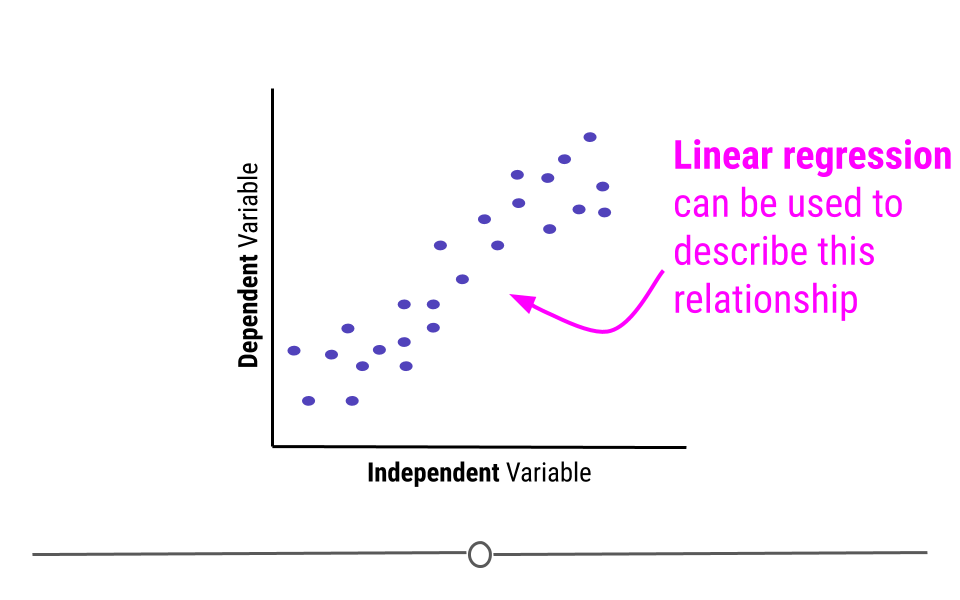

Inferential analysis is commonly the goal of statistical modeling, where you use a small amount of information to extrapolate and generalize to a larger group. One of the most common approaches used in statistical modeling is known as linear regression. Here, we’ll discuss how to recognize when using linear regression is appropriate, how to carry out the analysis in R, and how to interpret the results from this statistical approach.

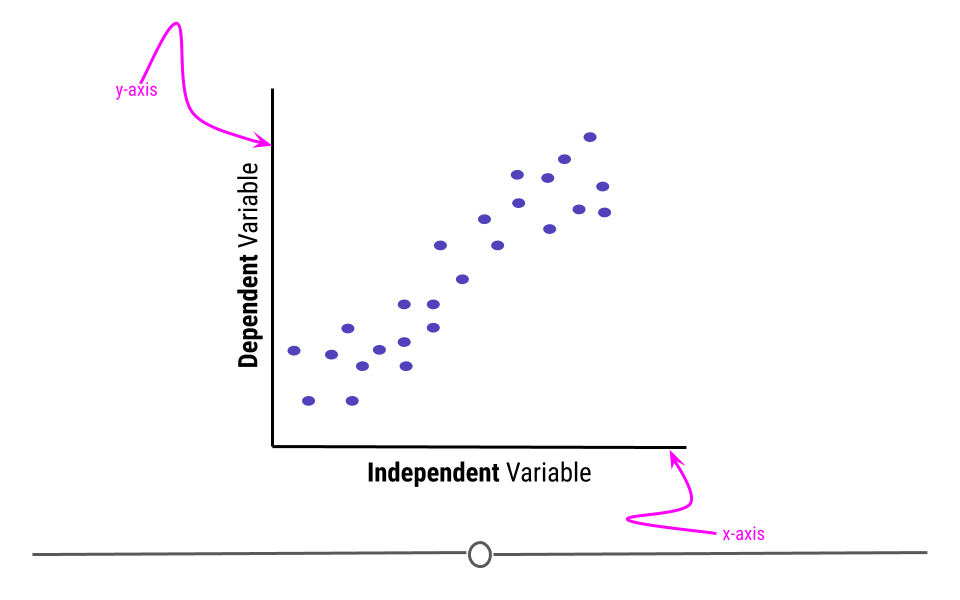

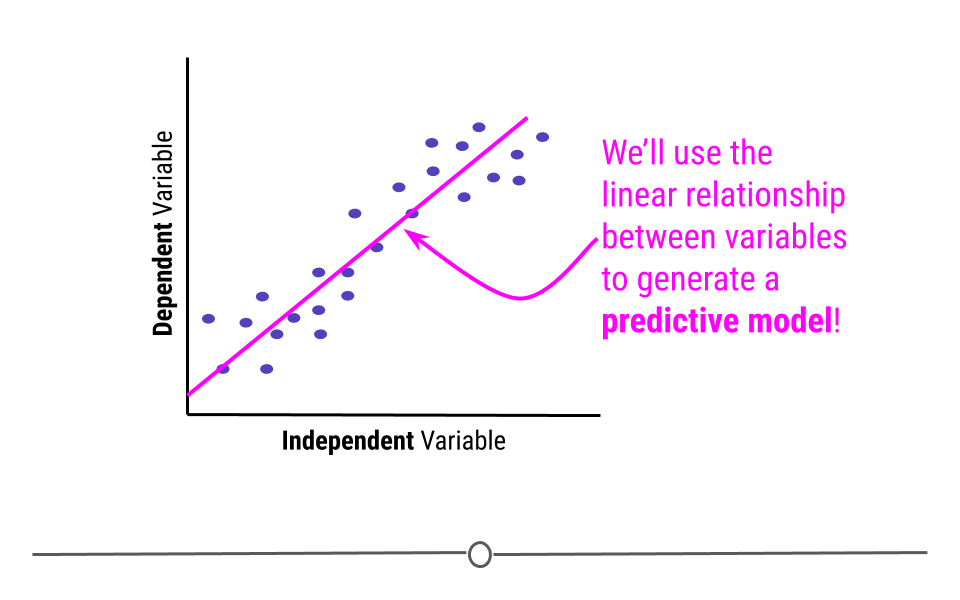

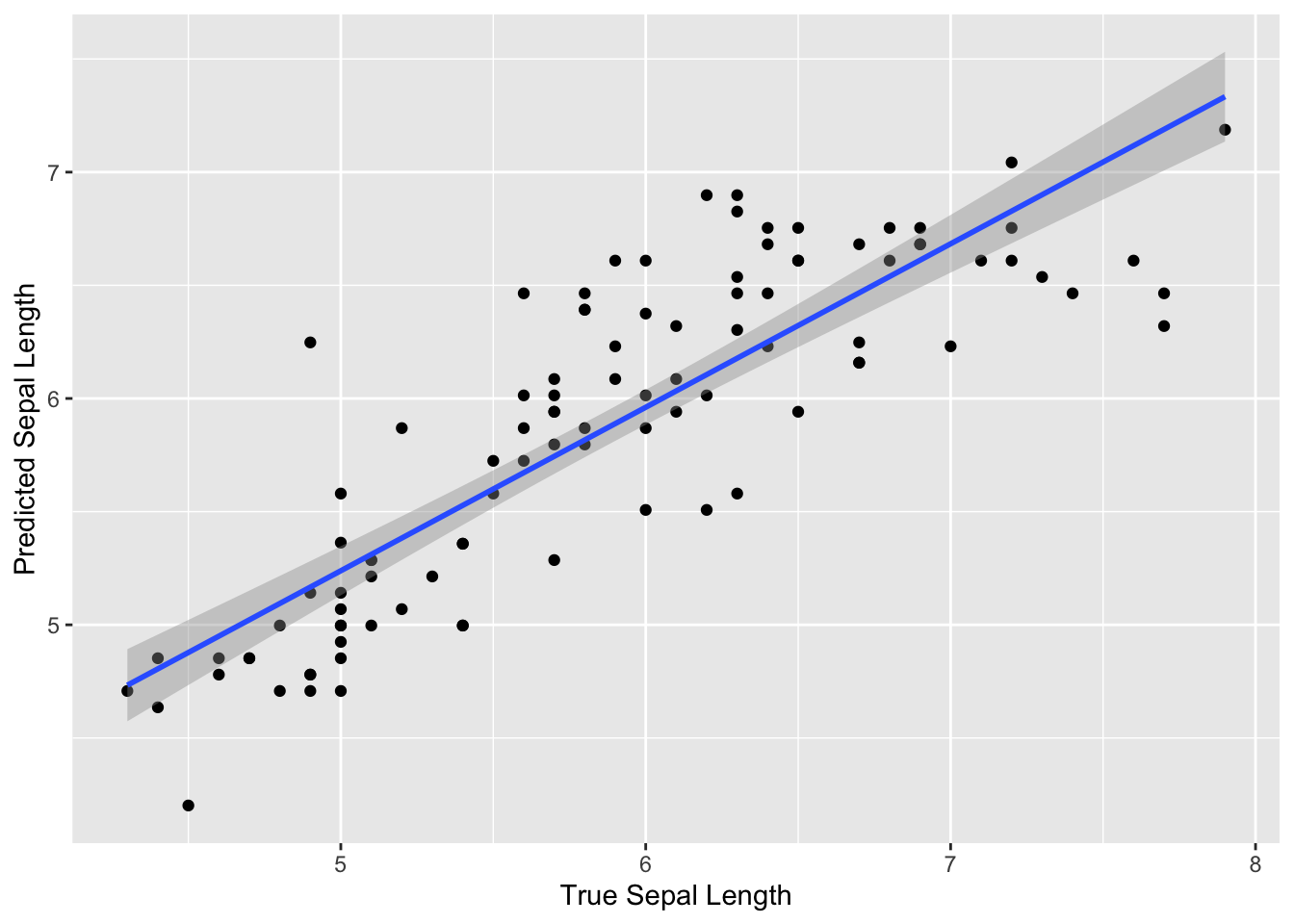

When discussing linear regression, we’re trying to describe (model) the relationship between a dependent variable and an independent variable.

When visualizing a linear relationship, the independent variable is plotted along the bottom of the graph, on the x-axis, and the dependent variable is plotted along the side of the plot, on the y-axis.

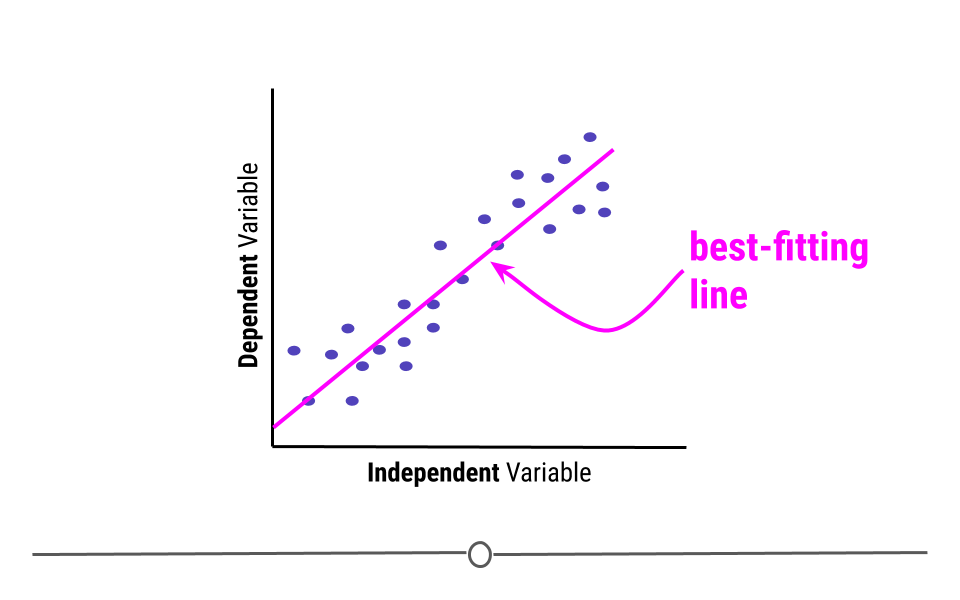

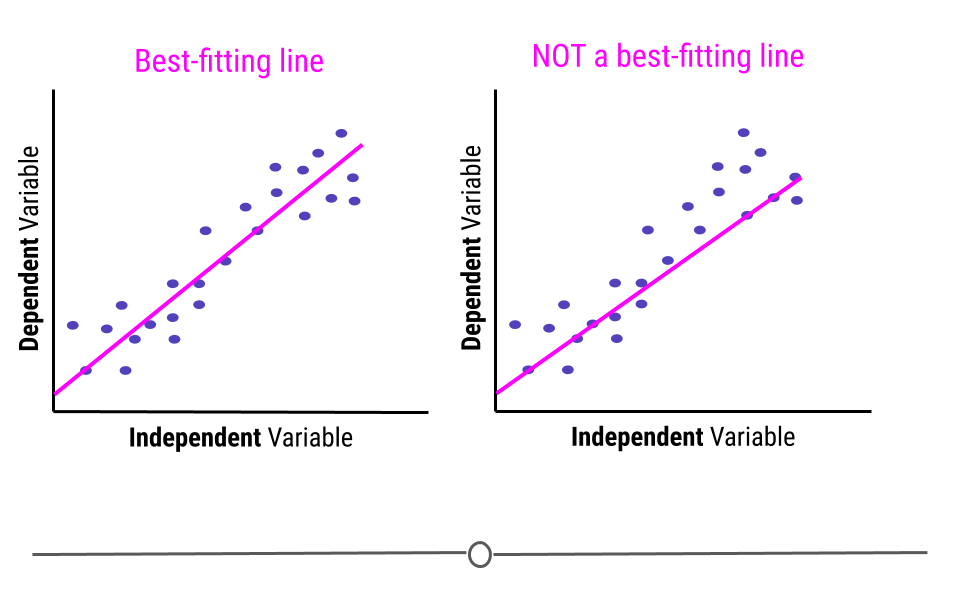

When carrying out linear regression, a best-fitting line is drawn through the data points to describe the relationship between the variables.

A best-fitting line, technically speaking, minimizes the sum of the squared errors. In simpler terms, this means that the best-fitting line is the one that minimizes the overall (squared) vertical distance of the points from the line. As a rough visual guide, there are often about the same number of points above the line as there are below it. More precisely, the total distance from the line for the points above the line will be the same as the total distance for the points below the line.

Note that the best-fitting line does not have to go through any points to be the best-fitting line. Here, on the right, we see a line that goes through seven points on the plot (rather than the four the best-fitting line goes through, on the left). However, this is not a best-fitting line, as there are many more points above the line than there are below the line.
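To see what "minimizes the sum of the squared errors" means in practice, here is a small sketch on simulated data (the data and the helper function `sse()` are made up for illustration). It compares the fitted line against two slightly different lines and checks that the residuals above and below the fitted line cancel out.

```r
set.seed(1)

# Simulated data (illustrative only): a linear trend plus noise
x <- 1:30
y <- 3 + 2 * x + rnorm(30, sd = 4)

fit <- lm(y ~ x)

# Sum of squared errors for any candidate line
sse <- function(intercept, slope) sum((y - (intercept + slope * x))^2)

b <- coef(fit)
sse_best    <- sse(b[1], b[2])
sse_shifted <- sse(b[1] + 1, b[2])     # same slope, line moved up
sse_tilted  <- sse(b[1], b[2] + 0.1)   # same intercept, steeper line

c(best = sse_best, shifted = sse_shifted, tilted = sse_tilted)

# Distances above and below the fitted line cancel out (up to rounding)
sum(residuals(fit))
```

Any line other than the fitted one gives a larger sum of squared errors, which is what makes the fitted line the best-fitting one.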

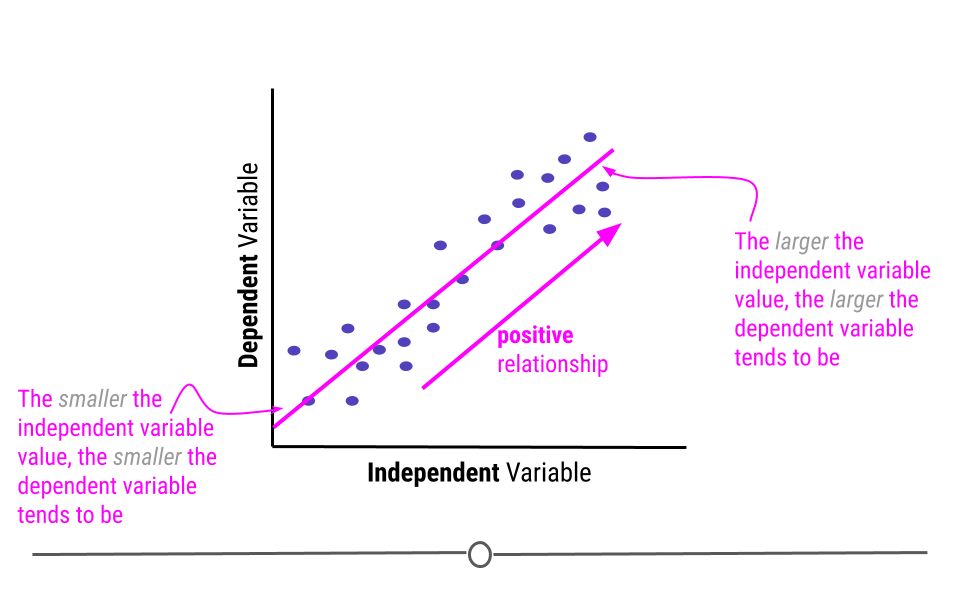

This line describes the relationship between the two variables. If you look at the direction of the line, it will tell you whether there is a positive or a negative relationship between the variables. In this case, the larger the value of the independent variable, the larger the value of the dependent variable. Similarly, the smaller the value of the independent variable, the smaller the value of the dependent variable. When this is the case, there is a positive relationship between the two variables.

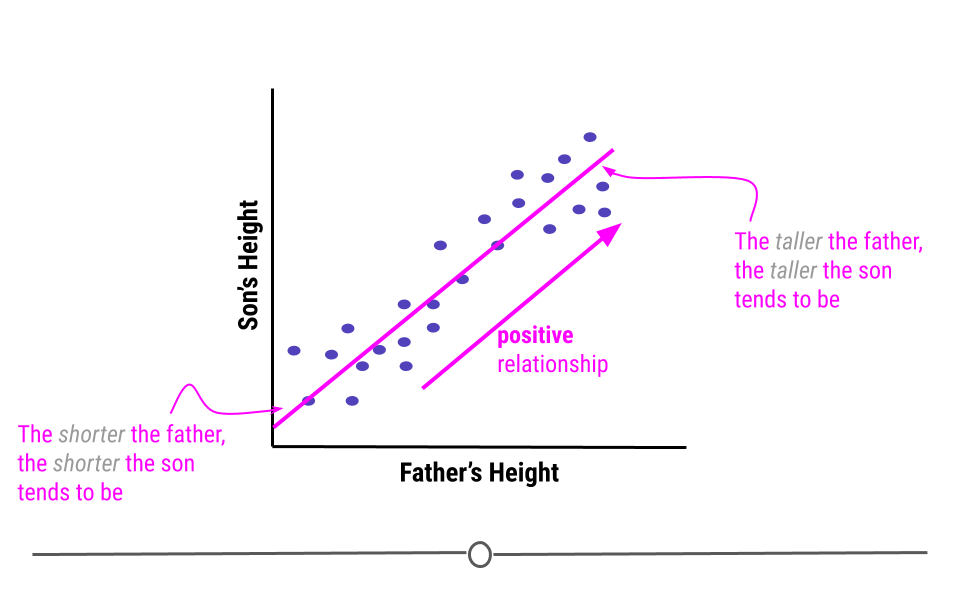

An example of variables that have a positive relationship would be the height of fathers and their sons. In general, the taller a father is, the taller his son will be. And, the shorter a father is the more likely his son is to be short.

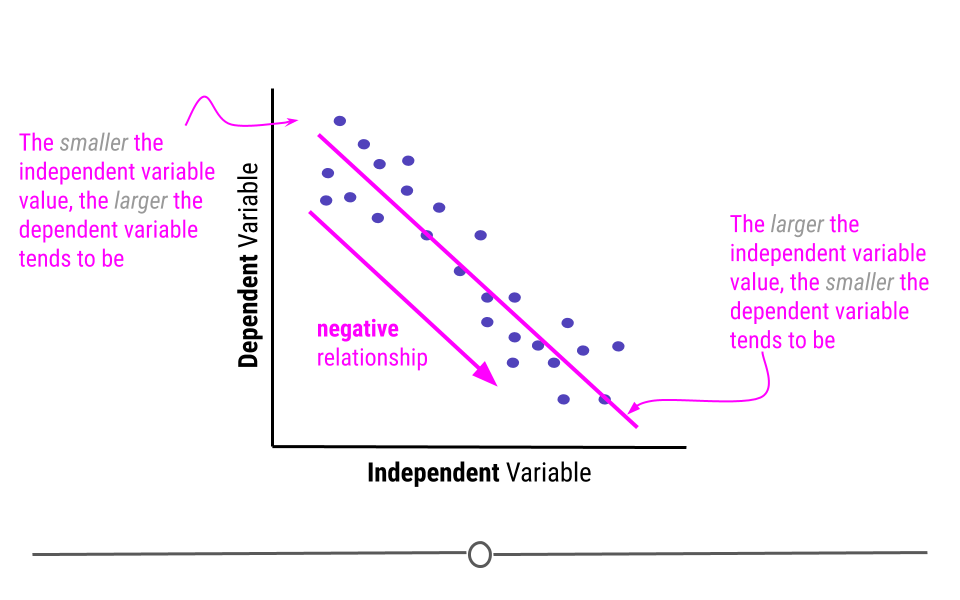

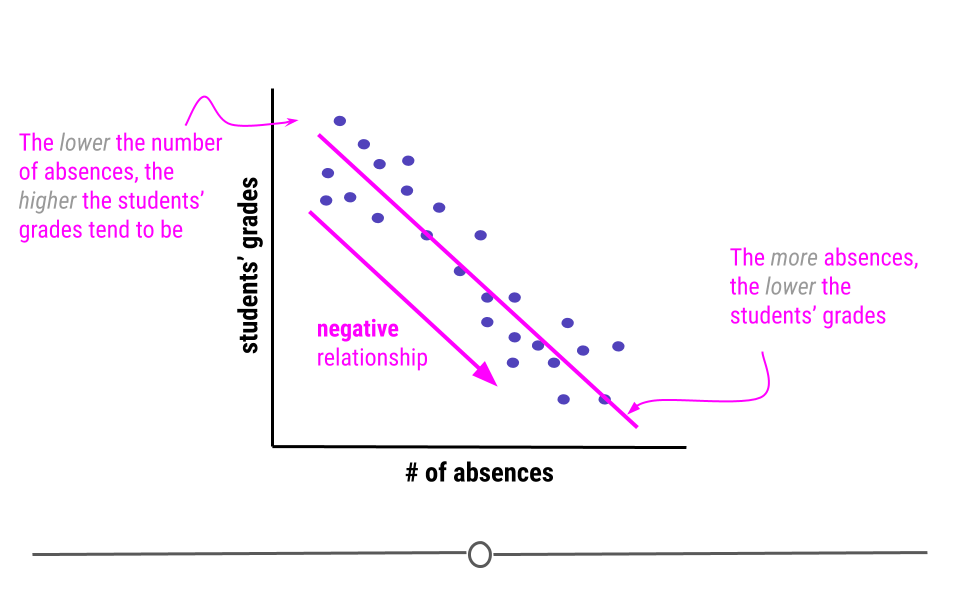

Alternatively, when the higher the value of the independent variable, the lower the value of the dependent variable, this is a negative relationship.

An example of variables that have a negative relationship would be the relationship between a student’s absences and their grades. The more absences a student has, the lower their grades tend to be.

In addition to describing the direction of the relationship, linear regression can also be used to determine the strength of that relationship.

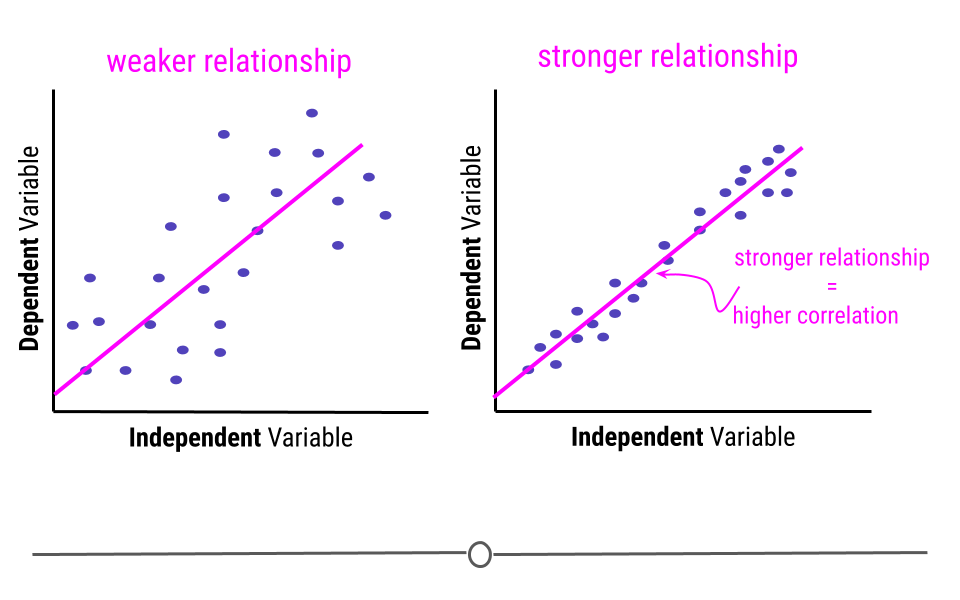

This is because the assumption with linear regression is that the true relationship is being described by the best-fitting line. Any points that fall away from the line do so due to error. This means that if all the points fall directly on top of the line, there is no error. The further the points fall from the line, the greater the error. When points are further from the best-fitting line, the relationship between the two variables is weaker than when the points fall closer to the line.

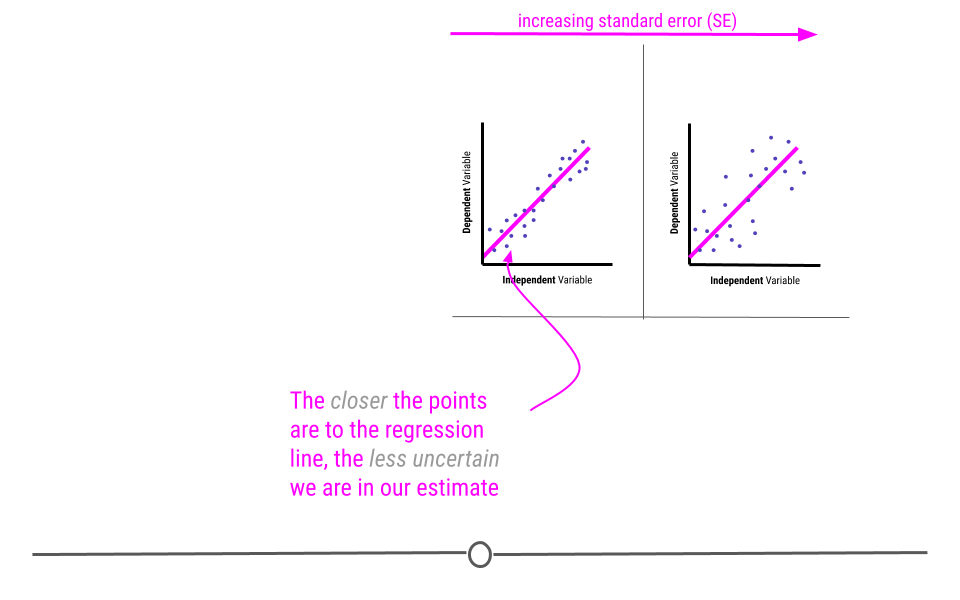

In this example, the pink line is exactly the same best-fitting line in each graph. However, on the left, where the points fall further from the line, the strength of the relationship between these two variables is weaker than on the right, where the points fall closer to the line, where the relationship is stronger. The strength of this relationship is measured using correlation. The closer the points are to the line the more correlated the two variables are, meaning the relationship between the two variables is stronger.
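The link between scatter around the line and correlation can be illustrated with simulated data. In this sketch (all values are made up), the same underlying line is used twice with two different amounts of noise.

```r
set.seed(2)

# Simulated data (illustrative only): one underlying line, two noise levels
x       <- 1:50
y_tight <- 5 + 2 * x + rnorm(50, sd = 5)    # points close to the line
y_loose <- 5 + 2 * x + rnorm(50, sd = 40)   # points far from the line

# The underlying line is the same in both cases, but the correlation differs
cor(x, y_tight)
cor(x, y_loose)
```

The noisier data give a noticeably lower correlation, even though the underlying relationship between x and y is the same.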

5.7.2 Assumptions

Thus far we have focused on drawing linear regression lines. Linear regression lines can be drawn on any plot, but just because you can do something doesn’t mean you actually should. Before carrying out any inference on the relationship between two variables using linear regression, there are a few assumptions that must hold.

The assumptions of simple linear regression that we’ll focus on here are linearity, homoscedasticity, and normality of residuals.

5.7.2.1 Linearity

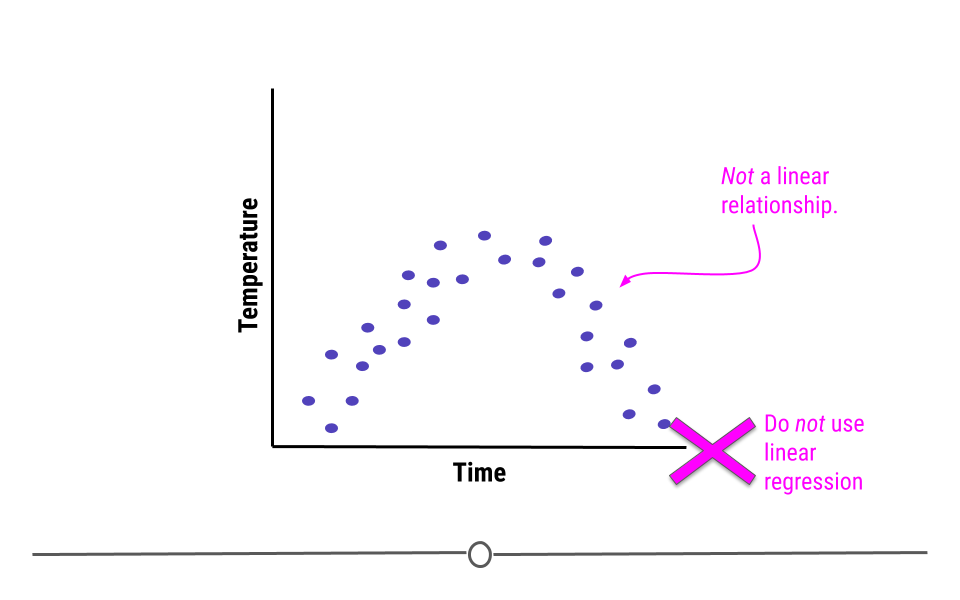

The relationship between the two variables must be linear.

For example, what if we were plotting data from a single day and looking at the relationship between temperature and time? Well, we know that generally temperature increases throughout the day and then decreases in the evening. Here, we see some example data reflective of this relationship. The upside-down u-shape of the data suggests that the relationship is not in fact linear. While we could draw a straight line through these data, it would be inappropriate. In cases where the relationship between the variables cannot be well-modeled with a straight line, linear regression should not be used.

5.7.2.2 Homoscedasticity

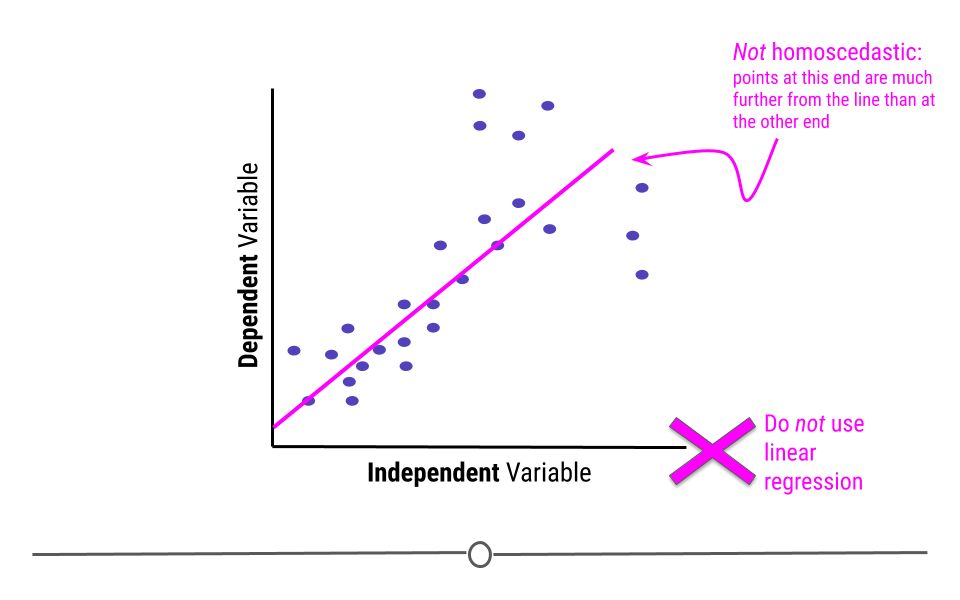

In addition to displaying a linear relationship, the data must demonstrate homoscedasticity. In other words, the variance (the spread of the points around the line) must be constant across the range of the independent variable.

If points at one end are much closer to the best-fitting line than points are at the other end, homoscedasticity has been violated and linear regression is not appropriate for the data.

5.7.2.3 Normality of residuals

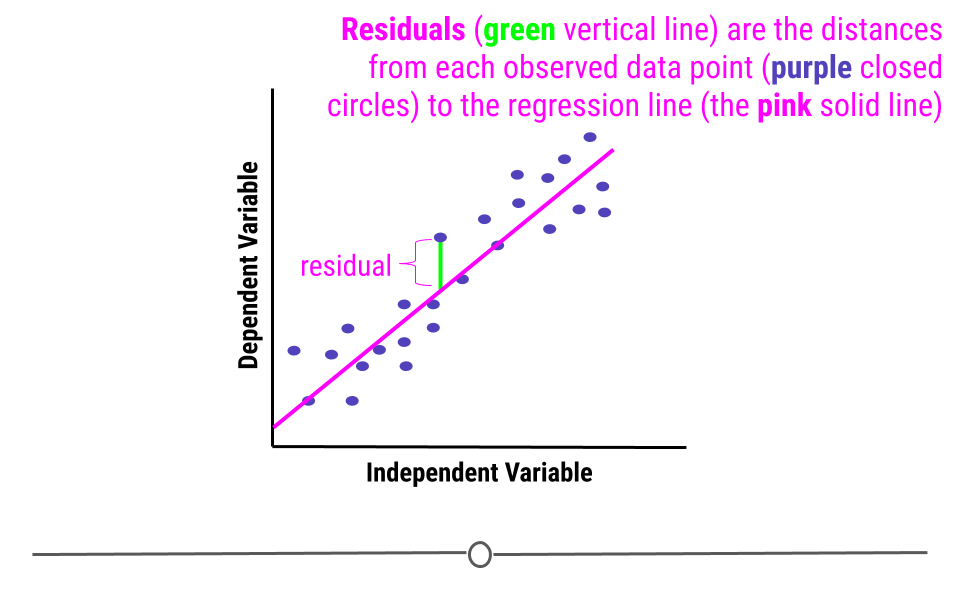

When we fit a linear regression, typically the data do not fall perfectly along the regression line. Rather, there is some distance from each point to the line. Some points are quite close to the line, while others are further away. Each point’s distance to the regression line can be calculated. This distance is the residual measurement.

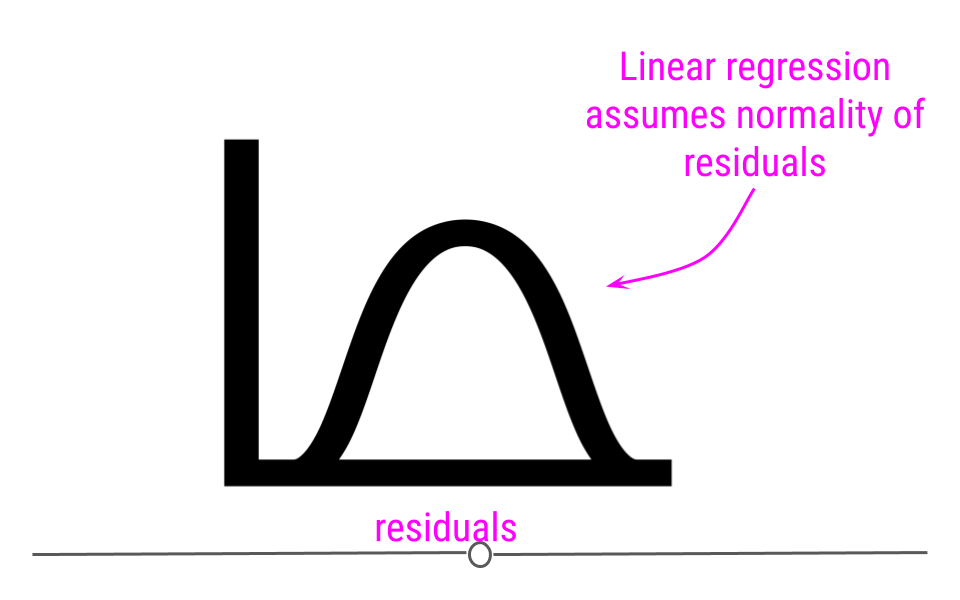

In linear regression, one assumption is that these residuals follow a Normal distribution. This means that if you were to calculate each residual (each point’s distance to the regression line) and then plot a histogram of all of those values - that plot should look like a Normal Distribution.

If you do not see normality of residuals, this can suggest that outlier values - observations more extreme than the rest of the data - may exist in your data. This can severely affect your regression results and lead you to conclude something that is untrue about your data.

Thus, it is your job, when running linear regression, to check for:

- Non-linearity

- Heteroscedasticity

- Outlier values

- Non-normality of residuals

We’ll discuss how to use diagnostic plots below to check that these assumptions have been met and that outlier values are not severely affecting your results.

What can linear regression infer?

Now that we understand what linear regression is and what assumptions must hold for its use, when would you actually use it? Linear regression can be used to answer many different questions about your data. Here we’ll discuss specifically how to make inferences about the relationship between two numeric variables.

5.7.3 Association

Often when people are carrying out linear regression, they are looking to better understand the relationship between two variables. When looking at this relationship, analysts are specifically asking “What is the association between these two variables?” Association between variables describes the trend in the relationship (positive, neutral, or negative) and the strength of that relationship (how correlated the two variables are).

After determining that the assumptions of linear regression are met, in order to determine the association between two variables, one would carry out a linear regression. From the linear regression, one would then interpret the Beta estimate and the standard error from the model.

5.7.3.1 Beta estimate

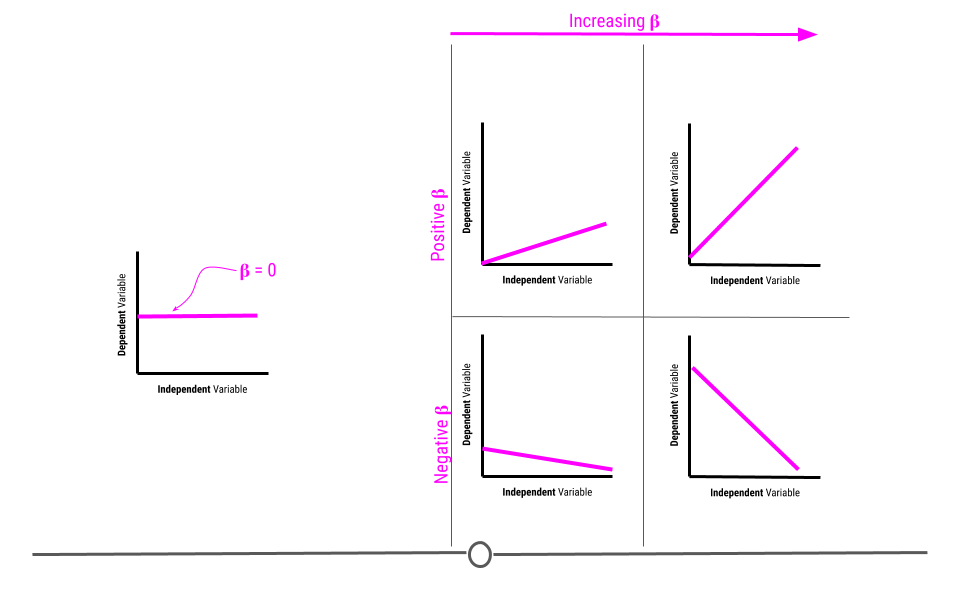

The beta estimate determines the direction and strength of the relationship between the two variables.

A beta of zero suggests there is no association between the two variables. However, if the beta value is positive, the relationship is positive. If the value is negative, the relationship is negative. Further, the larger the number, the bigger the effect is. We’ll discuss effect size and how to interpret the value in more detail later in this lesson.

5.7.3.2 Standard error

The standard error determines how uncertain the beta estimate is. The larger the standard error, the more uncertain we are in the estimate. The smaller the standard error, the less uncertain we are in the estimate.

Standard errors are calculated based on how well the best-fitting line models the data. The closer the points are to the line, the lower the standard error will be, reflecting our decreased uncertainty. However, as the points are further from the regression line, our uncertainty in the estimate will increase, and the standard error will be larger.

Remember when carrying out inferential data analysis, you will always want to report an estimate and a measure of uncertainty. For linear regression, this will be the beta estimate and the standard error.

You may have heard talk of p-values at some point. People tend to use p-values to describe the strength of their association because of their simplicity. The p-value is a single number that takes into account both the estimate (beta estimate) and the uncertainty in that estimate (SE). The lower a p-value, the more significant the association is between two variables. However, while it is a simple value, it doesn’t convey nearly as much information as reporting the estimates and standard errors directly. Thus, if you’re reporting p-values, it’s best to include the estimates and standard errors as well.

That said, the general interpretation of a p-value is “the probability of getting the observed results (or results more extreme) by chance alone,” that is, if there were truly no association. Since it’s a probability, the value will always be between 0 and 1. For example, a p-value of 0.05 means that, if there were no true association, you’d observe results this extreme 5 percent of the time (or 1 in 20) simply by chance.

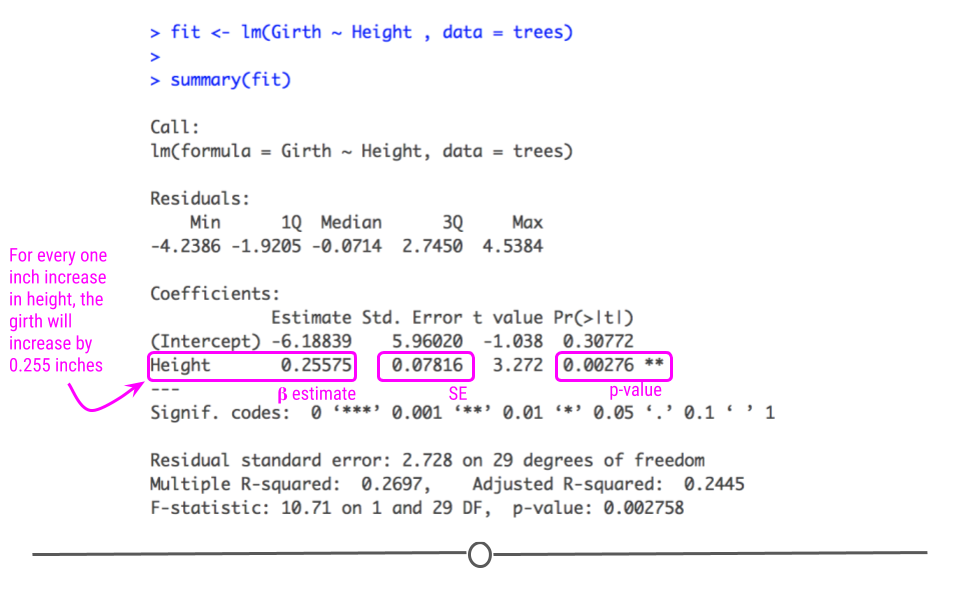

5.7.4 Association Testing in R

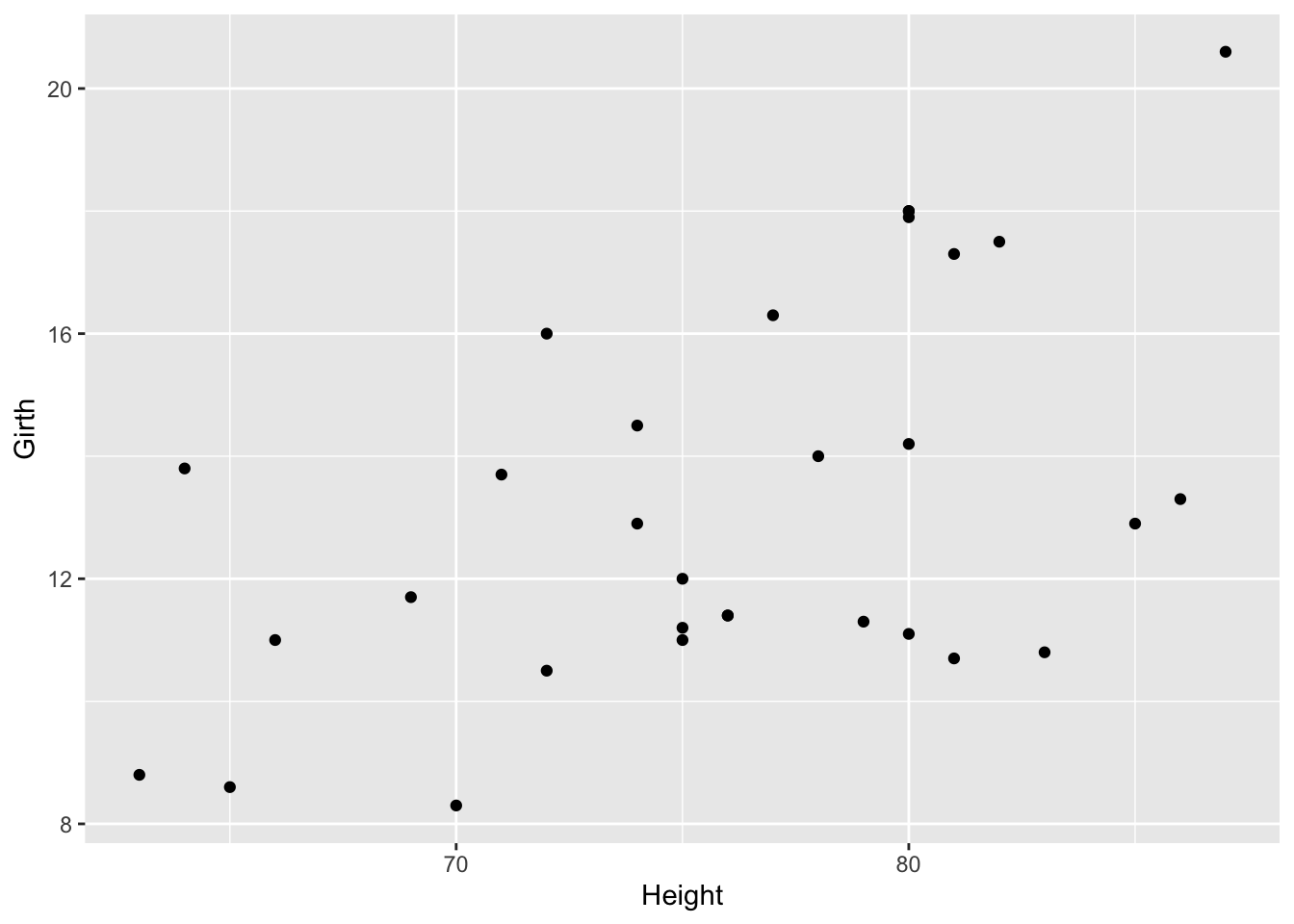

Now that we’ve discussed what you can learn from an association test, let’s look at an example in R. For this example we’ll use the trees dataset available in R, which includes girth, height, and volume measurements for 31 black cherry trees.

With this dataset, we’ll answer the question:

Can we infer the height of a tree given its girth?

Presumably, it’s easier to measure a tree’s girth (width around) than it is to measure its height. Thus, here we want to know whether or not height and girth are associated.

In this case, since we’re asking if we can infer height from girth, girth is the independent variable and height is the dependent variable. In other words, we’re asking does height depend on girth?

First, before carrying out the linear regression to test for association and answer this question, we have to be sure linear regression is appropriate. We’ll test for linearity and homoscedasticity.

To do so, we’ll first use ggplot2 to generate a scatterplot of the variables of interest.

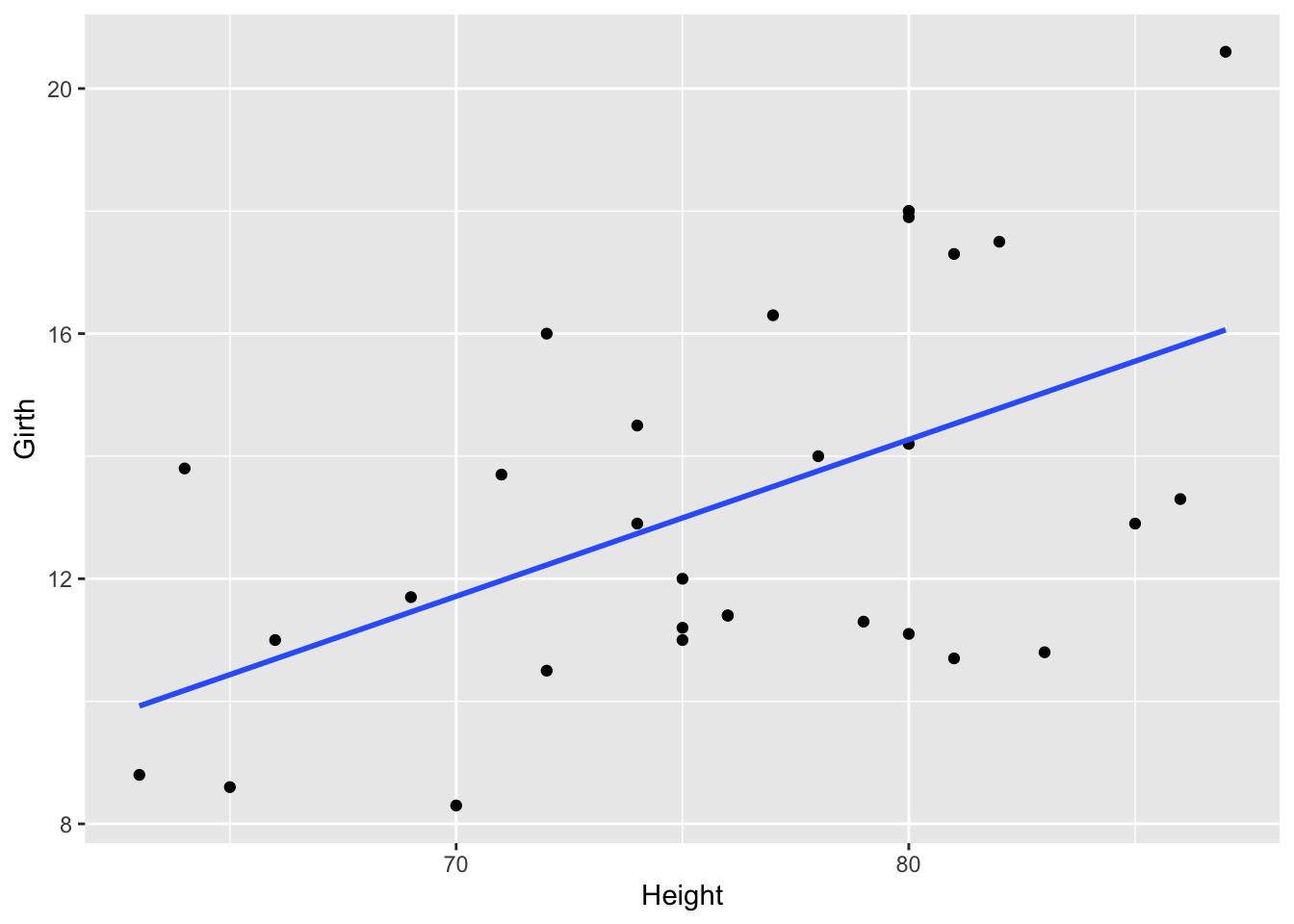

From the looks of this plot, the relationship looks approximately linear, but to make this a little easier to see, we’ll add a line of best fit to the plot.

## `geom_smooth()` using formula = 'y ~ x'

On this graph, the relationship looks approximately linear and the variance (distance from points to the line) is constant across the data. Given this, it’s appropriate to use linear regression for these data.
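The plotting code is not shown above, so the following is a sketch of how such a scatterplot with a line of best fit could be produced with ggplot2. Putting Girth on the x-axis and Height on the y-axis follows the choice of independent and dependent variables made earlier; the object name `p` is illustrative.

```r
library(ggplot2)

# trees ships with base R: Girth (inches), Height (feet), Volume (cubic feet)
p <- ggplot(trees, aes(x = Girth, y = Height)) +
  geom_point() +
  geom_smooth(method = "lm", se = FALSE)  # adds the line of best fit

p
```

Dropping the geom_smooth() layer gives the plain scatterplot.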

5.7.5 Fitting the Model

Now that that’s established, we can run the linear regression. To do so, we’ll use the lm() function to fit the model. The syntax for this function is lm(dependent_variable ~ independent_variable, data = dataset).
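Applied to the trees data, the syntax above could look like the following sketch. The object name `fit` is an arbitrary choice.

```r
# Height is the dependent variable and Girth the independent variable
fit <- lm(Height ~ Girth, data = trees)

# Printing the model shows the intercept and the slope (beta estimate)
fit
coef(fit)
```

The fitted object stores everything needed for the diagnostics and summaries that follow.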

5.7.6 Model Diagnostics

Above, we discussed a number of assumptions of linear regression. After fitting a model, it’s necessary to check the model to see if the model satisfies the assumptions of linear regression. If the model does not fit the data well (for example, the relationship is nonlinear), then you cannot use and interpret the model.

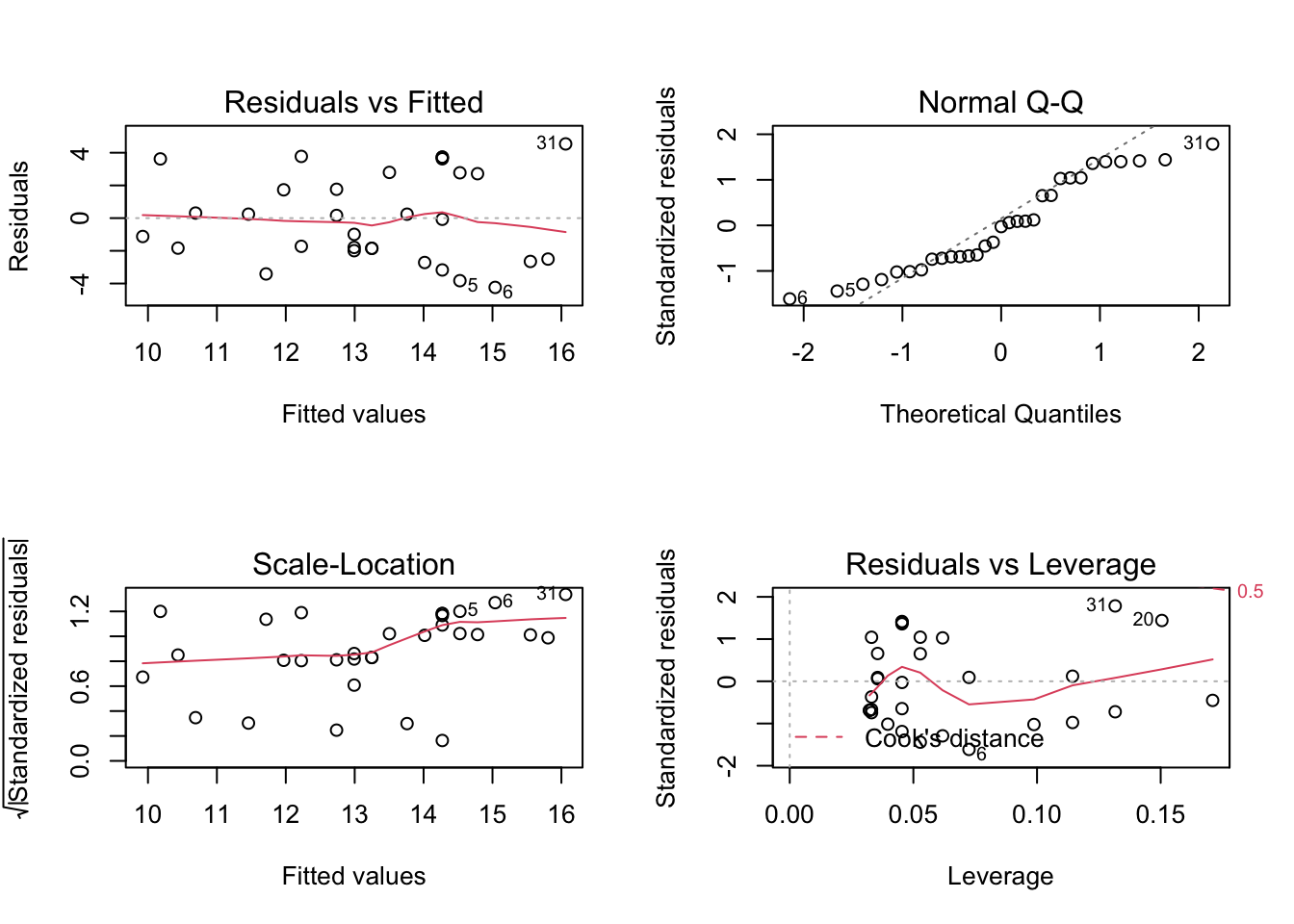

In order to assess your model, a number of diagnostic plots can be very helpful. Diagnostic plots can be generated using the plot() function with the fitted model as an argument.

This generates four plots:

- Residuals vs Fitted - checks the linear relationship assumption of linear regression. A linear relationship will demonstrate a horizontal red line here. Deviations from a horizontal line suggest nonlinearity and that a different approach may be necessary.

- Normal Q-Q - checks whether or not the residuals (the differences between the observed and predicted values) from the model are normally distributed. In a well-fit model, the points fall along the dashed line on the plot. Deviation from this line suggests that a different analytical approach may be required.

- Scale-Location - checks the homoscedasticity of the model. A horizontal red line with points equally spread out indicates a well-fit model. A non-horizontal line or points that cluster together suggest that your data are not homoscedastic.

- Residuals vs Leverage - helps to identify outlier or extreme values that may disproportionately affect the model’s results. Their inclusion or exclusion from the analysis may affect the results of the analysis. Note that the top three most extreme values are identified with numbers next to the points in all four plots.
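As a sketch, the four diagnostic plots for the tree model could be generated as follows. The 2 x 2 layout set with par() is just a convenience so that all four plots appear together.

```r
fit <- lm(Height ~ Girth, data = trees)

# Arrange the four diagnostic plots in a 2 x 2 grid
old_par <- par(mfrow = c(2, 2))
plot(fit)
par(old_par)  # restore the previous plotting layout
```

Calling plot(fit) without changing the layout shows the same four plots one after another.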

5.7.7 Tree Girth and Height Example

In our example looking at the relationship between tree girth and height, we can first check linearity of the data by looking at the Residuals vs Fitted plot. Here, we do see a red line that is approximately horizontal, which is what we’re looking for. Additionally, we’re looking to be sure there is no clear pattern in the points on the plot - we want them to be random on this plot. Clustering of a bunch of points together or trends in this plot would indicate that the data do not have a linear relationship.

To check for homogeneity of the variance, we can turn to the Scale-Location plot. Here, we’re again looking for a horizontal red line. In this dataset, there’s a suggestion that there is some heteroscedasticity, with points not being equally far from the regression line across the observations.

While not discussed explicitly here in this lesson, we will note that when the data are nonlinear or the variances are not homogeneous (are not homoscedastic), transformations of the data can often be applied and then linear regression can be used.

QQ Plots are very helpful in assessing the normality of residuals. Normally distributed residuals will fall along the grey dotted line. Deviation from the line suggests the residuals are not normally distributed. Here, in this example, the points do not fall perfectly along the dotted line, suggesting that our residuals are not perfectly normally distributed.

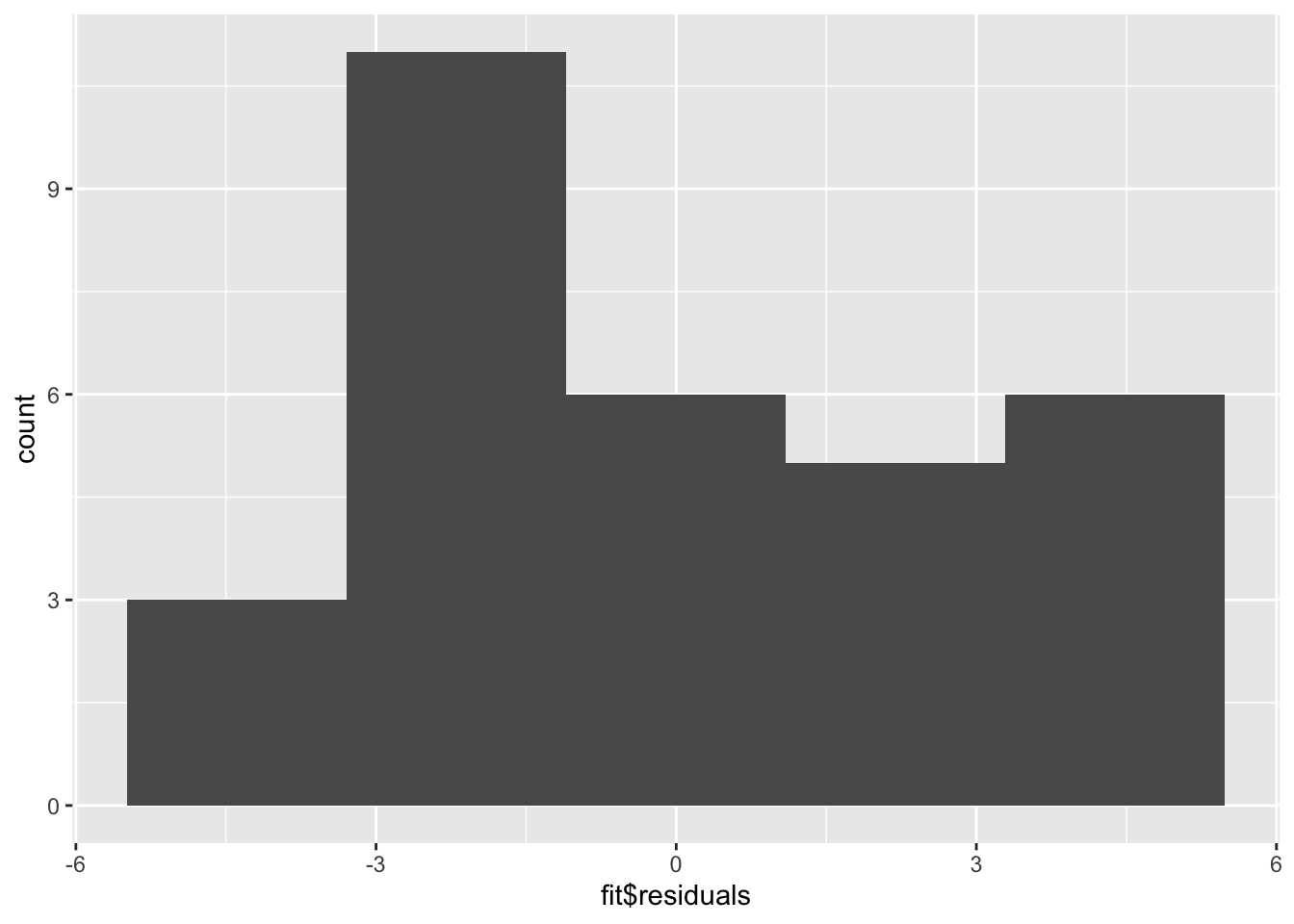

A histogram (or density plot) of the residuals can also be used for this portion of regression diagnostics. Here, we’re looking for a Normal distribution of the residuals.

The QQ Plot and the histogram of the residuals should generally tell the same story. Here, we see that with our limited sample size, we have fairly good Normally distributed residuals; and, the points do not fall wildly far from the dotted line.
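A histogram and a QQ plot of the residuals can be drawn with base R, as in this sketch. Note that it uses the raw residuals, whereas the QQ plot produced by plot() on a fitted model uses standardized residuals; the overall shape is what matters here.

```r
fit <- lm(Height ~ Girth, data = trees)
res <- residuals(fit)

# Histogram of the residuals: look for a roughly bell-shaped distribution
hist(res, main = "Residuals of Height ~ Girth", xlab = "Residual")

# Q-Q plot of the same residuals with a reference line
qqnorm(res)
qqline(res)
```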

Finally, whether or not outliers (extreme observations) are driving our results can be assessed by looking at the Residuals vs Leverage plot.

Generally speaking, standardized residuals greater than 3 or less than -3 are to be considered as outliers. Here, we do not see any values in that range (by looking at the y-axis), suggesting that there are no extreme outliers driving the results of our analysis.
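The rule of thumb above can also be checked numerically rather than by eye. This sketch uses rstandard() from base R to compute the standardized residuals for the tree model.

```r
fit <- lm(Height ~ Girth, data = trees)

# Standardized residuals, one per tree
std_res <- rstandard(fit)

# Which observations, if any, exceed the +/- 3 rule of thumb?
which(abs(std_res) > 3)

# The largest standardized residual in absolute value
max(abs(std_res))
```

An empty result from which() means that no observation crosses the threshold.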

5.7.8 Interpreting the Model

Since the relationship in our example appears to be linear, does not appear to be driven by outliers, is approximately homoscedastic, and has residuals that, while not perfectly Normally distributed, fall close to the line in the QQ plot, we can go on to discuss how to interpret the results of the model.

The summary() function summarizes the fitted model and its output. We can see the values we’re interested in in this summary, including the beta estimate, the standard error (SE), and the p-value.

Specifically, from the beta estimate, which is positive, we confirm that the relationship is positive (which we could also tell from the scatterplot). We can also interpret this beta estimate explicitly.

The beta estimate (also known as the beta coefficient, shown in the Estimate column) is the amount the dependent variable is expected to change given a one-unit increase in the independent variable. In the case of the trees, where girth is recorded in inches and height in feet, a beta estimate of 1.054 says that for every additional inch of girth, a tree’s height is expected to be 1.054 feet greater, on average. Thus, we not only know that there’s a positive relationship between the two variables, but we also have an estimate of how much one variable changes given a single-unit increase in the other variable. Note that we’re looking at the second row in the output here, where the row label is “Girth”. This row quantifies the relationship between our two variables. The first row quantifies the intercept, or where the line crosses the y-axis.

The standard error and p-value are also included in this output. Error is typically something we want to minimize (in life and statistical analyses), so the smaller the error, the more confident we are in the association between these two variables.

The beta estimate and the standard error are then both considered in the calculation of the p-value (found in the column Pr(>|t|)). The smaller this value is, the more confident we are that this relationship is not due to random chance alone.
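To show how the estimate and the standard error combine into the p-value, here is a sketch that pulls the coefficient table out of summary() and recomputes the t statistic and p-value by hand. The variable names are illustrative.

```r
fit <- lm(Height ~ Girth, data = trees)

# Coefficient table: Estimate, Std. Error, t value, Pr(>|t|)
coefs <- summary(fit)$coefficients
coefs

beta <- coefs["Girth", "Estimate"]
se   <- coefs["Girth", "Std. Error"]

# The t statistic is the estimate divided by its standard error
t_stat <- beta / se

# Two-sided p-value from the t distribution with n - 2 degrees of freedom
p_value <- 2 * pt(abs(t_stat), df = df.residual(fit), lower.tail = FALSE)

c(t = t_stat, p = p_value)
```

The hand-computed values match the t value and Pr(>|t|) columns of the summary, which is why a larger estimate or a smaller standard error leads to a smaller p-value.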

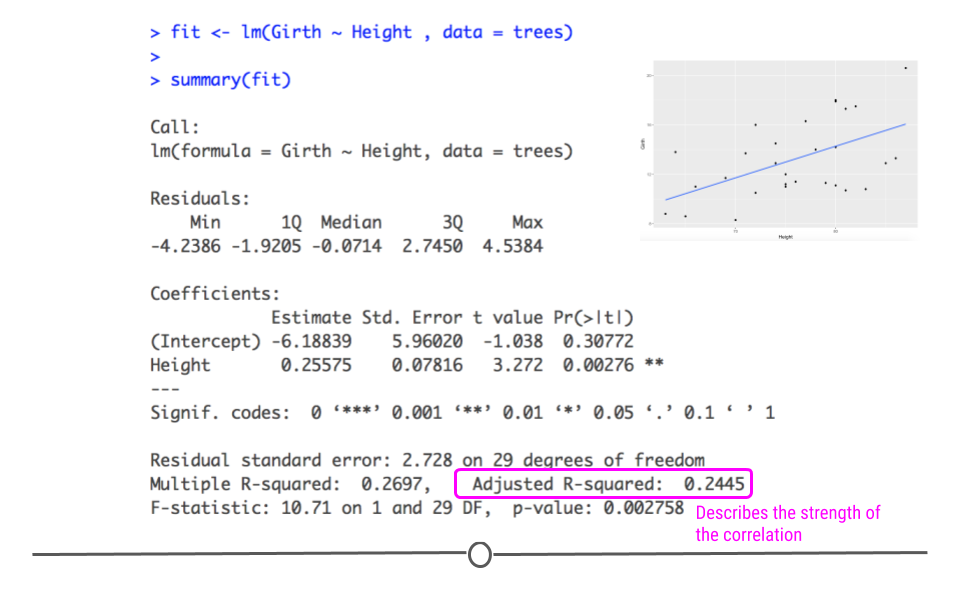

5.7.9 Variance Explained

Additionally, the strength of this relationship is summarized using the adjusted R-squared metric. This metric quantifies how much of the variance in the dependent variable this regression line explains. The more variance explained, the closer this value is to 1. And, the closer this value is to 1, the closer the points in your dataset fall to the line of best fit. The further they are from the line, the closer this value will be to zero.

As we saw in the scatterplot, the data are not right up against the regression line, so a value of 0.2445 seems reasonable, suggesting that this model (this regression line) explains 24.45% of the variance in the data.
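The R-squared values can be extracted directly from the model summary, as in this sketch. The last line illustrates that, with a single predictor, the unadjusted R-squared is simply the squared correlation between the two variables.

```r
fit <- lm(Height ~ Girth, data = trees)
model_summary <- summary(fit)

model_summary$r.squared      # proportion of variance explained
model_summary$adj.r.squared  # adjusted for the number of predictors

# With a single predictor, R-squared equals the squared correlation
cor(trees$Girth, trees$Height)^2
```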

5.7.10 Using broom

Finally, while the summary() output is visually helpful, if you want to get any of the numbers out from that model, it’s not always straightforward. Thankfully, there is a package to help you with that! The tidy() function from the broom package helps take the summary output from a statistical model and organize it into a tabular output.

## # A tibble: 2 × 5

## term estimate std.error statistic p.value

## <chr> <dbl> <dbl> <dbl> <dbl>

## 1 (Intercept) 62.0 4.38 14.2 1.49e-14

## 2 Girth 1.05 0.322 3.27 2.76e- 3

Note that the values haven’t changed. They’re just organized into an easy-to-use table. It’s helpful to keep in mind that this function and package exist as you work with statistical models.
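A table like the one above can be produced along the following lines. This is a sketch that assumes the broom and dplyr packages are installed; the object names are illustrative.

```r
library(broom)
library(dplyr)

fit <- lm(Height ~ Girth, data = trees)

# One row per model term, one column per statistic
tidy_fit <- tidy(fit)
tidy_fit

# Because the result is a tibble, individual numbers are easy to extract
girth_estimate <- tidy_fit %>%
  filter(term == "Girth") %>%
  pull(estimate)

girth_estimate

# glance() gives one-row model-level summaries such as adj.r.squared
glance(fit)
```

Working with a tibble rather than printed output means the usual dplyr verbs can be used to pick out exactly the numbers you need.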

Finally, it’s important to always keep in mind that the interpretation of your inferential data analysis is incredibly important. When you use linear regression to test for association, you’re looking at the relationship between the two variables. While girth can be used to infer a tree’s height, this is just a correlation. It does not mean that an increase in girth causes the tree to grow more. Associations are correlations. They are not causal.

For now, however, in response to our question, can we infer a black cherry tree’s height from its girth, the answer is yes. We would expect, on average, a tree’s height to increase 1.054 feet for every one inch increase in girth.

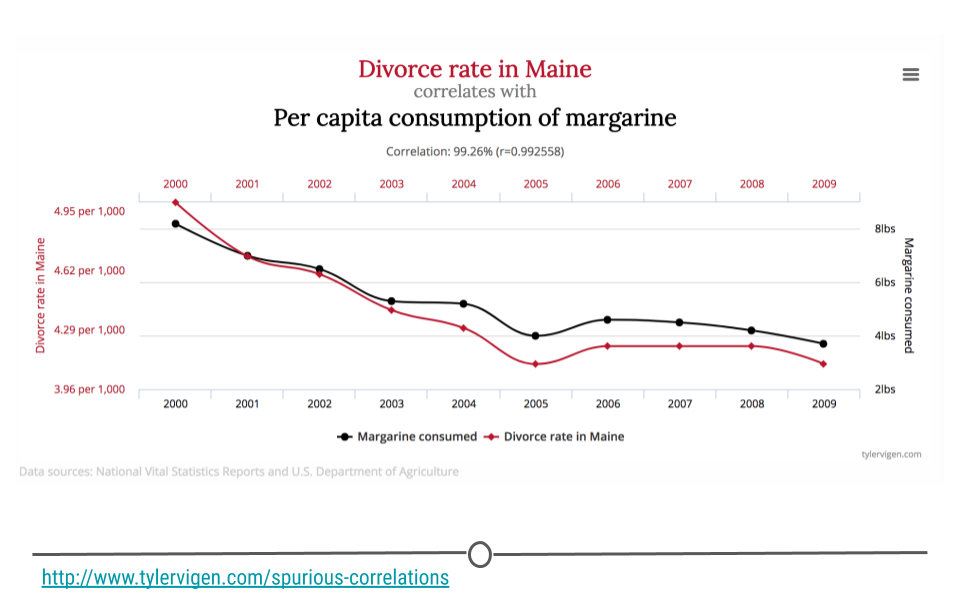

5.7.11 Correlation Is Not Causation

You’ve likely heard someone say before that “correlation is not causation,” and it’s true! In fact, there are entire websites dedicated to this concept. Let’s make sure we know exactly what that means before moving on. In the plot you see here, as the divorce rate in Maine decreases, so does per capita consumption of margarine. These two lines are clearly correlated; however, there isn’t really a strong (or any) argument to say that one caused the other. Thus, just because you see two things with the same trend does not mean that one caused the other. These are simply spurious correlations – things that trend together by chance. Always keep this in mind when you’re doing inferential analysis, and be sure that you never draw causal claims when all you have are associations.

In fact, one could argue that the only time you can make causal claims is when you have carried out a randomized experiment. Randomized experiments are studies that are designed and carried out by randomly assigning certain subjects to one treatment and the rest of the individuals to another treatment. The treatment is then applied and the results are then analyzed. In the case of a randomized experiment, causal claims can start to be made. Short of this, however, be careful with the language you choose and do not overstate your findings.

5.7.12 Confounding

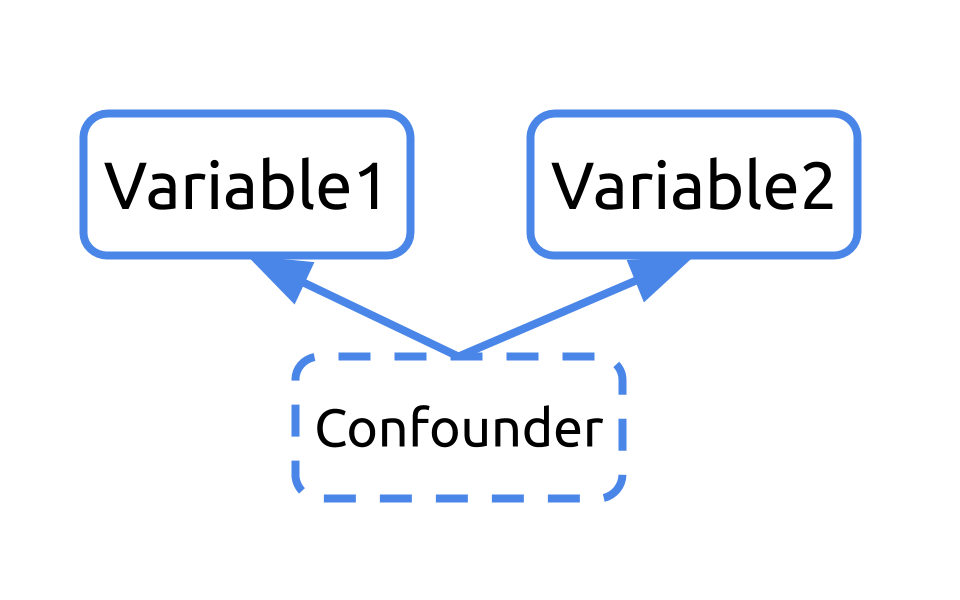

Confounding is something to watch out for in any analysis you’re doing that looks at the relationship between two or more variables. So…what is confounding?

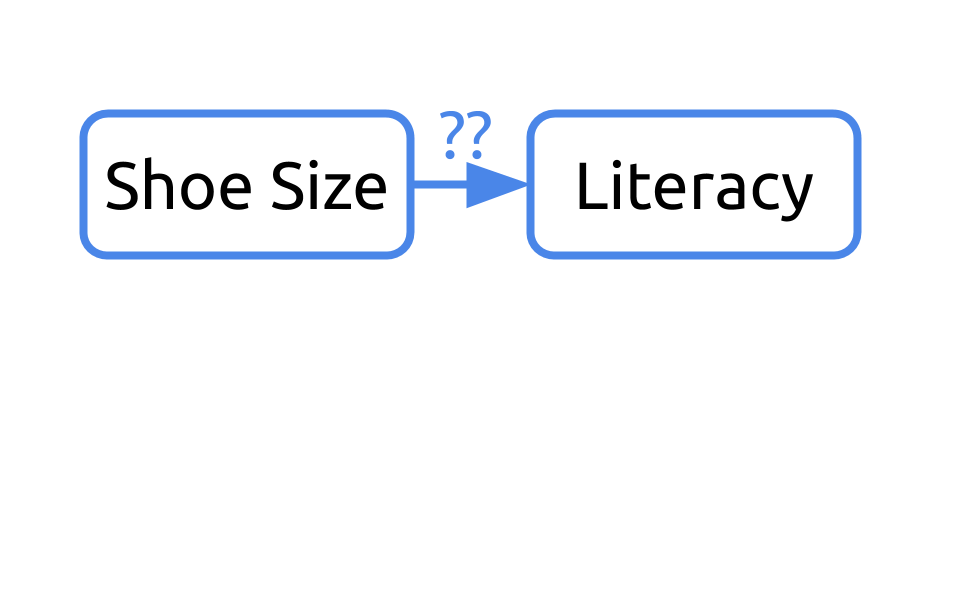

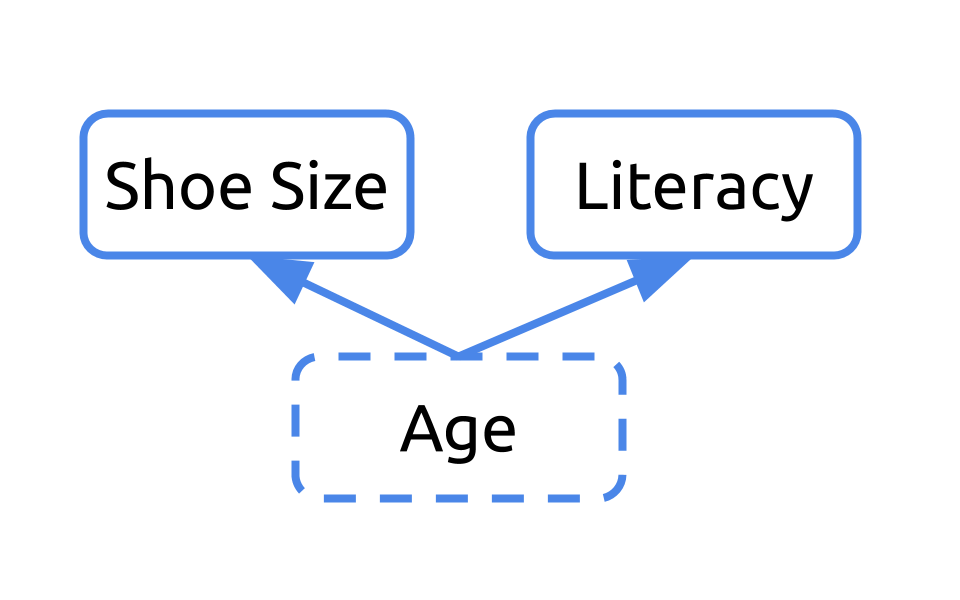

Let’s consider an example. What if we were interested in understanding the relationship between shoe size and literacy? To do so, we took a look at this small sample of two humans, one who wears small shoes and is not literate and one who wears big shoes and is literate.

If we were to diagram this question, we may ask “Can we infer literacy rates from shoe size?”

If we return to our sample, it’d be important to note that one of the humans is a young child and the other is an adult.

Our initial diagram failed to take into consideration the fact that these humans differed in their age. Age affects their shoe size and their literacy rates. In this example, age is a confounder.

Any time you have a variable that affects both your dependent and independent variables, it’s a confounder. Ignoring confounders is not appropriate when analyzing data. In fact, in this example, you would have concluded that people who wear small shoes have lower literacy rates than those who wear large shoes. That would have been incorrect. In fact, that analysis was confounded by age. Failing to correct for confounding has led to misreporting in the media and retraction of scientific studies. You don’t want to be in that situation. So, always consider and check for confounding among the variables in your dataset.

5.8 Multiple Linear Regression

There are ways to effectively handle confounders within an analysis. Confounders can be included in your linear regression model. When included, the analysis takes into account the fact that these variables are confounders and carries out the regression, removing the effect of the confounding variable from the estimates calculated for the variable of interest.

This type of analysis is known as multiple linear regression, and the general format is: lm(dependent_variable ~ independent_variable + confounder, data = dataset).
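To see what removing the effect of a confounder looks like in practice, here is a small sketch using simulated data modeled loosely on the shoe size example. Everything in it is made up for illustration: the variable names, the sample size, and the numeric relationships are assumptions, and by construction shoe size has no direct effect on the reading score.

```r
set.seed(42)  # arbitrary seed so the simulation is reproducible

## simulated (made-up) data: age drives both shoe size and reading score,
## and shoe size has no direct effect on reading score
n <- 500
age <- runif(n, min = 3, max = 18)
shoe_size <- 1 + 0.5 * age + rnorm(n, sd = 1)
reading_score <- 10 + 5 * age + rnorm(n, sd = 5)
sim <- data.frame(age, shoe_size, reading_score)

## ignoring the confounder: shoe size appears strongly associated
naive_fit <- lm(reading_score ~ shoe_size, data = sim)
coef(naive_fit)["shoe_size"]

## including the confounder: the shoe size coefficient shrinks toward zero
adjusted_fit <- lm(reading_score ~ shoe_size + age, data = sim)
coef(adjusted_fit)["shoe_size"]
```

In the first model the shoe size coefficient absorbs the effect of age. Once age is included, that coefficient reflects only what shoe size adds beyond age, which in this simulation is nothing.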

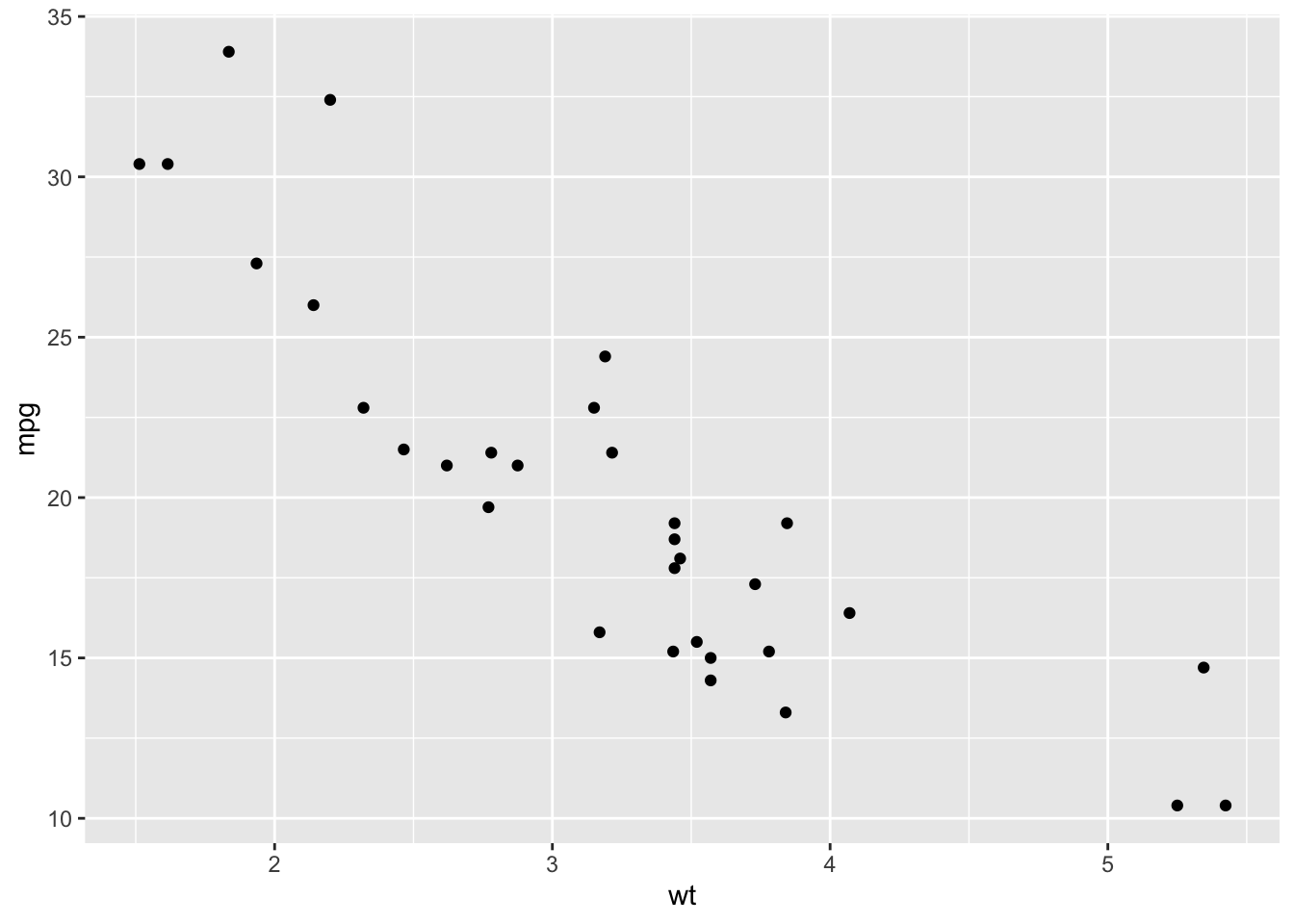

As a simple example, let’s return to the mtcars dataset, which we’ve worked with before. In this dataset, we have data from 32 automobiles, including their weight (wt), miles per gallon (mpg), and Engine (vs, where 0 is “V-shaped” and 1 is “straight”).

Suppose we were interested in inferring the mpg a car would get based on its weight. We’d first look at the relationship graphically:

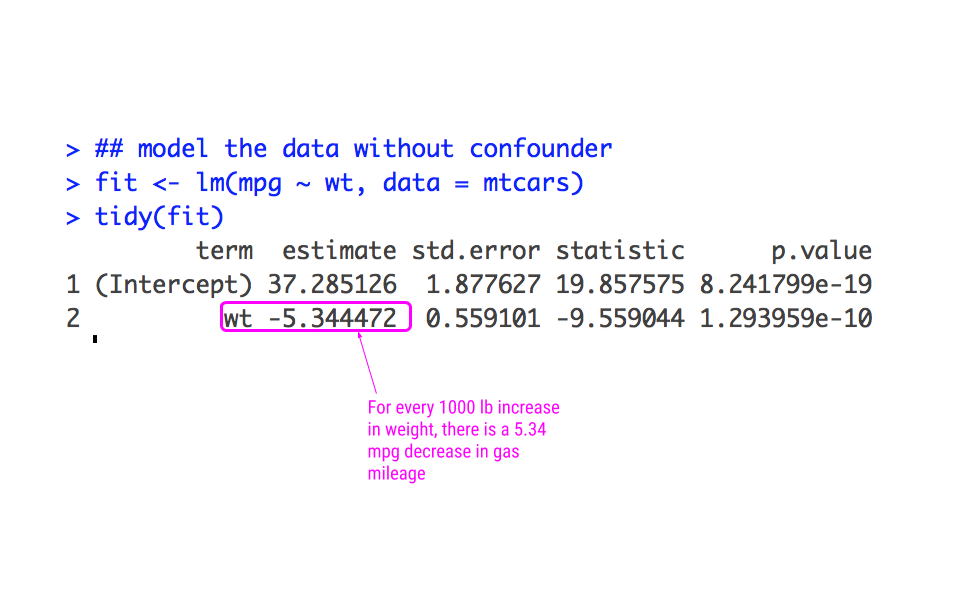

From the scatterplot, the relationship looks approximately linear and the variance looks constant. Thus, we could model this using linear regression:

From this analysis, we would infer that for every additional 1000 lbs a car weighs, it gets 5.34 fewer miles per gallon.
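A minimal sketch of this model, assuming it was fit as mpg ~ wt on the built-in mtcars dataset (the object name fit_wt is ours):

```r
## simple linear regression of miles per gallon on weight (in 1000 lbs)
fit_wt <- lm(mpg ~ wt, data = mtcars)

## the wt row gives the expected change in mpg per additional 1000 lbs
coef(summary(fit_wt))
```

The negative sign on the wt coefficient is what tells us that heavier cars tend to get fewer miles per gallon.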

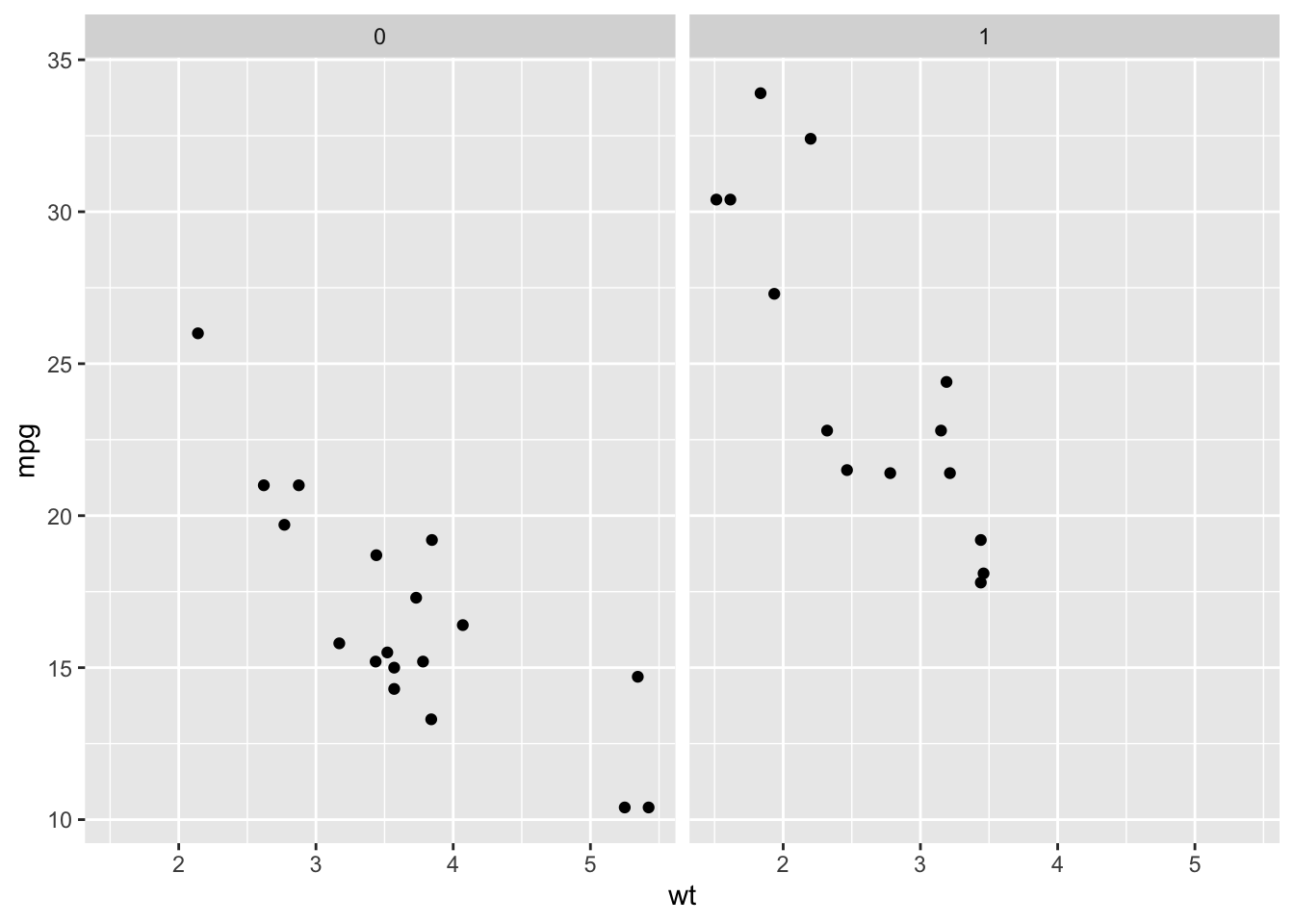

However, we know that the weight of a car doesn’t necessarily tell the whole story. The type of engine in the car likely affects both the weight of the car and the miles per gallon the car gets. Graphically, we could see if this were the case by looking at these scatterplots:

## look at the difference in relationship

## between Engine types

ggplot(mtcars, aes(wt, mpg)) +

geom_point() +

facet_wrap(~vs)

From this plot, we can see that V-shaped engines (vs = 0) tend to be heavier and get fewer miles per gallon, while straight engines (vs = 1) tend to weigh less and get more miles per gallon. Importantly, however, we see that a car that weighs 3000 pounds (wt = 3) and has a V-shaped engine (vs = 0) gets fewer miles per gallon than a car of the same weight with a straight engine (vs = 1), suggesting that modeling mpg from weight alone is not appropriate.

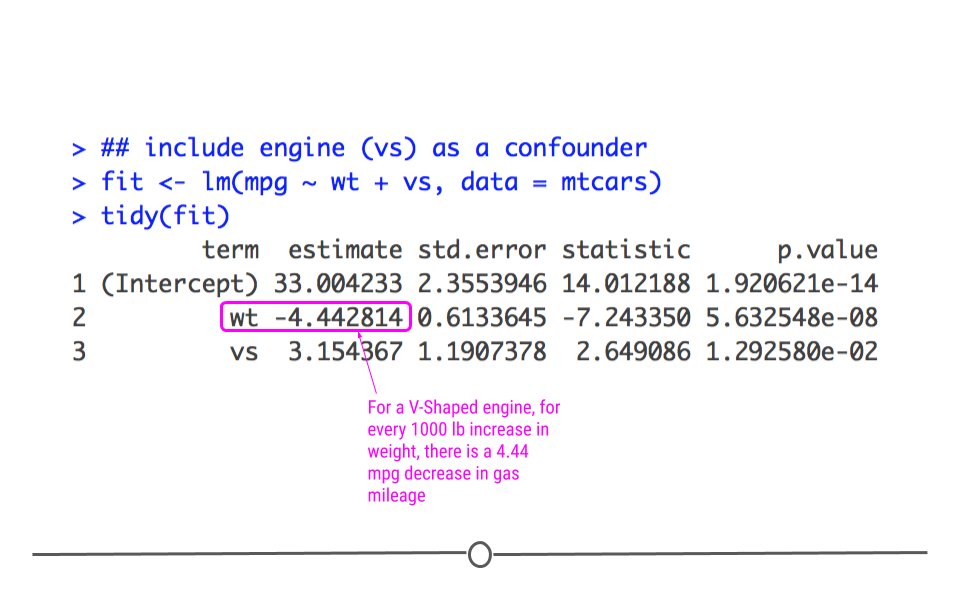

Let’s then model the data, taking this confounding into account:

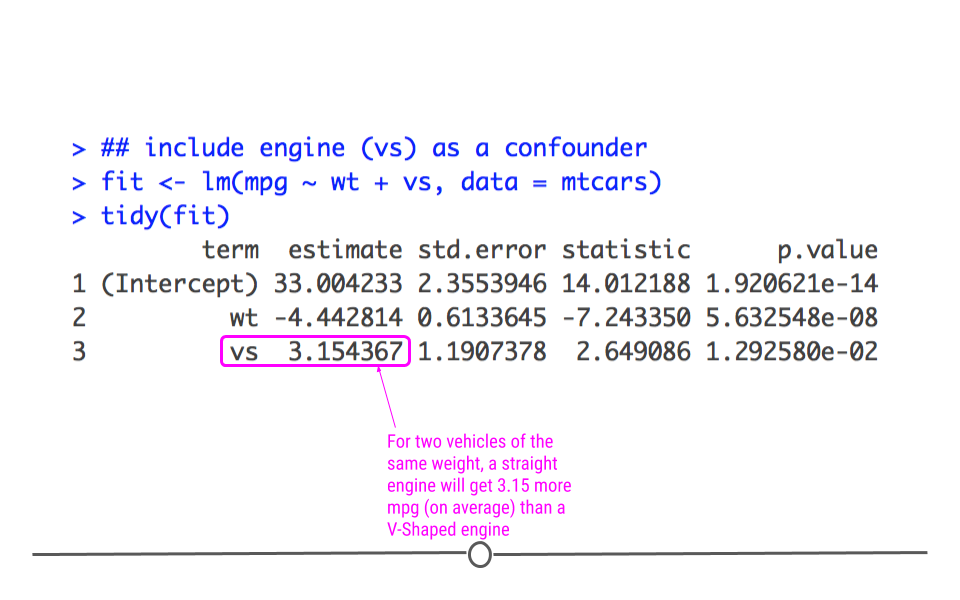

Here, we get a more accurate picture of what’s going on. Interpreting multiple regression models is slightly more complicated since there are more variables; however, we’ll practice how to do so now.

The best way to interpret the coefficients in a multiple linear regression model is to focus on a single variable of interest and hold all other variables constant. For instance, we’ll focus on weight (wt) while holding engine type (vs) constant. This means that, for cars with the same engine type, we expect to see a 4.44 miles per gallon decrease for every 1000 lb increase in weight.

We can similarly interpret the coefficients by focusing on the engines (vs). For example, for two cars that weigh the same, we’d expect a straight engine (vs = 1) to get 3.15 more miles per gallon than a V-Shaped engine (vs= 0).
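The coefficients interpreted above can be reproduced with a call of the general form given earlier. This is a sketch that assumes the model is mpg ~ wt + vs on mtcars; the object name fit_wt_vs is our own.

```r
## multiple linear regression: weight plus engine type as a confounder
fit_wt_vs <- lm(mpg ~ wt + vs, data = mtcars)

## one row per term: (Intercept), wt, and vs
coef(summary(fit_wt_vs))

## expected mpg for two cars of the same weight (3000 lbs) that differ
## only in engine type; the difference equals the vs coefficient
same_weight <- data.frame(wt = c(3, 3), vs = c(0, 1))
predicted_mpg <- predict(fit_wt_vs, newdata = same_weight)
predicted_mpg[2] - predicted_mpg[1]
```

Because the model is additive, the difference between the two predictions is the same at any weight, which is what holding weight constant means here.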

Finally, we’ll point out that the p-value for wt increased in this model relative to the model where we didn’t account for confounding. This is because the first model did not take the engine difference into account, so some of the effect of engine type was attributed to weight. Frequently when confounders are accounted for, the p-value will increase, and that’s OK. What’s important is that the data are most appropriately modeled.

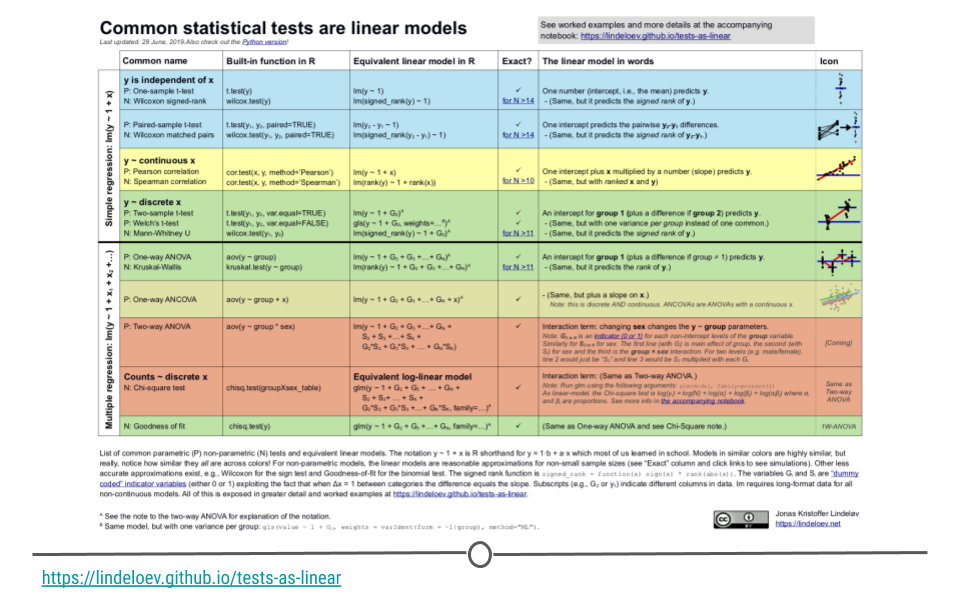

5.9 Beyond Linear Regression

While we’ve focused on linear regression in this lesson on inference, linear regression isn’t the only analytical approach out there. However, it is arguably the most commonly used. And, beyond that, there are many statistical tests and approaches that are slight variations on linear regression, so having a solid foundation and understanding of linear regression makes understanding these other tests and approaches much simpler.

For example, what if you didn’t want to measure the linear relationship between two variables, but instead wanted to know whether or not the average observed is different from expectation?

5.9.1 Mean Different From Expectation?

To answer a question like this, let’s consider the case where you’re interested in analyzing data about a single numeric variable. If you were doing descriptive statistics on this dataset, you’d likely calculate the mean for that variable. But what if, in addition to knowing the mean, you wanted to know if the values in that variable were all within the bounds of normal variation? You could calculate that using inferential data analysis. You could use the data you have to infer whether or not the data are within the expected bounds.

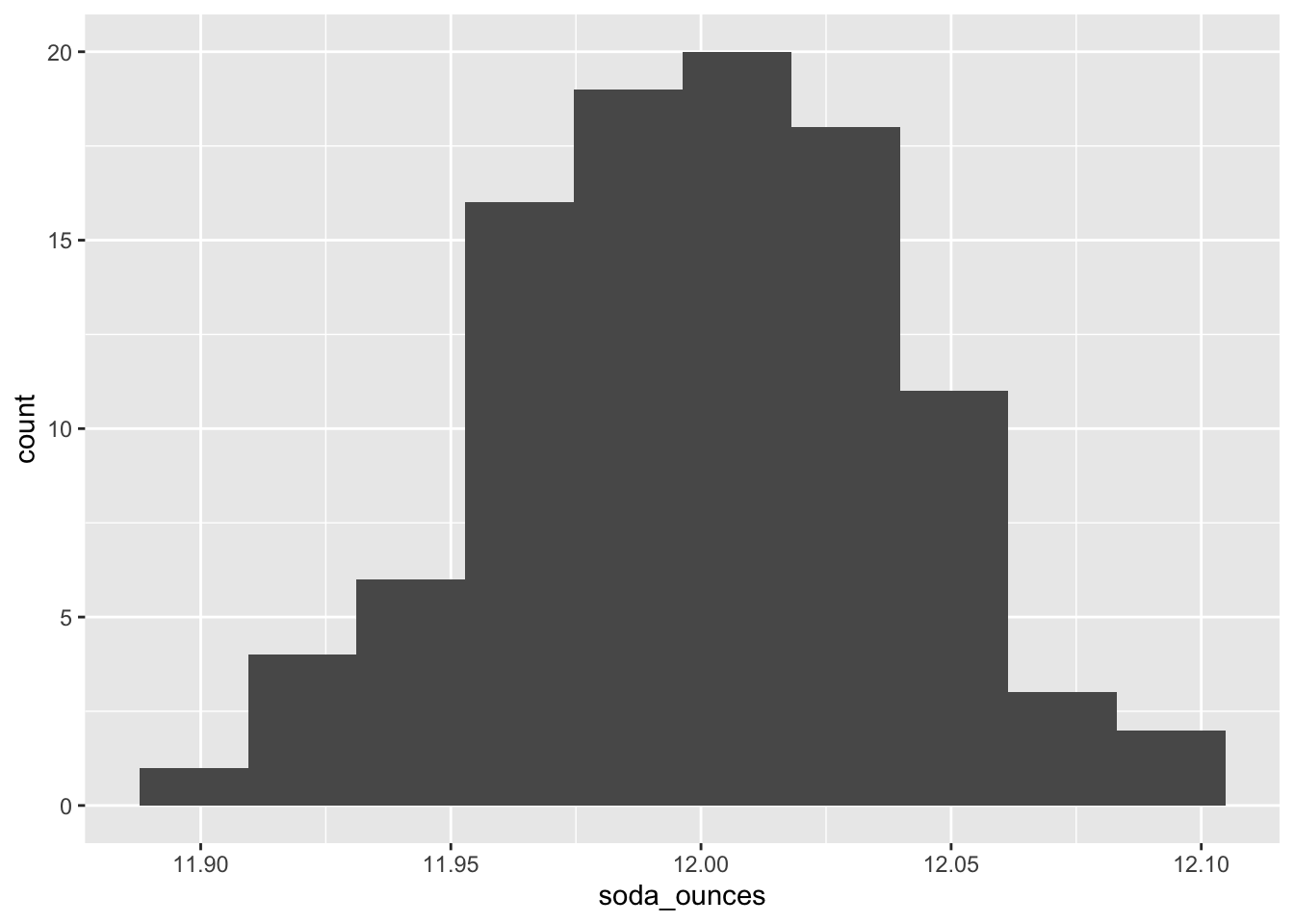

For example, let’s say you had a dataset that included the number of ounces actually included in 100 cans of a soft drink. You’d expect that each can have exactly 12 oz of liquid; however, there is some variation in the process. So, let’s test whether or not you’re consistently getting shorted on the amount of liquid in your can.

In fact, let’s go ahead and generate the dataset ourselves!

## generate the dataset

set.seed(34)

soda_ounces <- rnorm(100, mean = 12, sd = 0.04)

head(soda_ounces)

## [1] 11.99444 12.04799 11.97009 11.97699 11.98946 11.98178

In this code, we’re specifying that we want to take a random draw of 100 different values (representing our 100 cans of soft drink), where the mean is 12 (representing the 12 ounces of soda expected to be within each can), and allowing for some variation (we’ve set the standard deviation to be 0.04).

We can see that the values are approximately, but not always exactly, equal to the expected 12 ounces.

5.9.2 Testing Mean Difference From Expectation in R

To make an inference as to whether or not we’re consistently getting shorted, we’re going to use this sample of 100 cans. Note that we’re using this sample of cans to infer something about all cans of this soft drink, since we aren’t able to measure the number of ounces in all cans of the soft drink generated.

To carry out this statistical test, we’ll use a t-test.

Wait, we haven’t talked about that statistical test yet. So, let’s take a quick detour to discuss t-tests and how they relate to linear regression.

R has a built in t-test function: t.test().

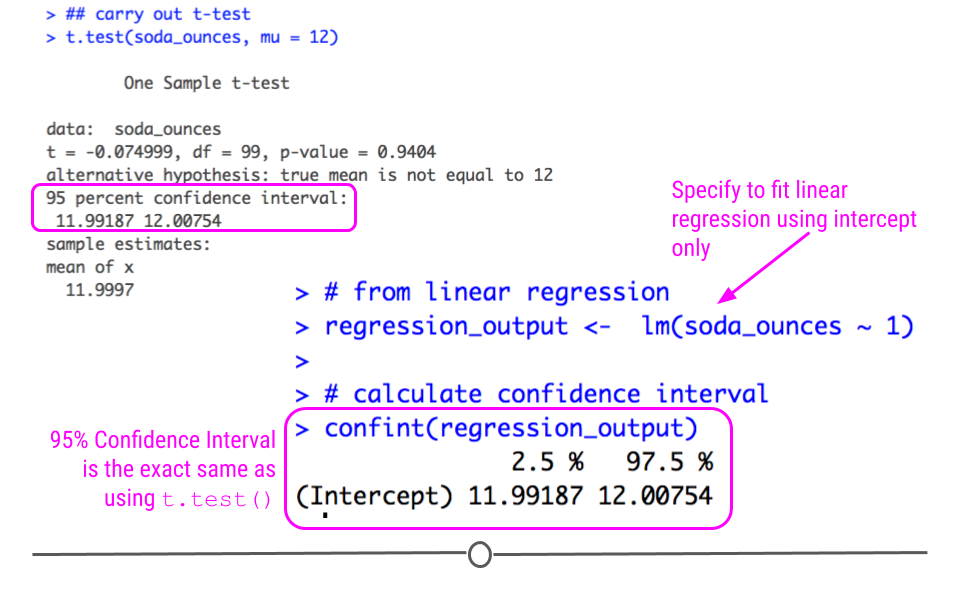

However, we mentioned earlier that many statistical tests are simply extensions of linear regression. In fact, a one-sample t-test is simply a linear model where we specify to only fit an intercept (where the line crosses the y-axis). In other words, this specifies to calculate the mean…which is exactly what we’re looking to do here with our t-test! We’ll compare these two approaches below.

However, before we can do so, we have to ensure that the data follow a normal distribution, since this is the primary assumption of the t-test.

library(ggplot2)

## check for normality

ggplot(as.data.frame(soda_ounces))+

geom_histogram(aes(soda_ounces), bins = 10)

Here, we see that the data are approximately normally distributed.

A t-test will check whether the mean of the observed ounces differs from the expected mean (12 oz). As mentioned above, to run a t-test in R, most people use the built-in function: t.test().
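A call along these lines would run the one-sample test. This is a sketch: it regenerates soda_ounces with the same seed used earlier so that it is self-contained, and the object name soda_test is our own.

```r
## regenerate the simulated cans so this example runs on its own
set.seed(34)
soda_ounces <- rnorm(100, mean = 12, sd = 0.04)

## one-sample t-test: is the true mean different from 12 oz?
soda_test <- t.test(soda_ounces, mu = 12)
soda_test

## individual pieces of the result can be extracted by name
soda_test$conf.int
soda_test$p.value
```

The mu argument sets the expected mean being tested against; if it is omitted, t.test() tests against a mean of 0.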

##

## One Sample t-test

##

## data: soda_ounces

## t = -0.074999, df = 99, p-value = 0.9404

## alternative hypothesis: true mean is not equal to 12

## 95 percent confidence interval:

## 11.99187 12.00754

## sample estimates:

## mean of x

## 11.9997

In the output from this function, we’ll focus on the 95 percent confidence interval. Confidence intervals provide a range of values likely to contain the unknown population parameter. Here, the population parameter we’re interested in is the mean. If we repeated the sampling many times and calculated a 95% confidence interval each time, about 95 percent of those intervals would contain the true population mean. More specifically, if the 95 percent confidence interval contains the expected mean (12 oz), then the data give us no evidence that the company is shorting us on the amount of liquid they’re putting into each can.

Here, since 12 is between 11.99187 and 12.00754, we can see that the amounts in the 100 sampled cans are within the expected variation. We could infer from this sample that the population of all cans of this soft drink is likely to have an appropriate amount of liquid in the cans.
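To build intuition for what “95 percent” means, the following sketch repeats the sampling many times and counts how often the interval contains the true mean. The number of repetitions and the seed are arbitrary choices for illustration, and the data are simulated with a true mean of exactly 12.

```r
set.seed(1)  # arbitrary seed, chosen only for reproducibility

## repeat the "sample 100 cans" experiment many times
n_experiments <- 1000
contains_true_mean <- replicate(n_experiments, {
  cans <- rnorm(100, mean = 12, sd = 0.04)
  ci <- t.test(cans, mu = 12)$conf.int
  ci[1] <= 12 && 12 <= ci[2]   # does this interval contain the true mean?
})

## proportion of intervals that contain the true mean of 12
coverage <- mean(contains_true_mean)
coverage
```

The proportion should land close to 0.95. Any single interval either contains the true mean or it does not; the 95 percent describes how often the procedure succeeds across repeated samples.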

However, as mentioned previously, t-tests are an extension of linear regression. We could also look to see whether or not the cans had the expected average of 12 oz in the data collected using lm().

# from linear regression

regression_output <- lm(soda_ounces ~ 1)

# calculate confidence interval

confint(regression_output)

Note that the confidence interval is the exact same here using lm() as above when we used t.test()! We bring this up not to confuse you, but to guide you away from trying to memorize each individual statistical test and instead understand how they relate to one another.
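As a quick check of this equivalence, the two intervals can be compared directly. This sketch regenerates the data with the same seed so that it runs on its own; the object names are ours.

```r
## regenerate the simulated cans so this example is self-contained
set.seed(34)
soda_ounces <- rnorm(100, mean = 12, sd = 0.04)

## confidence interval from the one-sample t-test
t_interval <- as.numeric(t.test(soda_ounces, mu = 12)$conf.int)

## confidence interval from the intercept-only linear model
lm_interval <- as.numeric(confint(lm(soda_ounces ~ 1)))

## TRUE when the two intervals agree up to numerical tolerance
all.equal(t_interval, lm_interval)
```

Both approaches estimate the same mean with the same standard error and the same degrees of freedom, so the intervals agree.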

5.10 More Statistical Tests

Now that you’ve seen how to measure the linear relationship between variables (linear regression) and how to determine if the mean of a dataset differs from expectation (t-test), it’s important to know that you can ask lots of different questions using extensions of linear regression. These have been nicely summarized by Jonas Kristoffer Lindeløv in his blog post Common statistical tests are linear models (or: how to teach stats).

5.11 Hypothesis Testing

You may have noticed in the previous sections that we were asking a question about the data. We did so by testing if a particular answer to the question was true.

For example:

- In the cherry tree analysis we asked “Can we infer the height of a tree given its girth?” We expected that we could. Thus we had a statement that “tree height can be inferred from its girth” (or “can be predicted by girth”).

- In the car mileage analysis we asked “Can we infer the miles the car can go per gallon of gasoline based on the car weight?” We expected that we could. Thus we had a statement that “car mileage can be inferred from car weight”.

- We took this further and asked “Can we infer the miles the car can go per gallon of gasoline based on the car weight and car engine type?” We again expected that we could. Thus we had a statement that “car mileage can be inferred from weight and engine type”.

- In the soda can analysis we asked “Do soda cans really have 12 ounces of fluid?” We expected that they often do. Thus we had a statement that “soda cans typically have 12 ounces, the mean amount is 12”.

A common problem in data science involves developing evidence for or against testable statements like the ones above. These testable statements are called hypotheses. Typically, the way these problems are structured is that a statement is made about the world (the hypothesis) and then the data are used (usually in the form of a summary statistic) to support or reject that statement.

Recall that we defined a p-value as “the probability of getting the observed results (or results more extreme) by chance alone.” Often p-values are used to decide whether the data support the statement or give reason to reject it.

Typically a p-value of 0.05 is used as the threshold. However, remember that it is best to report more than just the p-value, including estimates and standard errors among other statistics. Different statistical tests allow for testing different hypotheses.

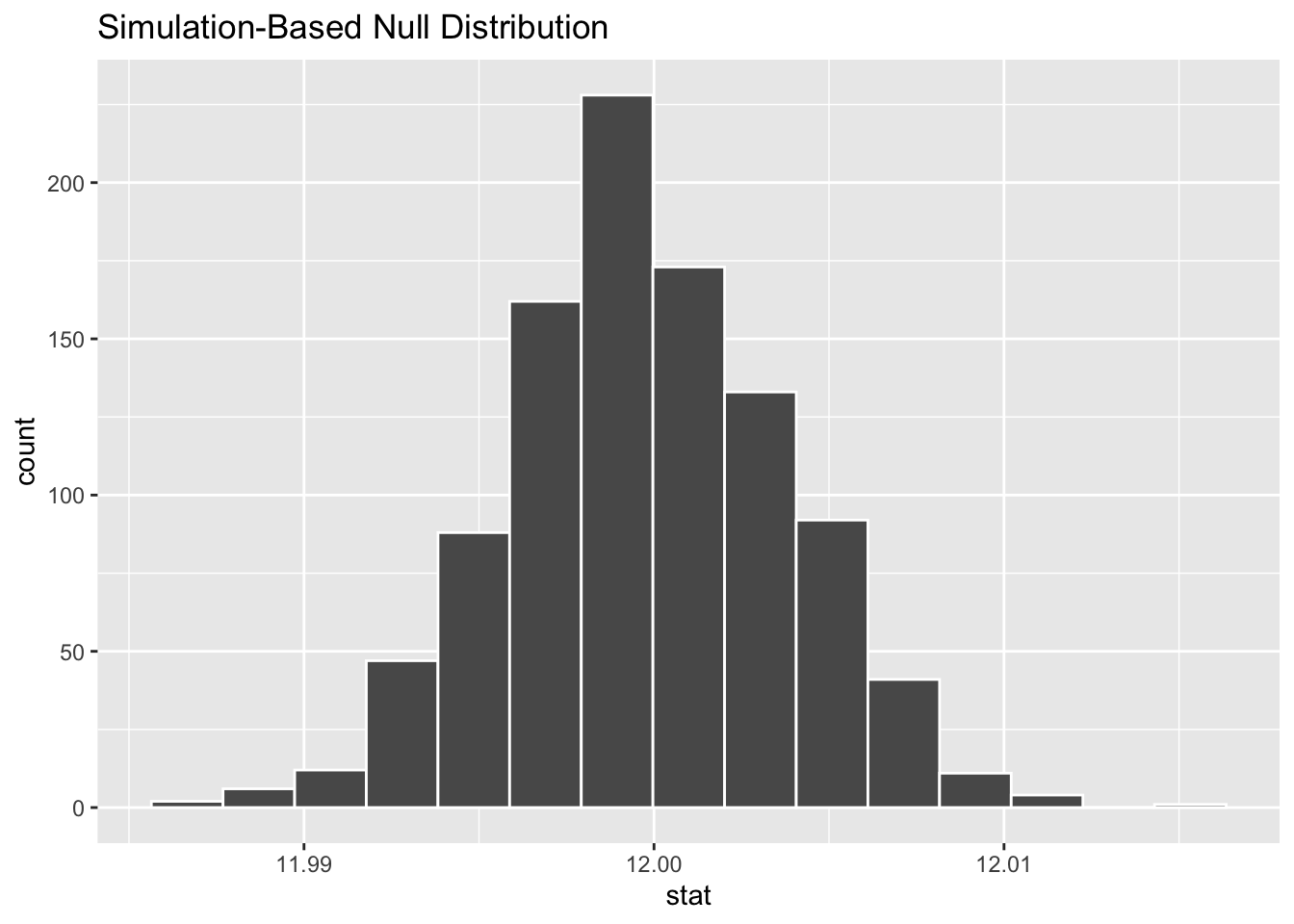

5.11.1 The infer Package

The infer package simplifies inference analyses. Users can quickly calculate a variety of statistics and perform statistical tests including those that require resampling, permutation, or simulations using data that is in tidy format.

In fact, users can even perform analyses based on specified hypotheses with the hypothesize() function.

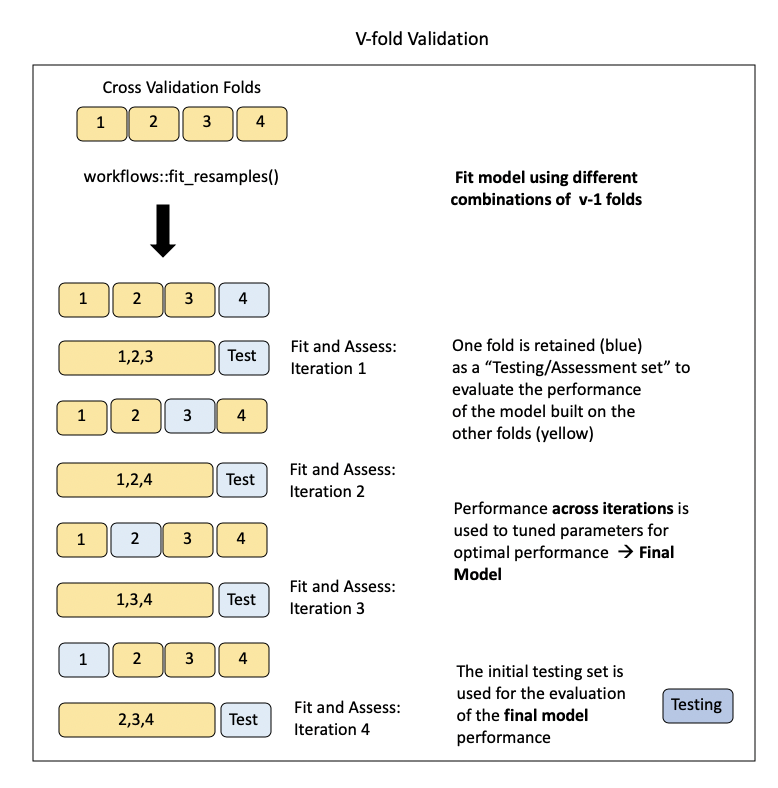

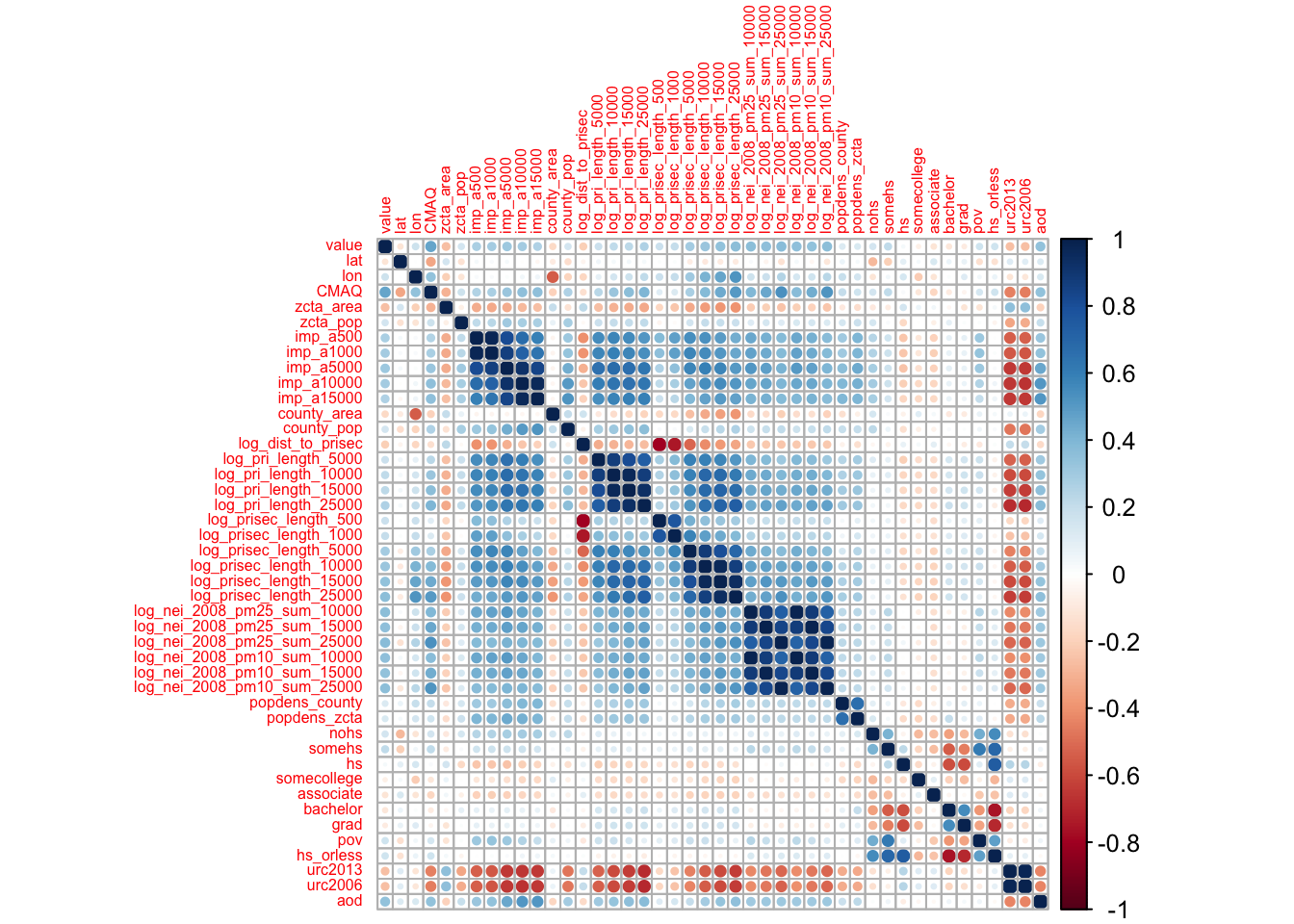

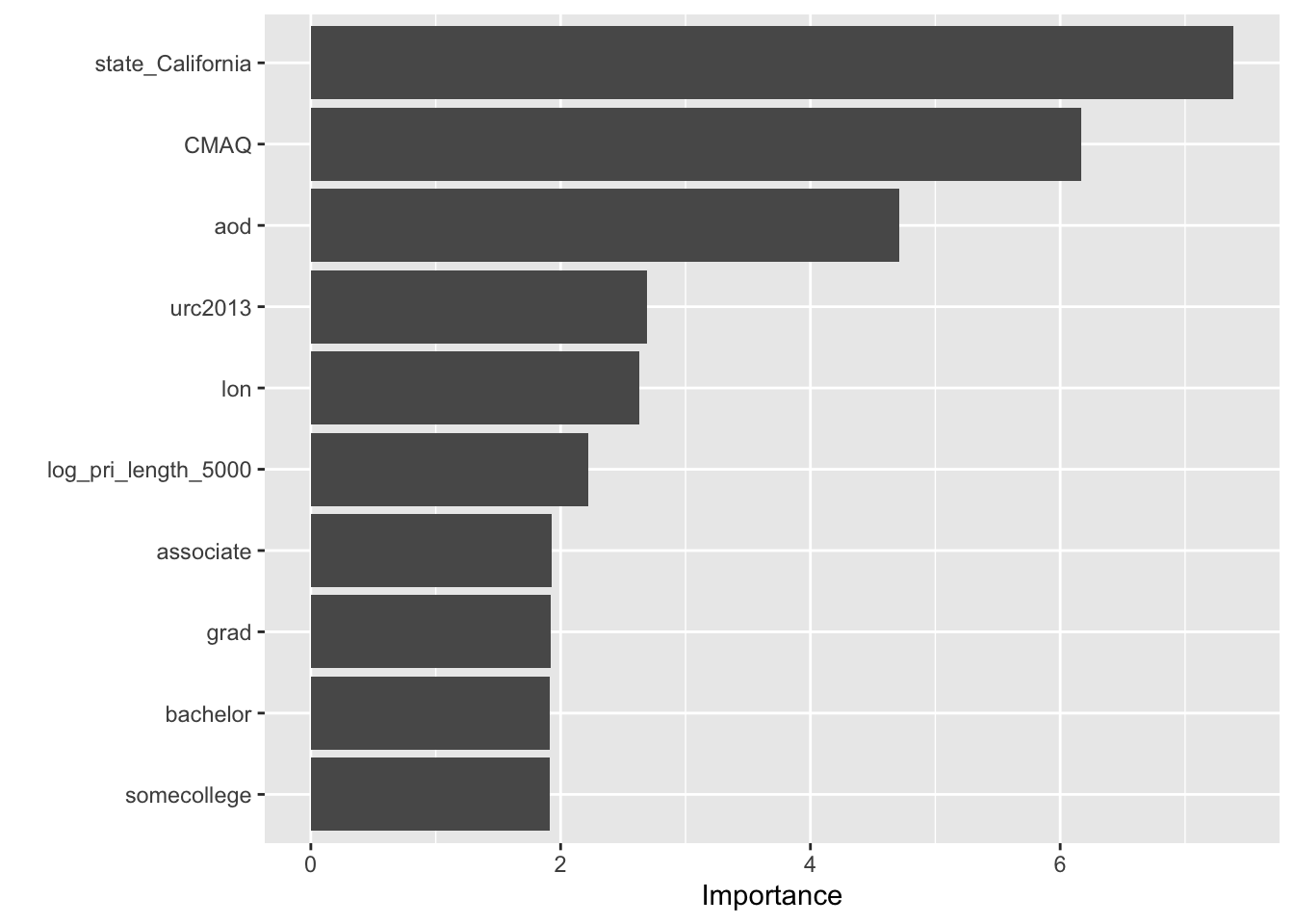

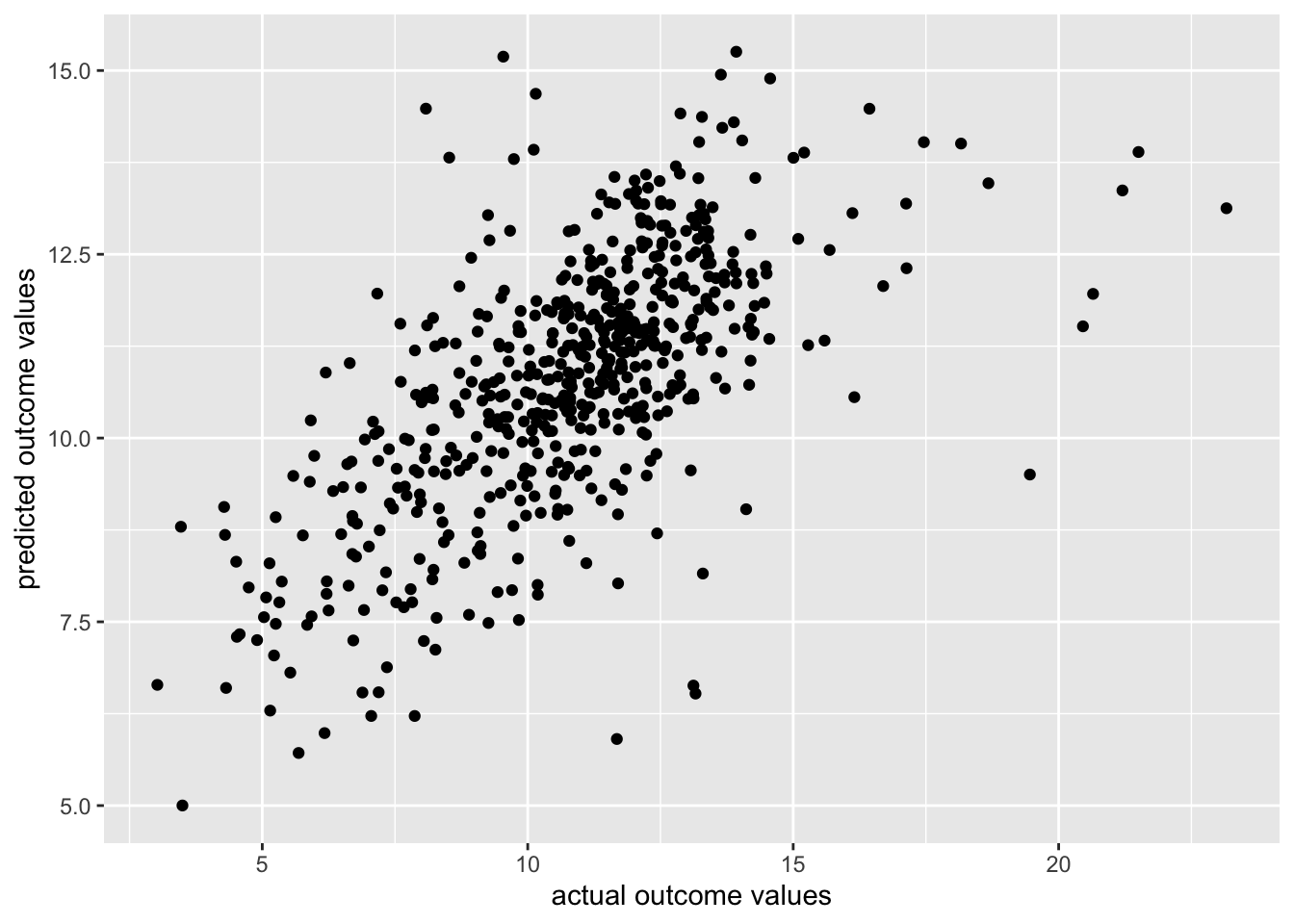

To illustrate how to use this package, we will repeat the soda can analysis that we just did, this time using infer.